A Step-by-Step Guide to Data Engineering CI/CD with Microsoft Fabric

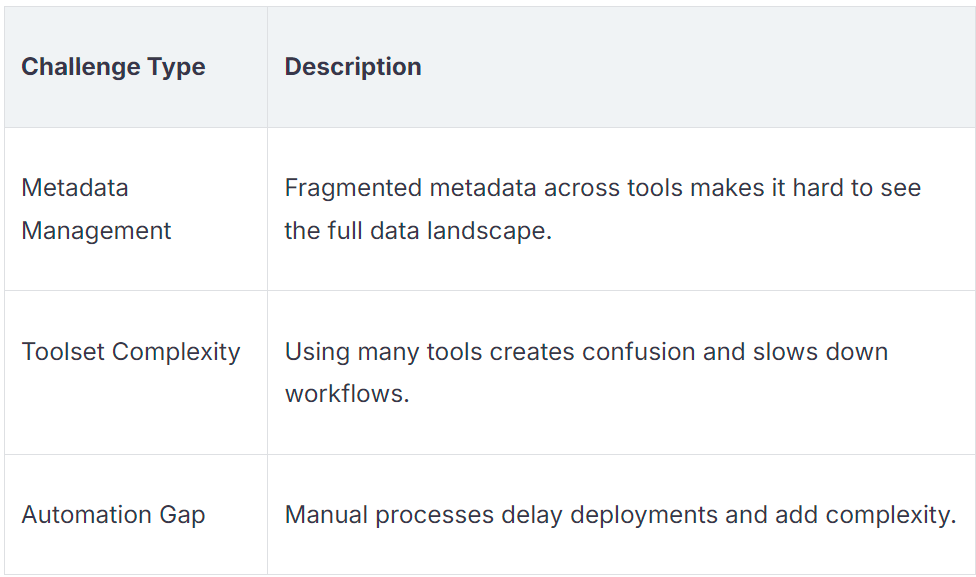

You want a practical way to manage Data Engineering projects in Microsoft Fabric. Many teams face challenges like fragmented metadata, toolset complexity, and automation gaps.

When you connect Lakehouse, Spark Notebooks, Warehouses, Data Pipelines, Semantic Models, and Power BI under Git and CI/CD, you boost collaboration, streamline deployments, and aut…