How AI Is Shaping the Future of Data Security Protections

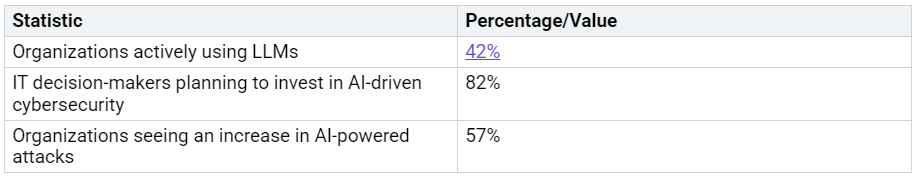

AI is changing how you keep your data safe. Many companies now use AI to protect sensitive information, but the threats are growing as well. For example, 74% of IT professionals say AI-powered threats are a significant problem, and phishing attacks have risen by 1,265%, driven by generative AI. You need to act quickly to protect your data.