How to Manage Data Efficiently in Microsoft Fabric Lakehouse with Spark SQL

Efficient data management is very important today. Many people rely on data. 25,000 organizations use Microsoft Fabric Lakehouse. This includes 67% of the Fortune 500 companies. This shows a clear need for good solutions. Spark SQL helps you manage data better in many ways:

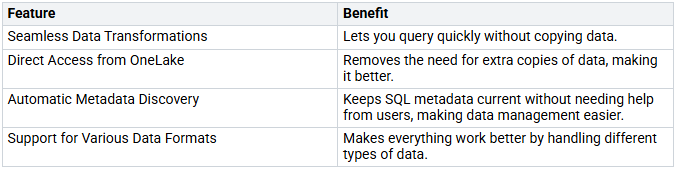

By learning these tools, you can improve your data work and get useful insights f…