Securing sensitive data in AI tools is essential. A reported 68% of organizations have had data leaks involving AI tools, which shows why strong data rules are needed. Microsoft 365 Copilot helps you work better, but it also raises concerns about data safety. You need to balance working faster with keeping sensitive information safe. Trusted AI can help you find this balance by offering features that strengthen data rules while making work easier.

Key Takeaways

Know the risks of sharing data when using Microsoft 365 Copilot. Sharing too much and having weak permissions can cause sensitive information to leak.

Use strong data protection methods like Double Key Encryption. Clearly define and label sensitive information to strengthen security.

Set clear rules for using AI outputs, and align them with your legal requirements. Ensure all employees know how to use AI safely.

Watch AI interactions closely to stop unauthorized access. Use tools like Data Loss Prevention and compliance checks to keep data safe.

Train users on AI rules. Teaching employees about data security risks helps lower problems and encourages responsible AI use.

Data Security in Microsoft 365 Copilot

Understanding Data Exposure Risks

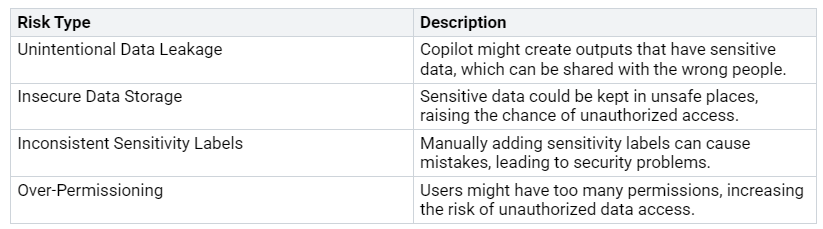

When you use Microsoft 365 Copilot, you need to understand its data exposure risks. Oversharing and weak permissions are the main causes. For example, if documents are shared too widely, sensitive information can reach the wrong people when Copilot generates output that draws on that content.

Organizations have lost data and faced insider threats because of these issues. For instance, over 3% of sensitive business data was shared across the organization without following sharing rules. This shows that data leakage is a serious risk with Microsoft 365 Copilot. Copilot also works with the permissions of the person using it: if a user can see sensitive information, Copilot can reach it too, which can expose confidential data.
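Once you have an inventory of who can open each file, you can check for oversharing mechanically. The following is a minimal sketch, not a Microsoft API: the `FileShare` record, the `BROAD_GROUPS` names, the label names, and the sample data are all hypothetical. In practice the inventory would come from whatever permissions report your tenant can export.

```python
from dataclasses import dataclass, field

# Hypothetical group names that mean "nearly everyone"; adjust to your tenant.
BROAD_GROUPS = {"Everyone", "Everyone except external users", "All Company"}


@dataclass
class FileShare:
    """One row of a hypothetical permissions inventory."""
    path: str
    label: str  # sensitivity label, e.g. "Confidential"
    shared_with: set = field(default_factory=set)


def find_overshared(files, sensitive_labels=("Confidential", "Highly Confidential")):
    """Return files that carry a sensitive label and are shared with a broad group."""
    return [
        f for f in files
        if f.label in sensitive_labels and f.shared_with & BROAD_GROUPS
    ]


inventory = [
    FileShare("/hr/salaries.xlsx", "Confidential", {"Everyone"}),
    FileShare("/hr/handbook.docx", "General", {"Everyone"}),
    FileShare("/finance/forecast.xlsx", "Highly Confidential", {"Finance Team"}),
]

flagged = find_overshared(inventory)
for f in flagged:
    print(f"Overshared: {f.path} ({f.label})")
```

Only the salary file is flagged here: the handbook is broadly shared but not sensitive, and the forecast is sensitive but limited to one team. Because Copilot inherits the user's access, files flagged this way are the ones most likely to surface in its output.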

Implementing Data Masking Strategies

To keep sensitive information safe in Microsoft 365 Copilot, combine data masking with strong encryption. One option is Double Key Encryption (DKE), which protects content with two keys: one is held in Microsoft Azure, and the other stays under your organization's control. Both keys are needed to open the content, so only authorized people can see it.

Here are some key strategies for data masking:

Clearly define sensitive data: Identify and sort sensitive information like personally identifiable information (PII), financial data, and intellectual property.

Find and list sensitive data: Know how sensitive data moves inside and outside your organization.

Standardize classification labels: Use common terms for classification to improve data handling.

Check access controls: Make sure sharing practices are right for user groups.

Use change management processes: Keep employee access and data policies updated.

Set up policy life cycle management: Regularly update data protection policies to meet changing needs.
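The first three strategies above (define, find, and label sensitive data) can be illustrated with a small classifier. This is a sketch under stated assumptions, not how Microsoft Purview works internally: the regular expressions are deliberately simple, and the label names and the `LABEL_FOR` mapping are hypothetical.

```python
import re

# Illustrative patterns only; production classifiers need far more careful rules.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "credit_card": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
}

# Hypothetical mapping from a detected data type to a standardized label.
LABEL_FOR = {
    "email": "Confidential",
    "us_ssn": "Highly Confidential",
    "credit_card": "Highly Confidential",
}

# Labels ordered from least to most restrictive.
LABEL_RANK = ["General", "Confidential", "Highly Confidential"]


def classify(text):
    """Return (label, detected_types), choosing the most restrictive label found."""
    found = sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))
    label = "General"
    for name in found:
        candidate = LABEL_FOR[name]
        if LABEL_RANK.index(candidate) > LABEL_RANK.index(label):
            label = candidate
    return label, found


print(classify("Contact jane@example.com about the invoice."))
print(classify("Employee SSN: 123-45-6789"))
print(classify("Quarterly meeting notes"))
```

Keeping the label list short and ordered is the point of standardizing classification: every detector maps to the same small vocabulary, and the most restrictive match wins.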

By using these data security measures, you can greatly lower the risk of data exposure and improve the overall security of your organization while using Microsoft 365 Copilot.

Governance for Microsoft 365 Copilot Outputs

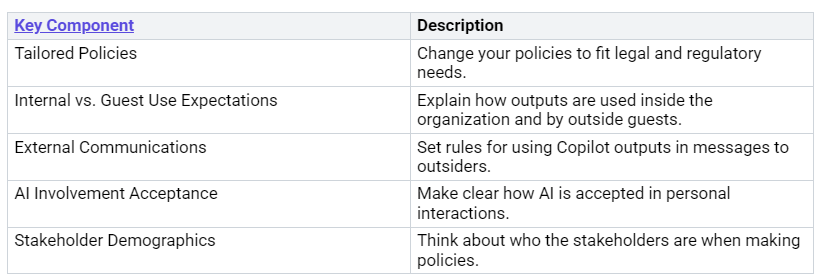

Establishing Clear Usage Policies

To manage Microsoft 365 Copilot outputs well, you need clear usage policies. These policies define how your organization uses AI-generated content. They help reduce risk and keep you compliant with laws and regulations.

Specific policies lower legal and business risk. Stay current on laws about AI-generated content. Spell out when and how employees may use AI or AI-generated content, and make clear that employees are responsible for ensuring their AI use is safe and helpful.

Monitoring AI Interactions

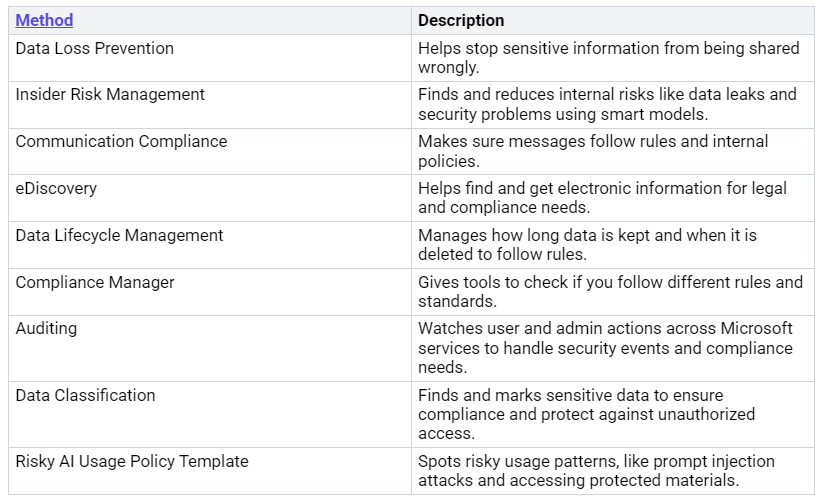

Monitoring AI interactions is essential for managing Microsoft 365 Copilot outputs. You need visibility into how users work with AI so you can confirm that they follow your rules and protect sensitive data. Tools such as Data Loss Prevention and compliance checks support this.

Watching AI interactions has many benefits for data management. It helps you follow governance rules and responsible AI guidelines. This builds trust with users and gives insights into sensitive data through discovery and classification. By improving data integrity and confidentiality, you raise overall data quality.
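One way to picture a Data Loss Prevention style check on AI output is a masking pass that redacts sensitive values and reports how many it found. The sketch below is illustrative only and does not represent a Microsoft Purview feature: the `MASK_RULES` patterns and the `mask_output` helper are hypothetical.

```python
import re

# Hypothetical masking rules: (pattern, replacement text).
MASK_RULES = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN REDACTED]"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[EMAIL REDACTED]"),
]


def mask_output(text):
    """Redact sensitive values and return (masked_text, number_of_redactions)."""
    hits = 0
    for pattern, replacement in MASK_RULES:
        text, count = pattern.subn(replacement, text)
        hits += count
    return text, hits


masked, hits = mask_output("Send 123-45-6789 to bob@example.com.")
print(masked)
print(f"{hits} value(s) redacted")
```

The redaction count is useful on its own: a nonzero count can be logged as a monitoring event even when the masked text is what the user ultimately sees.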

Security and Compliance Strategies

Integrating Security Tools

Using security tools with Microsoft 365 Copilot makes your organization’s security stronger. You can use different Microsoft security products like Sentinel, Intune, Purview, and Defender. These tools work well together to protect against threats. Also, working with outside companies like Shodan, CyberArk, CrowdSec, Quest, and Darktrace can make your security even better.

Continuous Monitoring and Auditing

Continuous monitoring and regular auditing are essential for security and compliance in Microsoft 365 Copilot. Follow these best practices to protect your organization from risk:

Use real-time data from Microsoft tools like Power BI and Application Insights to create custom reports for your organization.

Make sure users have a Microsoft 365 license and set up Microsoft Sentinel to collect audit logs from Microsoft Purview.

Regularly check user permissions and security settings to find and fix any problems quickly.

Use role-based access control to give permissions based on what users do.

Turn on multi-factor authentication for extra security when accessing sensitive data in SharePoint.

Set up monitoring and alerts to catch any strange or unauthorized access attempts.
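The last item, alerting on unusual access attempts, can be sketched as a simple threshold rule over audit records. The record shape, the sample data, and the `max_denied` threshold below are assumptions made for illustration; real audit exports contain many more fields and a different schema.

```python
from collections import Counter

# Hypothetical, simplified audit records.
audit_log = [
    {"user": "alice", "resource": "/finance/forecast.xlsx", "allowed": True},
    {"user": "bob", "resource": "/hr/salaries.xlsx", "allowed": False},
    {"user": "bob", "resource": "/hr/reviews.docx", "allowed": False},
    {"user": "bob", "resource": "/legal/contract.docx", "allowed": False},
    {"user": "carol", "resource": "/hr/salaries.xlsx", "allowed": False},
]


def users_to_alert(records, max_denied=2):
    """Return users whose denied access attempts exceed the threshold."""
    denied = Counter(r["user"] for r in records if not r["allowed"])
    return sorted(user for user, count in denied.items() if count > max_denied)


print(users_to_alert(audit_log))
```

A single denied request is usually a mistake, so the rule only alerts once a user passes the threshold. Tune the threshold to your environment so that alerts stay meaningful.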

Using Copilot’s built-in analytics dashboard helps you track agent usage and important performance indicators. Admins can also get alerts about agent activities through Microsoft Sentinel. Plus, Copilot audit logging to Microsoft Purview records actions and activities in the Copilot environment, ensuring everything is clear and accountable.

By using these security and compliance strategies, you can keep sensitive data safe and create a secure environment while getting the most out of Microsoft 365 Copilot.
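Role-based access control, mentioned in the list above, comes down to one lookup: a user has a role, and a role has a set of permitted actions. The sketch below is a generic illustration with hypothetical role names, users, and actions. It is not how Microsoft 365 stores or evaluates permissions.

```python
# Hypothetical roles and the actions each one permits.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "share"},
}

# Hypothetical assignment of users to roles.
USER_ROLES = {"alice": "admin", "bob": "viewer"}


def is_allowed(user, action):
    """Return True only if the user's role explicitly permits the action."""
    role = USER_ROLES.get(user)
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("alice", "share"))
print(is_allowed("bob", "write"))
print(is_allowed("mallory", "read"))
```

Unknown users and unknown roles fall through to an empty permission set, so the default is to deny. That default matters for Copilot, since anything a user is allowed to read is also available to the assistant acting for them.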

User Training for Responsible AI Use

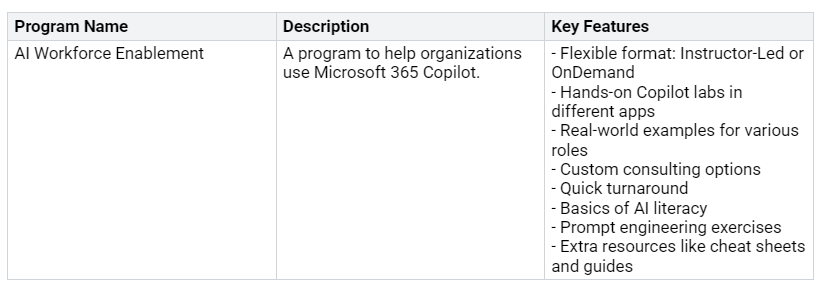

Training Employees on AI Governance

Teaching your employees about AI governance is essential for maintaining security and compliance in Microsoft 365 Copilot. When you inform your team about data security rules, they learn about the risks of using AI tools, and that knowledge can greatly lower security risks.

You can also find many resources to help your team learn about AI governance:

Become a Service Adoption Specialist

Coffee in the Cloud tutorials

Developer training

Foundational user training

IT Pro training

Microsoft 365 Champion Program

Microsoft 365 Quick Start videos

Microsoft Community Learning

Microsoft Learn

Change Management Strategies

Using AI responsibly requires good change management. You can help people accept change by focusing on clear communication and support. Here are some strategies to consider:

Effective Communication: Tailor messages to different teams. Focus on what matters to them and address their concerns about AI.

Establish AI Ambassadors: Form a group of enthusiastic employees to share new AI solutions and gather feedback from others.

Phased Training: Make changes step by step. This helps employees and customers feel comfortable and confident.

Leverage Vendor Support: Work with AI partners for advice on best practices and responsible AI use.

Support from leaders is very important too. When leaders show they back AI projects, it creates a positive atmosphere. Offering training and reskilling programs can turn resistance into excitement. Clear messages about AI’s role help reduce fears and encourage acceptance. Addressing ethical concerns directly sets guidelines that can help ease worries about using AI.

By investing in training and change management, you help your employees use Microsoft 365 Copilot responsibly. This not only boosts productivity but also protects sensitive data and ensures compliance with governance rules.

To improve the security and governance of Microsoft 365 Copilot, you can use some important strategies. First, do regular checks on access, user permissions, and lifecycle management. Use sensitivity labels to keep sensitive data safe and think about using third-party tools for better visibility.

Taking a proactive approach to data security and AI governance helps your organization find where sensitive data is stored. It also makes sure governance matches business goals. This method makes operations smoother and boosts productivity while following rules.

For next steps, check out the Knostic Copilot Readiness Assessment. This assessment will show you where sensitive data might be overshared and point out permission gaps. Create a governance plan, use sensitivity labels, and do regular checks to reduce risks effectively.

FAQ

What is Microsoft 365 Copilot?

Microsoft 365 Copilot is a tool that uses AI to help you work better. It adds AI features to Microsoft 365 apps. It helps you make content, look at data, and automate tasks. This makes your work faster and easier.

How can I secure sensitive data in Copilot?

To keep sensitive data safe in Copilot, use data masking methods, apply sensitivity labels, and set strict access rules. Check permissions often and watch how users interact to stop unauthorized access.

What are the key governance policies for AI outputs?

Important governance policies for AI outputs include setting usage rules, creating data handling steps, and following legal rules. Update these policies regularly to meet new needs.

Why is user training important for AI governance?

User training is essential for AI governance. It teaches employees about data security risks and how to use AI correctly. Informed users can better protect sensitive information and follow company rules.

How can I monitor AI interactions effectively?

You can keep an eye on AI interactions by using tools like Data Loss Prevention, compliance checks, and real-time alerts. These methods help you watch user actions and make sure they follow governance rules.