Governance, reliability, and responsible deployment determine whether AI systems can be trusted in production, especially on platforms like Azure AI Foundry. Together they help your AI solutions adhere to ethical guidelines and operate effectively. Industry reports indicate that 49% of leaders recognize that workers need better skills for ethical AI, which highlights the demand for training. More organizations are developing formal AI governance plans to address complex ethical challenges. Micah Altman from MIT notes that there is a growing consensus on the urgent need for ethical AI design, reflecting increasing awareness of the role of ethics in AI development.

Key Takeaways

Set up good AI rules using guides like the EU AI Act and OECD AI principles. This helps make sure development is fair.

Use trust and safety steps in Azure AI Foundry. This stops misuse and helps customers feel safe.

Keep an eye on AI systems all the time. Use tools like Azure Monitor to check how they work and stay reliable.

Talk to the community to get their thoughts. This helps make sure AI projects match local values and needs.

Think about fairness by making clear rules. These rules should cover fairness, openness, and responsibility in AI use.

Governance in Azure AI Foundry

Frameworks for AI Governance

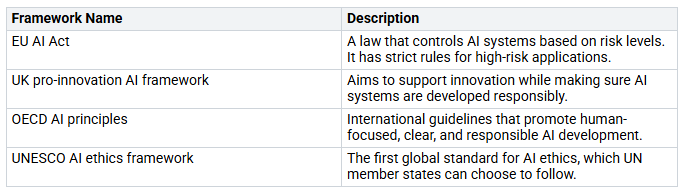

Good governance is essential to using AI systems well. In Azure AI Foundry, you can draw on several frameworks that help organizations set up strong governance practices. These frameworks guide you through the difficult parts of AI development and help you follow ethical rules. Well-known examples include the EU AI Act and the OECD AI Principles.

These frameworks help organizations create their governance plans. They stress the need for being clear, responsible, and ethical in AI development. By using these frameworks, you can make sure your AI solutions meet industry standards and what society expects.

Trust and Safety Measures

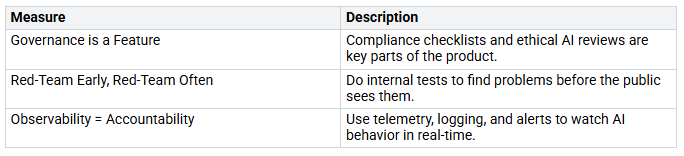

Trust and safety are central to any AI deployment. Azure AI Foundry offers several ways to prevent misuse of AI models and support responsible use.

For example, it classifies text by severity so that the application can respond proportionately. Groundedness detection checks that AI answers are supported by trusted sources. Prompt shields help block attempts to circumvent safety rules.
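The severity-based handling described above can be sketched as a small policy function. This is a minimal illustration, not Azure AI Foundry's implementation: the 0 to 7 severity scale, the category names, the threshold values, and the `decide_action` helper are all assumptions chosen for the example, and the severity scores are assumed to come from an upstream content classifier.

```python
from dataclasses import dataclass

# Hypothetical per-category thresholds on an assumed 0-7 severity scale.
# At or above "block" the response is withheld; at or above "review" it is flagged.
THRESHOLDS = {
    "hate": {"review": 2, "block": 4},
    "violence": {"review": 2, "block": 4},
    "self_harm": {"review": 2, "block": 4},
    "sexual": {"review": 2, "block": 4},
}


@dataclass(frozen=True)
class Finding:
    category: str
    severity: int  # assumed output of an upstream content classifier


def decide_action(findings: list[Finding]) -> str:
    """Return the strictest action required by any finding: allow < review < block."""
    order = {"allow": 0, "review": 1, "block": 2}
    action = "allow"
    for f in findings:
        limits = THRESHOLDS.get(f.category)
        if limits is None:
            # Unknown categories go to review rather than being silently allowed.
            candidate = "review"
        elif f.severity >= limits["block"]:
            candidate = "block"
        elif f.severity >= limits["review"]:
            candidate = "review"
        else:
            candidate = "allow"
        if order[candidate] > order[action]:
            action = candidate
    return action
```

Keeping the thresholds in a table separate from the decision logic makes it easier to tighten a single category, such as violence, without touching the code.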

Organizations that focus on responsible AI practices can build customer trust, attract skilled workers, and reduce risks. The success of Azure AI Foundry’s trust and safety measures shows in the high rate of safe evaluations for self-harm and sexual content risks. However, there is still work to do in areas like violence and hate/unfairness. Regular audits of AI applications for performance and compliance are key to maintaining trust.

By using these governance frameworks and trust measures, you can create a safer and more dependable AI environment. This not only protects your organization but also encourages responsible and ethical AI development.

Reliability and Security of AI Systems

Reliability and security are prerequisites for AI systems to succeed. You need to make sure your AI applications work well and safely. This section covers key reliability metrics and the security features that Azure AI Foundry offers.

Key Reliability Metrics

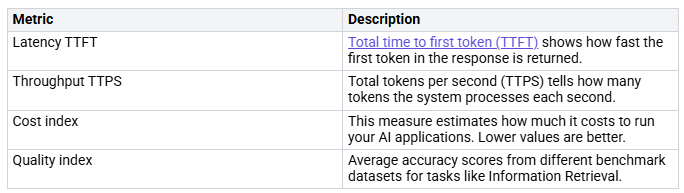

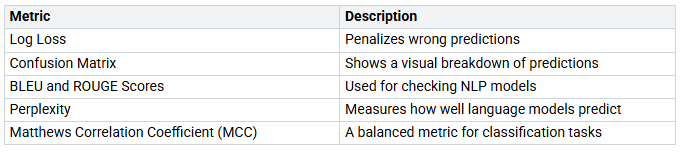

To check how reliable AI systems are, focus on some key measures. These measures help you see how well your AI models work in real life. Here are some important reliability measures to think about:

Ethical and Safety Metrics: Use the “3H” framework. It stands for Honest, Helpful, and Harmless. This framework checks if your AI acts ethically and safely.

Technical Robustness Metrics: These include precision and recall. Precision is the share of the model’s positive predictions that are correct. Recall is the share of actual positives that the model finds.

Tool Call Accuracy: This measure checks how well the agent uses tools during its tasks.

Intent Resolution: This measure checks whether the agent correctly understands what the user wants.
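Two of the measures above are easy to compute by hand, which can help when sanity-checking an evaluation report. The sketch below computes precision and recall for binary labels, plus a simplified tool call accuracy. The `tool_call_accuracy` definition (exact match of tool names, step by step) is an assumption made for illustration; it is not how any particular evaluator defines the metric.

```python
def precision_recall(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Compute precision and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Guard against division by zero when there are no positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def tool_call_accuracy(expected: list[str], actual: list[str]) -> float:
    """Fraction of expected steps where the agent called the expected tool.

    Hypothetical definition: tool names are compared position by position.
    """
    if not expected:
        return 1.0
    matches = sum(1 for e, a in zip(expected, actual) if e == a)
    return matches / len(expected)


if __name__ == "__main__":
    p, r = precision_recall([1, 0, 1, 1, 0], [1, 1, 1, 0, 0])
    print(f"precision={p:.2f} recall={r:.2f}")
    print(tool_call_accuracy(["search", "calc", "search"], ["search", "search", "search"]))
```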

You must keep an eye on AI applications after they are deployed. You should track how they perform and fix problems quickly. This helps keep user trust and makes sure your AI systems work well all the time.

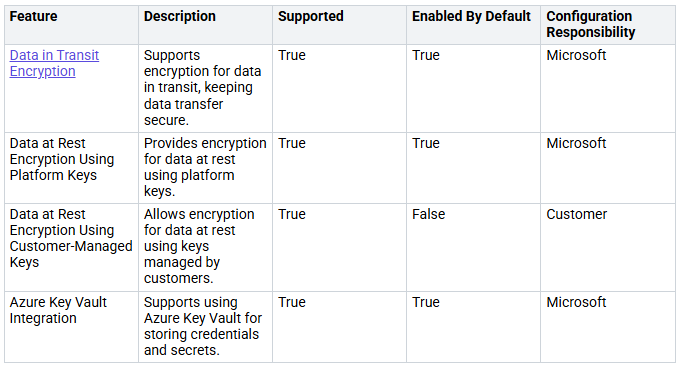

Security Features in Azure

Security is very important when using AI systems. Azure AI Foundry has strong security features to keep your applications safe from threats. Here are some special security features you should use:

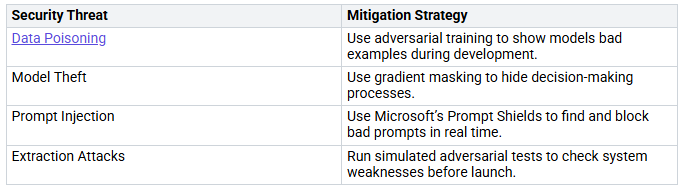

You also need to understand the common security threats that AI systems face, such as attempts to trick a model into bypassing its safety rules, and how Azure AI Foundry deals with them.

Microsoft highlights the need to use Zero Trust principles and responsible AI practices at every step of AI application development. This means checking identity and context, using limited access controls, and being ready for breaches with active monitoring.

By focusing on these reliability measures and security features, you can improve the performance and safety of your AI systems in Azure AI Foundry. This not only protects your business but also builds trust with your users.

Responsible Deployment Practices

Ethical Considerations

When you use AI systems, think about ethics first. Setting up ethical rules helps define important ideas like fairness, honesty, privacy, and safety. Here are some important guidelines to follow:

Make rules to ensure responsible use and development of AI systems.

Work with global groups to create standards and certification steps. This includes data management and how algorithms work.

Have independent groups check AI applications and enforce rules.

Share clear information about how AI systems are designed, trained, and make decisions.

Azure AI Foundry helps with these ethical rules through its governance tools. It provides resources to apply governance policies. This makes sure ethical standards are followed in all AI projects. Transparency features explain how models work, find biases, and check fairness. This improves accountability and understanding of AI systems.

Community Engagement

Getting the community involved is key for responsible AI use. You should build trust by keeping good relationships and communicating often. Here are some best practices for community involvement:

Equity: Include different voices and be aware of cultural differences in governance.

Accountability: Keep regular communication and feedback options open.

Transparency: Be honest about AI limits and project updates.

Codesign: Involve different people in the design process and ask for feedback.

Value alignment: Make sure AI goals match community values through real conversations.

Community feedback has greatly shaped responsible AI practices in Azure AI Foundry. Transparency keeps users informed about how the system works. Providing educational materials helps users understand the system’s strengths and weaknesses. Having feedback channels lets users share their thoughts and report problems, which are then looked at to improve the system.

By focusing on ethics and community involvement, you can create a responsible deployment plan that boosts the performance and safety of your AI systems in the cloud.

Observability and Monitoring in Azure

Importance of Observability

Observability is essential for managing AI systems in Azure AI Foundry. It gives you real-time information about your AI applications, so you can monitor and evaluate them and confirm that they stay transparent and compliant while in use. Here are some main benefits of observability:

Transparency: Observability lets you see how your AI applications work.

Compliance: It helps you follow company and ethical rules.

Continuous Evaluation: You keep control over your AI systems by watching them all the time.

The observability framework in Azure AI Foundry is more than just a tool for checking problems. It is key for creating trustworthy and effective generative AI applications. By using these features, you can make sure your AI systems work well and meet your goals and ethical rules.
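Continuous evaluation, as described in this section, often comes down to tracking a quality signal over a recent window and raising a flag when it degrades. The following is a minimal sketch of that idea in plain Python. The `RollingPassRate` class, the window size, and the threshold are hypothetical and are not part of Azure AI Foundry.

```python
from collections import deque


class RollingPassRate:
    """Track the pass rate of the most recent evaluations in a fixed-size window."""

    def __init__(self, window: int, min_pass_rate: float) -> None:
        # deque(maxlen=...) drops the oldest result automatically.
        self.results = deque(maxlen=window)
        self.min_pass_rate = min_pass_rate

    def record(self, passed: bool) -> bool:
        """Record one evaluation result; return True if an alert should fire."""
        self.results.append(passed)
        window_full = len(self.results) == self.results.maxlen
        rate = sum(self.results) / len(self.results)
        # Only alert once the window is full, to avoid noise on the first few samples.
        return window_full and rate < self.min_pass_rate


if __name__ == "__main__":
    monitor = RollingPassRate(window=4, min_pass_rate=0.75)
    for outcome in [True, True, False, True, False]:
        if monitor.record(outcome):
            print("pass rate below threshold, investigate")
```

In practice the boolean results would come from whatever safety or quality evaluation you run on sampled traffic.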

Monitoring Tools

Azure AI Foundry has many monitoring tools to help you watch how your AI systems perform. These tools let you find problems quickly and improve your applications. Here are some important monitoring tools you can use:

Azure AI Foundry Agent Service dashboards: These give a visual view of your AI system’s performance.

Azure Monitor platform metrics: This tool helps you track different performance measures.

Azure Monitor metrics explorer: Use this to look at and study your metrics closely.

Azure Monitor REST API for metrics: This lets you get metrics through programming.

Azure Monitor alerts: Set up alerts to warn you about any performance problems.

With these monitoring tools, you can boost the performance and safety of your AI applications. They help you stay ahead by making sure your systems are dependable and effective.
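To make the programmatic option concrete, here is a sketch that builds a request URL for the Azure Monitor metrics REST endpoint. It only constructs the URL and does not call the service. The resource ID and the metric name `ExampleMetric` are placeholders, and the `api-version` value is an assumption that you should check against the current Azure Monitor REST reference.

```python
from urllib.parse import quote, urlencode

ARM_ENDPOINT = "https://management.azure.com"


def build_metrics_url(
    resource_id: str,
    metric_names: list[str],
    timespan: str,
    interval: str = "PT5M",
    aggregation: str = "Average",
    api_version: str = "2018-01-01",  # assumed version; verify before use
) -> str:
    """Build a metrics query URL for one Azure resource."""
    params = {
        "api-version": api_version,
        "metricnames": ",".join(metric_names),
        "timespan": timespan,  # ISO 8601 "start/end"
        "interval": interval,  # ISO 8601 duration
        "aggregation": aggregation,
    }
    # Keep the slashes in the resource ID, escape anything else unusual.
    path = quote(resource_id.rstrip("/"), safe="/")
    return f"{ARM_ENDPOINT}{path}/providers/Microsoft.Insights/metrics?{urlencode(params)}"


if __name__ == "__main__":
    # Placeholder resource ID and metric name, for illustration only.
    print(
        build_metrics_url(
            "/subscriptions/0000/resourceGroups/rg/providers/Microsoft.CognitiveServices/accounts/demo",
            ["ExampleMetric"],
            "2024-01-01T00:00:00Z/2024-01-01T01:00:00Z",
        )
    )
```

Sending the request also requires an `Authorization: Bearer` token for Azure Resource Manager, which is omitted here.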

Real-World Lessons from Azure AI Foundry

Case Studies in Governance

You can learn important lessons from companies that used governance in Azure AI Foundry. Here are two great examples:

Heineken: This large brewery built the ‘Hoppy’ AI assistant on Azure AI Foundry with governance rules included from the start. Workers in 70 countries can get insights safely in their own languages, which saved a lot of work hours.

Fujitsu: This tech company made sales proposals easier with an AI agent. By connecting the agent safely to their knowledge bases and Microsoft 365 tools, they boosted productivity by 67%.

These examples show how important it is to include governance in AI projects from the start. They prove that good governance can lead to big improvements in how a company operates.

Success Stories in Reliability

Many companies have done well in reliability and deployment using Azure AI Foundry. Here are some standout examples:

Ontada: This healthcare data company cut data processing time by 75%. They turned 70% of unstructured data into useful insights, which helped them make better decisions.

H&R Block: This tax service improved efficiency by automating customer responses. This made customers happier and saved a lot of money.

These success stories show how Azure AI Foundry can improve performance and reliability. Businesses trust this platform for its ability to grow and follow rules. It has high ratings for reliability and easy deployment on sites like Gartner Peer Insights.

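To measure success in these examples, companies use different metrics, such as productivity gains (67% at Fujitsu), reductions in data processing time (75% at Ontada), and the share of unstructured data turned into useful insights (70% at Ontada).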

By learning from these real-world examples, you can see how important governance and reliability are in your own AI projects.

Future Directions for Continuous Governance

Evolving Standards

As AI technology changes, the rules for using it change too. Microsoft has earned ISO/IEC 42001:2023 certification, an international standard for AI management systems. It shows that Microsoft cares about using AI responsibly, safely, and openly. You can expect to see some important governance controls:

Careful checking of AI models to make sure they give ethical results.

Rules that follow industry-specific laws, which help lower risks.

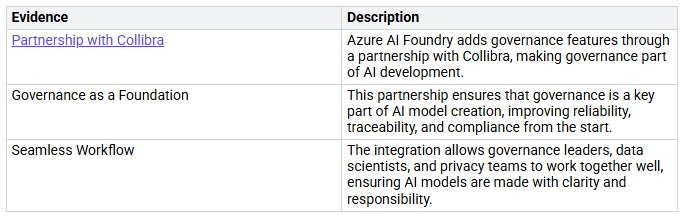

Azure AI Foundry includes these changing standards in its development process. For example, it works with Collibra to add governance features right into AI development. This partnership makes sure that governance is a key part of creating AI models. It improves reliability, traceability, and compliance from the start. The integration helps governance leaders, data scientists, and privacy teams work together well, making sure AI models are clear and accountable.

Community Involvement

Getting the community involved is essential for future governance. Working with different groups helps create AI rules and ethical guidelines. Jurisdictions such as Australia, Brazil, Canada, the EU, Israel, and the UK have successfully included stakeholders in their AI laws through discussions and participatory methods. Here are some examples of community-driven projects:

The air pollution monitoring project in Pittsburgh let the community gather air pollution data for local action.

The Smell Pittsburgh Project asked citizens to report pollution smells, using the information for air pollution research.

The RISE project allowed citizens to mark industrial smoke emissions, creating an AI model to identify pollution events.

Community-based organizations (CBOs) offer important insights into the needs and challenges of local people. Their involvement makes sure that AI solutions are culturally aware and useful. Ongoing feedback from the community helps organizations change their programs to better meet their needs.

By focusing on changing standards and community involvement, you can help create a more responsible and effective AI governance system.

Bringing governance, reliability, and responsible deployment together is essential for AI systems to succeed. Focus on transparency, accountability, and auditability, which together form the basis of responsible AI systems. Setting up AI governance groups provides ethical oversight across the whole AI process.

To keep getting better, think about these best practices:

Clearly define who is in charge and use a RACI model (Responsible, Accountable, Consulted, Informed) to assign responsibility.

Enable continuous observability to track performance metrics.

Run live red-team drills to find risks.

Keep audit-ready logs to support compliance.
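As an illustration of the RACI practice in the list above, the sketch below stores a RACI matrix as a dictionary and checks the common convention that each task has exactly one Accountable role and at least one Responsible role. The tasks, role names, and the `raci_problems` helper are hypothetical examples, not a prescribed structure.

```python
from collections import Counter

# Hypothetical RACI matrix: task -> {role: "R", "A", "C", or "I"}.
RACI = {
    "model evaluation": {"data science": "R", "governance lead": "A", "privacy team": "C"},
    "red-team drill": {"security": "R", "governance lead": "A", "data science": "I"},
    "audit log review": {"compliance": "R", "privacy team": "C"},
}


def raci_problems(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return one message per rule violation found in the matrix."""
    problems = []
    for task, roles in matrix.items():
        counts = Counter(roles.values())  # missing letters count as 0
        if counts["A"] != 1:
            problems.append(f"{task}: expected exactly one Accountable, found {counts['A']}")
        if counts["R"] < 1:
            problems.append(f"{task}: no Responsible role assigned")
    return problems


if __name__ == "__main__":
    for message in raci_problems(RACI):
        print(message)
```

Running a check like this in a review pipeline is one way to catch tasks that have no clear owner before a deployment goes ahead.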

Working with the community helps build a culture of responsibility. By using these practices, you can improve how well your AI projects work in the cloud and make sure your use of Azure AI Foundry meets ethical standards.

FAQ

What is Azure AI Foundry?

Azure AI Foundry is a platform in the cloud. It helps you create, launch, and manage AI apps. You can use its tools to make trustworthy and fair AI solutions.

How does governance work in Azure AI Foundry?

Governance in Azure AI Foundry includes rules and frameworks. These make sure your AI systems follow ethical standards. You can add trust and safety steps to improve responsibility.

Why is reliability important for AI systems?

Reliability makes sure your AI apps work well all the time. It helps keep users trusting your system. You can check reliability using measures like accuracy and response time.

What are some ethical considerations for AI deployment?

When using AI, think about fairness, openness, and privacy. You should set rules for responsible use. Working with the community can also help match your AI goals with their values.

How can I monitor my AI applications effectively?

You can use Azure’s monitoring tools to watch performance. These tools give real-time information about your AI systems. Regular checks help you find problems and boost reliability.