Opening – Hook + Setup

You’re still using the default On‑Premises Data Gateway settings. Fascinating. And you wonder why your Power BI refreshes crawl like a dial‑up modem in 1998.

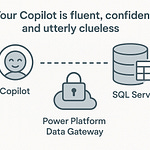

Here’s the news you apparently skipped: the Power Platform doesn’t talk directly to your on‑premises databases. It sends every query, every Power BI dataset refresh, and every automated flow through a single middleman called the Gateway. If that middleman’s tuned like a budget rental car, you get throttled performance no matter how shiny your Power Apps interface looks.

The Gateway is the bridge between the cloud and your on‑prem world. It takes cloud requests, authenticates them, encrypts the traffic, and executes queries against your local data sources. When it’s misconfigured, the entire Power Platform stack—Power BI, Power Automate, Power Apps—pays the price in latency, retry loops, and failed refresh sessions. It’s the bottleneck most administrators never optimize because, by default, Microsoft makes it “safe.” Safe means simple. Simple means slow.

By the end of this episode, you’ll know which settings are quietly strangling your throughput, why the defaults exist, and how to re‑engineer the connection flow so you can stop babysitting overnight refreshes like a nervous parent with a baby monitor.

As M365 turns into the integration glue of your data estate, the Gateway has become its weakest link—hidden, neglected, but critical. And, spoiler alert, the fix isn’t more hardware or another restart. It’s correcting two silent killers: default routing and default concurrency. One defines where your traffic travels; the other limits how much can travel simultaneously.

Keep those in mind, because they’re about to make you rethink everything you assumed about “working connections.”

Section 1 – The Misunderstood Middleman: What the Gateway Actually Does

Most people think the On‑Premises Data Gateway is a tunnel—cloud in, query out, job done. Incorrect. It’s closer to an airport customs checkpoint for data packets. Every request stepping off the Power Platform “plane” gets inspected, its credentials stamped, its luggage—your data query—scanned for permissions, then reissued with new boarding papers to reach your on‑prem SQL Server or file share. That process takes work: translation, encryption, compression, and sometimes caching. None of that is free.

Think of the cloud service—Power BI, Power Automate—as a delegate sending tasks to your local environment. The request hits the Gateway cluster first, which decides which host machine will process it. That host then manages authentication, opens a secure channel, queries the data source, and returns results back up the chain. The flow is: service → gateway cluster → gateway host → data source → return. Each arrow represents CPU cycles, memory allocations, and network hops. Treating the Gateway as a “dumb relay” is like assuming the translator at the United Nations just repeats words—no nuance, no context. In reality, it negotiates formats, encodings, and security protocols on the fly.

Microsoft gives you three flavors of this translator.

Standard Mode is the enterprise edition—the one you should be using. It supports clustering, load balancing, and shared use by multiple services.

Personal Mode is the single‑user toy version—fine for an analyst working alone but disastrous for shared environments because it ignores clustering completely.

And VNet Gateways run inside Azure Virtual Network subnets to avoid exposing on‑prem ports at all; they’re ideal when your data already lives partly in Azure. Mix these modes carelessly and you’ll create a diplomatic incident worthy of its own headline.

The Gateway also performs local caching. When consecutive refreshes hit the same data, that cache reduces roundtrips—but it means the Gateway devours memory faster than most admins expect. Add concurrency—the number of simultaneous queries—and you’ve just discovered why your CPU spikes exist. Encryption of every payload adds another layer of cost. All of this happens invisibly while users blame “Power BI slowness.”

So no, it’s not a straw. It’s a full‑blown processing engine squeezed into a small Windows service, juggling encryption keys, TLS handshakes, streaming buffers, and queued refreshes, all while the average user forgets it even exists. Picture it as the nervous bilingual courier translating for two impatient executives—Microsoft Cloud on one side, your SQL Server on the other—both yelling for instantaneous results while it flips encrypted note cards at lightning speed.

Now that you’ve finally met the real Gateway—not a tunnel, not a relay, but a translator under constant load—let’s face the uncomfortable truth: you’ve been choking it with the same factory settings Microsoft ships for minimal support calls. Time to open the hood and see just how those defaults quietly throttle your data velocity.

Section 2 – Default Settings: The Hidden Performance Killers

Here’s the blunt truth: Microsoft’s default Gateway configuration is designed for safety, not speed. It assumes your data traffic is a fragile toddler that must never stumble, even if it crawls at the pace of corporate approval workflows. Reliability is good, but when your Power BI refresh takes an hour instead of twelve minutes, you’ve paid for that stability with lethargy.

Start with concurrency. By default, the Gateway allows a pitiful number of simultaneous queries—usually one thread per data source per node. That sounds tidy until you remember each refresh triggers multiple queries. One Power BI dataset with half a dozen tables means serial execution; everything lines up politely, waiting for a turn like British commuters at a bus stop. You, meanwhile, watch dashboards updating in slow motion. Increasing concurrent queries lets the Gateway chew through multiple requests in parallel—but, of course, that eats CPU and RAM. Balance matters; starving it of resources while raising concurrency is like telling one employee to do five people’s jobs faster.
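To make the effect of a concurrency cap concrete, here is a minimal sketch that simulates how long a refresh takes when its queries run with a given limit on parallel execution. The function name, the query durations, and the greedy scheduling model are illustrative assumptions; this is not how the Gateway schedules work internally.

```python
import heapq

def refresh_duration(query_seconds, max_concurrent):
    """Simulated wall-clock time to run all queries with at most
    max_concurrent running at once (greedy, in submission order)."""
    if max_concurrent < 1:
        raise ValueError("max_concurrent must be at least 1")
    # Each heap entry is the time at which one worker slot becomes free.
    slots = [0.0] * min(max_concurrent, max(len(query_seconds), 1))
    heapq.heapify(slots)
    finish = 0.0
    for seconds in query_seconds:
        start = heapq.heappop(slots)   # earliest free slot
        end = start + seconds
        finish = max(finish, end)
        heapq.heappush(slots, end)
    return finish

# Hypothetical dataset: six tables, each query taking 120 seconds.
queries = [120] * 6
print(refresh_duration(queries, 1))  # one query at a time
print(refresh_duration(queries, 3))  # three queries at a time
```

With these made-up numbers the serial run takes 720 seconds and the three-way run 240. The model ignores the CPU and RAM cost of running queries side by side, which is exactly the cost the host has to be sized for.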

Then there’s buffer sizing, the forgotten setting that dictates how much data the Gateway can handle in memory before it spills to disk. The default assumes tiny payloads—useful when reports were a few megabytes, disastrous when they’re gigabytes of transactional detail. Once buffers overflow, the Gateway starts paging data to disk. If that disk isn’t SSD‑based, congratulations: you just introduced mechanical delays measurable in geological time. Expand the buffer within reason; let RAM handle what it’s good at—short‑term blitz processing.
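The spill behavior described here can be sketched in a few lines. This is a toy model, assuming a hypothetical `SpillBuffer` class and an arbitrary 1 KiB limit; it only illustrates the general pattern of holding data in RAM until a threshold is crossed and then continuing on disk.

```python
import io
import tempfile

class SpillBuffer:
    """Holds bytes in RAM until a limit is exceeded, then moves to a temp file."""

    def __init__(self, max_memory_bytes):
        self.max_memory_bytes = max_memory_bytes
        self._store = io.BytesIO()
        self.spilled = False

    def write(self, chunk):
        if not self.spilled and self._store.tell() + len(chunk) > self.max_memory_bytes:
            # The buffer would overflow: copy what we have to disk and continue there.
            disk = tempfile.TemporaryFile()
            disk.write(self._store.getvalue())
            self._store = disk
            self.spilled = True
        self._store.write(chunk)

    def close(self):
        self._store.close()

# Four 400-byte chunks against a 1 KiB limit: the third write triggers the spill.
buf = SpillBuffer(max_memory_bytes=1024)
for _ in range(4):
    buf.write(b"x" * 400)
print(buf.spilled)
buf.close()
```

Every write after the spill pays disk latency instead of memory latency, which is why the storage behind the spill location matters so much.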

A micro‑story to prove the point.

An analyst once bragged that his model refreshed in twelve minutes. After a routine Gateway update, refresh time ballooned to sixty minutes. Same data, same hardware. The culprit? The update reset the concurrency limit and buffer parameters to defaults. Essentially, the Gateway reverted to “training wheels” mode. A two‑line configuration fix restored it to twelve minutes. Moral: never assume updates preserve your tweaks; Microsoft’s setup wizard has a secret fetish for amnesia.

Next villain: antivirus interference. The Gateway’s constantly reading and writing encrypted temp files, logs, and streaming chunks. An over‑eager antivirus scans every read‑write operation, throttling I/O so badly you might as well be running it on floppy disks. Exclude the Gateway’s installation and data directories from real‑time scanning. You’re protecting code signed by Microsoft, not a suspicious USB stick from accounting.

Now, CPU and memory correlation. Think of CPU as the Gateway’s mouth and RAM as its lungs. Crank concurrency or enable streaming without scaling resources, and you give it the lung capacity of a hamster expected to sing an opera. Refreshes extend, throttling kicks in, and you call it “cloud latency.” Wrong diagnosis. The host’s overwhelmed. Watch the performance counters—you’ll see the saw‑tooth patterns of queued queries wheezing for resources.

Speaking of streaming: there’s a deceptive little toggle named StreamBeforeRequestCompletes. Enabled, it starts shipping rows to the cloud before an entire query finishes. On low‑latency networks, it feels magical—data begins arriving sooner, reports render faster. But stretch that same configuration across a weak VPN or high‑latency WAN, and it collapses spectacularly. Streaming multiplies open connections, fragile paths desynchronize, and half‑completed transfers trigger retry storms. Use it only inside stable, high‑bandwidth networks; disable it when reaching through wobbly tunnels.
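If you want to audit that toggle across hosts rather than open each config by hand, a small parser is enough. The sketch below reads an inline sample; the XML shape is an assumption modeled on standard .NET application settings, so verify the real file and structure in your Gateway installation folder before relying on it.

```python
import xml.etree.ElementTree as ET

# Assumed layout of the relevant config fragment (not copied from a real install).
SAMPLE_CONFIG = """
<configuration>
  <applicationSettings>
    <setting name="StreamBeforeRequestCompletes" serializeAs="String">
      <value>True</value>
    </setting>
  </applicationSettings>
</configuration>
"""

def read_setting(config_xml, name):
    """Return the text of the named setting's <value>, or None if absent."""
    root = ET.fromstring(config_xml)
    for setting in root.iter("setting"):
        if setting.get("name") == name:
            value = setting.find("value")
            if value is None or value.text is None:
                return None
            return value.text.strip()
    return None

def streaming_enabled(config_xml):
    """True only when the toggle is present and set to 'True' (case-insensitive)."""
    return (read_setting(config_xml, "StreamBeforeRequestCompletes") or "").lower() == "true"

print(streaming_enabled(SAMPLE_CONFIG))
```

Treating a missing setting as "off" is a deliberate choice here: a script that audits hosts should report the conservative answer when it cannot find the value.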

And about those tunnels—your network path itself may pretend to be healthy while sabotaging performance. Many admins route outbound Gateway traffic through corporate VPNs or centralized proxies “for security.” Admirable intention, catastrophic result. You’re adding milliseconds of detour to every query hop while Microsoft’s own global network could have carried it directly from your office edge to Azure’s backbone. The Gateway status light will still say “Healthy” because it measures reachability, not efficiency. Don’t mistake a pulse for fitness.

The pattern here should now be obvious: every “safe” default sacrifices velocity for predictability. They’re fine for demos, not for production. The moment you exceed a handful of concurrent refreshes, they become a straitjacket.

So yes, fix your thread limits, expand your buffers, exclude the antivirus, and sanity‑check that network path. Because right now, you’ve built a Formula One data engine—and you’re forcing it to idle in first gear.

Next, we’ll examine why even perfect local tuning can’t save you if your data’s taking the scenic route through the public internet instead of Microsoft’s freeway.

Section 3 – The Network Factor: Routing, Latency, and Cold Potato Myths

Your Gateway might be tuned like a race car now, but if the track it’s driving on is a dirt road, you’re still going to eat dust. Performance doesn’t stop at the server rack—it keeps traveling through your network cables, firewalls, and routers before it ever reaches Microsoft’s global backbone. And here’s where most admins commit the ultimate sin: forcing Power Platform traffic through corporate VPNs and centralized proxies as if data integrity were best achieved by torture.

Let’s start with a quick reality check. Microsoft’s cloud operates on a cold potato routing model. In simple terms, whenever your data leaves your building and reaches the nearest edge of Microsoft’s network—called a POP, or Point of Presence—Microsoft keeps that data on its private fiber backbone for as long as possible. That global network spans continents with redundant peering links and more than a hundred edge sites; once traffic enters, latency drops dramatically because the rest of its journey rides on optimized fiber instead of the open internet’s spaghetti mess. Compare that to “hot potato routing,” where traffic leaves your ISP’s network almost immediately, bouncing from one third‑party carrier to another before it ever touches Microsoft’s infrastructure. Cold potato equals less friction. Hot potato equals digital ping‑pong.

And yet, many organizations sabotage this advantage. They insist on routing Power Platform and M365 traffic back through headquarters—over VPN tunnels or web proxies—before letting it out to the internet. Why? Security theater. Everything feels “controlled,” even though you’ve just added several unnecessary network hops plus packet inspection delays from devices that were never built for high‑volume TLS traffic. Each hop adds 10, 20, maybe 30 milliseconds. Add four hops and you’ve doubled your latency before the query even sees Azure.
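The arithmetic is worth doing once with your own numbers. This is a back-of-envelope sketch: the hop count and per-hop delay come from the figures above, while the 500 sequential round trips per refresh is a made-up assumption, and real traffic overlaps round trips more than this simple product suggests.

```python
def detour_overhead_ms(extra_hops, ms_per_hop, round_trips):
    """Total extra wait added to one refresh by a routing detour,
    assuming every round trip crosses every extra hop."""
    return extra_hops * ms_per_hop * round_trips

# Four extra hops at 20 ms each.
added_per_trip = detour_overhead_ms(4, 20, 1)
# Hypothetical refresh that needs 500 sequential round trips.
added_per_refresh = detour_overhead_ms(4, 20, 500)

print(added_per_trip, "ms per round trip")
print(added_per_refresh / 1000, "s per refresh")
```

Under those assumptions the detour adds 80 ms to every round trip and 40 seconds to the refresh, before a single row has been processed.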

The truth is Microsoft’s network is more secure—and vastly faster—than your overworked firewall cluster. You paid for that performance as part of your license, then turned it off out of an outdated security habit. Stop doing that.

Now, visualize how connectivity works when done properly. You open a Power BI dashboard in your branch office. The cloud service in Azure queues a request for the Gateway. The Gateway’s outbound connection leaves your office through the local ISP line, hits the nearest Microsoft edge POP (say, Frankfurt or Dallas, depending on geography), and then rides Microsoft’s internal network right into the Azure region hosting your tenant. No detours. No VPN loops. Just: Office → Edge POP → Microsoft Backbone → Azure Region. That is the low‑latency highway your packets dream about every night.

So where does “routing preference” come in? Azure gives you options on how outbound traffic is delivered. The Microsoft Network routing preference keeps your data on that private backbone until the last possible moment—cold potato style. The Internet option does the opposite; it tosses your packets onto the open internet right away to save on bandwidth costs. You can even split the difference using combination mode, where the same resource—like a storage account—offers two endpoints, one carried through Microsoft’s backbone, the other through general internet routing. Smart teams test both and choose based on workload sensitivity. Analytical traffic? Use Microsoft Network. Bulk uploads or nightly logs? Internet option is adequate.

If you get this wrong, everything above (concurrency, buffers, hardware) becomes irrelevant. The Gateway can’t process data it hasn’t received yet. Latency is compound interest in reverse: for a connection with a fixed amount of data in flight, throughput falls in inverse proportion to round‑trip time, so doubling the latency roughly halves what each connection can carry. So even if your refresh appears “healthy,” you may be losing half your real performance to congestion that your diagnostics never show.
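One way to put a number on the cost of latency: for a single connection that keeps a fixed window of data in flight, throughput is bounded by window size divided by round-trip time. The sketch below applies that bound; the 64 KiB window and the round-trip values are assumptions chosen for illustration, not measurements of Gateway traffic.

```python
def max_throughput_mbps(window_bytes, rtt_ms):
    """Upper bound on single-connection throughput: window / round-trip time."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    bits_per_second = window_bytes * 8 / (rtt_ms / 1000)
    return bits_per_second / 1_000_000

window = 64 * 1024  # assumed 64 KiB in flight per connection
for rtt in (20, 40, 80):
    print(rtt, "ms ->", round(max_throughput_mbps(window, rtt), 1), "Mbit/s")
```

Each doubling of round-trip time halves the ceiling, which is why a detour that looks small in milliseconds can cost a large share of achievable throughput.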

Here’s where Microsoft’s thousand engineers have already done the hard work for you. Their global network interlinks over 60 Azure regions, with encryption baked in at Layer 2 and more than 190 edge POPs positioned to keep every enterprise within roughly 25 milliseconds of the network. You could never replicate that with your private MPLS or VPN backbone. When you correctly permit Power Platform traffic to egress locally and ride that backbone, you’ll cut end‑to‑end latency by up to 50 percent. Yes, half. The paradox is that “less control” over routing actually produces more security and predictability because you’re inside a network engineered for failover and telemetry rather than a generic corporate pipe.

Think of it like building a bridge. You could let your data swim through the public internet’s unpredictable currents—cheap, yes, but slow and occasionally shark‑infested—or you could let Microsoft’s freeway carry it over the water on reinforced concrete. The freeway already exists. Your only job is to drive on it instead of taking the raft.

Of course, fixing the path only solves half the problem. A perfectly paved road doesn’t matter if your driver—meaning the Gateway host itself—is still underpowered, coughing smoke, and trying to haul ten tons of analytical data with one gigabyte of RAM. So next, let’s build a real vehicle worthy of that freeway.

Section 4 – Hardware and Hosting: Build a Real Gateway Host

Let’s start by dismantling a myth: the On‑Premises Data Gateway is not some elastic Azure service that auto‑scales just because you upgrade your license. It’s a Windows service chained to the physical reality of the machine it’s running on. Give it lightweight hardware, and it will perform like one. Give it compute muscle, and suddenly your refreshes stop wheezing.

Baseline specs? Microsoft’s documentation recommends an eight‑core CPU and eight GB of RAM. Treat those numbers as a floor that keeps support calls civil, not as a target. Real‑world production? You want at least sixteen gigabytes of RAM and as many dedicated physical cores as your budget permits; eight cores should be your starting point, not the finish line. Remember, every concurrent query consumes a thread and a share of memory; multiple refreshes compound that load. Starve it of resources, and the scheduler queues everything like a slow cafeteria line. Feed it, and you unlock genuine parallelism.

Storage matters too. The Gateway caches data, logs, and temp files incessantly. If those land on a mechanical disk, you’ve just equipped a race car with bicycle tires. Move logs and cache to an SSD or NVMe drive; latency from disk operations drops from milliseconds to microseconds. The effect shows up instantly in refresh duration graphs. I’ve seen hour‑long refreshes shrink to twenty minutes because someone swapped the hard drive.

Next: virtual machines versus physical hosts. VMs work—but only when they’re treated like reserved citizens, not tenants in an overcrowded apartment. Dedicate CPU sockets, lock memory allocations, and disable overcommit. Shared infrastructure steals cycles the Gateway needs for encryption and query parallelism. Cloud admins often mistakenly host the Gateway on a general‑purpose utility VM. Then they wonder why performance fluctuates like mood lighting. If you insist on virtualization, use fixed resources. If not, go physical and spare yourself the throttling.

Now, if one machine runs well, several run better. That brings us to clusters. A Gateway cluster is two or more host machines registered under the same Gateway name. The Power Platform automatically load‑balances across them, distributing queries based on availability. This isn’t high availability through magic—each node still needs the same version and configuration—but it’s simple redundancy that doubles or triples throughput while insulating against patch‑night disasters. Think of clustering as giving the Gateway a relay team instead of one exhausted runner.

To know whether your host is sufficient, stop guessing and start monitoring. Microsoft ships a Gateway Performance template for Power BI—it visualizes CPU usage, memory pressure, query duration, and concurrent connections. Use it. If you see CPU pinned above 80 percent or memory saturating as refreshes start, you’ve confirmed an under‑powered host. Complement that with Windows Performance Monitor counters: Processor % Processor Time, Memory Available MBytes, and the GatewayService’s private bytes. Watch for patterns; if metrics climb predictably during scheduled refreshes, you’ve maxed capacity.
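Once you export those counters, the check itself is simple. Here is a minimal sketch, assuming you already have lists of samples for the two counters; the 80 percent CPU limit comes from the rule of thumb above, while the 1024 MB memory floor and the function name are assumptions to adjust for your host.

```python
from statistics import mean

def host_is_saturated(cpu_samples, available_mb_samples,
                      cpu_limit=80.0, min_available_mb=1024.0):
    """Flag a host whose average CPU exceeds cpu_limit or whose
    lowest available memory falls below min_available_mb."""
    if not cpu_samples or not available_mb_samples:
        raise ValueError("need at least one sample of each counter")
    return mean(cpu_samples) > cpu_limit or min(available_mb_samples) < min_available_mb

# Hypothetical samples taken during a scheduled refresh window.
cpu = [62, 88, 93, 91, 85]               # % Processor Time
free_mb = [4096, 2100, 1900, 1500, 3000]  # Available MBytes
print(host_is_saturated(cpu, free_mb))
```

Averaging CPU while taking the minimum of available memory is a deliberate asymmetry: short CPU spikes are normal, but a single dip into memory starvation is enough to push buffers to disk.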

Also enable enhanced logging. Newer builds include per‑query timestamps so you can trace slow segments of the refresh pipeline. You’ll often find that apparent “network latency” is actually the host spilling buffers to disk—clear evidence of inadequate RAM. Logs don’t lie; they just require someone competent enough to read them.

One final reminder: hardware tuning and monitoring are not optional chores—they are infrastructure hygiene. You patch Windows, you update firmware, you watch event logs. The Gateway deserves the same discipline. Ignore it and you’ll spend nights performing ritual service restarts and blaming invisible ghosts.

Because even flawless network routing can’t redeem a Gateway host built on undersized hardware with forgotten logs, outdated drivers, and shared resources. The cloud’s backbone may be a superhighway—but if your vehicle runs on bald tires and missing spark plugs, you’re stuck in the breakdown lane.

Next up: why staying fast requires staying vigilant—version control, maintenance schedules, and automation that keeps your Gateway healthy before it begs for resuscitation.

Section 5 – Proactive Optimization and Maintenance

Let’s talk about the part admins treat like flossing—maintenance. They know they should, but somehow forget until everything starts rotting. The On‑Premises Data Gateway isn’t a “set‑and‑forget” component; it’s living software, and like any living thing it decays when neglected.

First rule: never auto‑apply updates. I know, shocking advice from someone defending Microsoft technology, but hear me out. Each release may contain performance improvements—or new, undiscovered side effects. Automatic updates replace stable binaries and occasionally reset critical configuration files to that hated “safe default” mode. Stage new versions in a sandbox first. Spin up a secondary Gateway instance, clone your configuration, then schedule a test refresh cycle. If throughput and log consistency hold steady, promote that version to production. If not, roll back gracefully while the rest of the world panics on the community forum.

That brings us to rollback hygiene. Keep local backups of your configuration files: GatewayClusterSettings.json and GatewayDataSource.xml. Copy them before each upgrade. The Gateway doesn’t ask politely before overwriting them. Version‑pinning the installer—the exact MSI build number—is your insurance policy. Microsoft’s download archive lists previous builds for a reason. Think of it like keeping old driver versions; sometimes stability is worth a week’s delay on flashy new features.
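A pre-upgrade backup is easy to script. The sketch below copies whatever configuration files you name into a timestamped folder; the function name and the version label are hypothetical, and the file names should be checked against what your installation actually contains.

```python
import shutil
from datetime import datetime
from pathlib import Path

def backup_config(files, backup_root, label):
    """Copy each existing file into backup_root/<label>_<timestamp>/.
    Returns the target folder and the list of file names copied."""
    stamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    target = Path(backup_root) / f"{label}_{stamp}"
    target.mkdir(parents=True, exist_ok=False)
    copied = []
    for src in map(Path, files):
        if src.is_file():              # skip names that do not exist on this host
            shutil.copy2(src, target / src.name)
            copied.append(src.name)
    return target, copied
```

Calling it with the installed build number as `label` ties each backup to the installer version you pinned, so a rollback restores a matching pair of binaries and configuration.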

Now, onto continuous monitoring. You wouldn’t drive a performance car without a dashboard; stop running your gateway blind. Every week, open the Power BI Gateway Performance report and correlate CPU, memory, and query‑duration spikes with scheduled refresh jobs. Patterns reveal inefficiencies. Perhaps your Monday‑morning sales refresh collides with finance’s dataflow run. Adjust the Power Automate triggers, spread the load. You’ll witness the bell curve flatten and the cries of “Power BI is slow” mysteriously vanish.
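Spotting collisions does not need a dashboard; a list of schedule windows is enough. This is a minimal sketch with hypothetical job names and times, expressed as minutes since midnight.

```python
from itertools import combinations

def overlapping_jobs(jobs):
    """jobs maps name -> (start_minute, end_minute) within one day.
    Returns pairs of job names whose windows overlap."""
    clashes = []
    for (a, (a_start, a_end)), (b, (b_start, b_end)) in combinations(sorted(jobs.items()), 2):
        if a_start < b_end and b_start < a_end:
            clashes.append((a, b))
    return clashes

schedule = {
    "sales_refresh": (6 * 60, 6 * 60 + 45),     # 06:00-06:45
    "finance_dataflow": (6 * 60 + 30, 7 * 60),  # 06:30-07:00
    "hr_refresh": (8 * 60, 8 * 60 + 15),        # 08:00-08:15
}
print(overlapping_jobs(schedule))
```

Here the sales refresh and the finance dataflow share fifteen minutes, so they are reported as a clash; moving either one by half an hour clears the list.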

Don’t just stare at charts; act on them. Automate health checks with PowerShell. The Get-DataGatewayClusterStatus cmdlet in Microsoft’s DataGateway module reports cluster status and gateway version. Wrap it, together with your performance counters, in a scheduled script that emails a summary before business hours: CPU average, queued requests, and last refresh status. If metrics exceed thresholds, restart the gateway service proactively. Yes, I said restart before users complain. A preventive restart flushes stale network handles and clears temporary file bloat. A ten‑second interruption beats a two‑hour outage.
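The reporting half of that script can be sketched independently of how the metrics are collected. The example below builds the summary email and decides whether a restart is recommended; the metric names, thresholds, and addresses are all hypothetical, and sending the message or restarting the service is left to whatever tooling you already use.

```python
from email.message import EmailMessage

def build_health_report(metrics, thresholds, sender, recipient):
    """Return (message, breached), where breached lists the metrics
    whose value is above the configured threshold."""
    breached = sorted(k for k, limit in thresholds.items() if metrics.get(k, 0) > limit)
    lines = [f"{name}: {value}" for name, value in sorted(metrics.items())]
    lines.append("Action: restart recommended" if breached else "Action: none")
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "Gateway health: " + ("ATTENTION" if breached else "OK")
    msg.set_content("\n".join(lines))
    return msg, breached

# Hypothetical overnight numbers.
metrics = {"cpu_avg_percent": 91, "queued_requests": 3, "last_refresh": "Completed"}
thresholds = {"cpu_avg_percent": 80, "queued_requests": 10}
message, breached = build_health_report(
    metrics, thresholds, "gateway@example.com", "admins@example.com")
print(message["Subject"], breached)
```

Keeping the decision in a pure function makes the thresholds easy to test and to change without touching the scheduling or mail delivery code.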

Let’s discuss token management. In older builds, long‑running refreshes occasionally failed because authentication tokens expired mid‑query. Recent versions handle token renewal asynchronously—another reason to upgrade deliberately, not impulsively. Re‑registering your data sources after major updates ensures the token handshake process uses the latest schema. Translation: fewer silent 403 errors at 3 a.m.

The next discipline is environmental awareness. The Gateway host sits at the intersection of firewall rules, OS patching, and enterprise security software. Each of those layers can introduce latency or incompatibility. Maintain a documented “baseline configuration”: Windows version, .NET framework build, antivirus exclusions list, and network ports open. When something slows, compare the current state to that baseline. Ninety percent of performance losses trace back to a quietly re‑enabled security setting or a background agent that matured into a resource hog overnight.
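Comparing against a baseline is a dictionary diff. The sketch below assumes you record the baseline as simple key and value pairs; every key and value shown is a made-up example, not a recommended setting.

```python
def baseline_drift(baseline, current):
    """Return {key: (expected, actual)} for every setting that differs,
    including keys present on only one side."""
    drift = {}
    for key in sorted(set(baseline) | set(current)):
        expected, actual = baseline.get(key), current.get(key)
        if expected != actual:
            drift[key] = (expected, actual)
    return drift

# Hypothetical baseline and current state of one host.
baseline = {
    "windows_version": "Server 2022",
    "dotnet_build": "4.8",
    "av_exclusions": ("gateway install dir", "gateway data dir"),
    "open_ports": (443,),
}
current = dict(baseline, av_exclusions=())  # exclusions silently removed
print(baseline_drift(baseline, current))
```

An empty result means the host still matches its documented state; anything else is the first place to look when a refresh slows down.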

Human behavior, however, is the biggest bottleneck. Too many admins treat the gateway as an afterthought until executives complain about late dashboards. Reverse that order. Schedule quarterly maintenance windows specifically for gateway tuning. Review the logs, validate capacity, test failover between clustered nodes, and—critically—document what you changed. The next administrator should be able to follow your breadcrumbs rather than reinvent the disaster.

For teams obsessed with automation, integrate the gateway lifecycle into DevOps. Store configuration files in version control, script deployment with PowerShell or Desired State Configuration, and you’ll transform a fragile Windows service into code‑defined infrastructure. The benefit isn’t geek prestige—it’s repeatability. When a machine fails, you rebuild it identically rather than “approximately.”

And if you need motivation, quantify the outcome. Faster refresh cycles mean executives base decisions on data that’s hours old, not yesterday’s export. A one‑hour gain in refresh time translates directly into more responsive Power Apps and fewer retried Power Automate flows. Multiply that by hundreds of daily users and you realize the business impact dwarfs the cost of a decently specced host.

So yes, the Gateway isn’t broken—you simply never treated it like infrastructure. It deserves patch cycles, performance audits, and automation scripts, not post‑failure therapy sessions. Maintain it, and you’ll stop chasing ghosts in the middle of the night. Ignore it, and those ghosts will invoice you in downtime.

Conclusion – The Takeaway

Let’s distill this into one uncomfortable truth: the On‑Premises Data Gateway is infrastructure, not middleware. It’s the plumbing between your on‑premises data and Microsoft’s cloud, and plumbing obeys physics. Defaults are “safe,” but safety trades away performance.

You wouldn’t run SQL Server on its out‑of‑box power plan and expect lightning results. Yet people install a Gateway, click Next five times, and wonder why their refreshes crawl. The answer hasn’t changed after two decades of computing: tune it, monitor it, scale it.

The playbook is simple.

Step one: stop assuming defaults are sacred—raise concurrency and buffer limits within the capacity of your host.

Step two: let Microsoft’s network handle the routing; disable that corporate VPN detour.

Step three: build a gateway host worthy of its workload—SSD storage, 16 GB RAM minimum, multiple cores, cluster redundancy.

Step four: treat updates and monitoring as continuous operations, not emergency measures.

Do those consistently and your “slow Power BI dataset” will transform into something almost unrecognizable—efficient, predictable, maybe even boring. Which is the highest compliment infrastructure can earn.

Your Power BI isn’t slow. Your negligence is.

So before closing this video, test your latency to the nearest Microsoft edge POP, open your Gateway Performance report, and schedule that PowerShell health check. Then watch the next episode on routing optimization across the M365 ecosystem—because the true hybrid data backbone isn’t built by luck; it’s engineered.
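For the latency test, timing a TCP handshake is a reasonable first approximation of round-trip time. The sketch below is a minimal version, assuming you supply the host yourself; it does not discover the nearest edge POP, and the function names are hypothetical.

```python
import socket
import time

def tcp_connect_ms(host, port=443, timeout=3.0):
    """Time one TCP handshake to host:port, in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # the connection is closed as soon as the handshake completes
    return (time.perf_counter() - start) * 1000

def median_connect_ms(host, port=443, attempts=5):
    """Median of several handshake timings, to smooth out one-off spikes."""
    samples = sorted(tcp_connect_ms(host, port) for _ in range(attempts))
    return samples[len(samples) // 2]
```

Run `median_connect_ms` once from the Gateway host over the direct path and once over the VPN or proxy path, against the same endpoint your tenant uses, and compare the two medians. The difference is the detour you are paying for on every round trip.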

Entropy wins when you do nothing. Subscribing fixes that. Press Follow, enable notifications, and let structured knowledge arrive on schedule. Maintain your curiosity the same way you maintain your Gateway—regularly, intentionally, and before it breaks.