Introduction: The Cloud Migration Warning

Stop. Put down your migration roadmap and close the Azure portal—because you’re about to make a mistake that will haunt your AI plans for the next decade. You’re migrating to the cloud as if it’s 2015, but expecting it to deliver 2025’s AI miracles. That is not strategy. That’s nostalgia dressed as progress.

Here’s the uncomfortable truth: most organizations brag about being “cloud first,” but few are even AI capable. They moved their servers, their databases, and their applications to Azure, AWS, or Google Cloud—and called that transformation. The problem? AI doesn’t care that your virtual machines are in someone else’s data center. It cares about your data structure, your security posture, and your governance model.

Think of it like moving boxes from your old house to a shiny, modern condo. If you dump everything—broken furniture, expired canned beans, old tax receipts—into the new space, you didn’t transform; you just changed the location of your mess. That’s what most cloud migrations look like right now: operationally expensive, beautifully marketed piles of technical debt.

And the cruel irony? Those same migrations were sold as “future-proof.” Spoiler: the future-proofing didn’t include AI. Everything from your access controls to your compliance framework was built for static workloads and predictable data. AI needs fluid, governed, interconnected, and traceable data pipelines.

So, if you’re mid-migration or just celebrated your “lift-and-shift” anniversary—congratulations, you now own an architecture that’s cloud-ready and AI-hostile. But you can fix it, if you understand where the trap begins.

The Cloud Migration Trap: Why Lift-and-Shift Fails AI

The trap is psychological and architectural at once. You believe that “cloud equals modern.” It doesn’t. Moving workloads without modernizing your data, governance, and security means you’ve rebuilt the Titanic—beautifully stable until it hits an AI-shaped iceberg.

Lift-and-shift was designed for one purpose: speed. It minimized disruption by moving virtual machines to virtualized environments. That’s fine when your priority is shutting down datacenters to save on cooling bills. But AI isn’t interested in your HVAC efficiency; it depends on clean, structured, and accessible data governed by clear policies.

When you lift and shift, you preserve every bad habit your infrastructure ever had. Old directory structures, fragmented identity management, inconsistent tagging, legacy dependencies—all migrate with you. Then you add AI and expect it to reason across data silos that your own admins can barely navigate. The model can’t see the connections because your systems never documented them.

Security? Worse. Traditional migrations often replicate permissions and policies as-is. It feels safe because nothing breaks on day one. But those inherited permissions become a nightmare under AI workloads. Copilot and GPT-based systems access data contextually, not transactionally. So, one badly scoped Azure role or shared key can expose confidential training material faster than any human breach. You wanted scalability; what you actually deployed was massive-scale risk.

And governance—let’s just say it didn’t migrate with you. Lift-and-shift assumes human oversight remains constant, but AI multiplies the rate of data creation, consumption, and recombination. Your old compliance scripts can’t keep up. They weren’t written to trace how a language model inferred customer patterns or which pipeline fed it sensitive tokens. Without unified governance, every AI output is potentially a compliance incident.

Now enter cost. Ironically, lift-and-shift is advertised as cheap. But when AI projects arrive, your cloud bills explode. Why? Because every unoptimized workload and fragmented data store adds friction to AI orchestration. Instead of a unified data fabric, you’re paying for a scattered archive, and you can’t scale intelligence on clutter.

Microsoft’s own AI readiness assessments show that AI ROI depends on modern governance, consistent data integration, and security telemetry—not just compute horsepower. Which means your AI readiness isn’t decided by your GPU quota; it’s decided by whether your migration aligned with Foundry principles: unified resources, shared responsibility, and managed identity by design.

So yes, lift-and-shift gets you to the cloud fast. But it also locks you out of the AI economy unless you rebuild the layers beneath—your data, your permissions, your compliance frameworks. Without that foundation, “AI readiness” remains a PowerPoint fantasy.

You migrated your servers; now you need to migrate your mindset. Otherwise, your next-gen cloud might as well be a digital warehouse: full of stuff, beautifully maintained, and utterly unusable for the future you claim to be preparing for.

Pillar 1: Data Readiness – The Foundation of AI

Let’s start where every AI initiative pretends it already started: with data. Because the hard truth is that your data isn’t ready for AI, and deep down you already know it.

Organizations keep talking about “AI transformation” as if it’s something they can enable with a new license key. Yet behind the scenes, most data still exists in silos guarded by compliance scripts written before anyone knew what a large language model was. AI projects don’t fail because models are bad—they fail because the data feeding them is inconsistent, inaccessible, and undocumented.

Think of your organization’s data like plumbing. For years, you’ve been patching new pipes onto old ones—marketing CRM here, HR spreadsheets there, a slightly haunted SharePoint site that hasn’t been cleaned since 2014. It technically works. Water flows. But AI doesn’t want “technically works.” It demands pressure-tested pipelines with filters, valves, and consistent flow. The moment you connect Copilot, those leaks become floods, and those rusted pipes start contaminating every prediction.

So, what does “data readiness” actually mean? Three things: structure, lineage, and governance. Structure means data that’s normalized and retrievable by systems that aren’t ancient. Lineage means you know exactly where that data came from, how it was transformed, and what policies apply to it. Governance means there’s a consistent way to authorize, audit, and restrict usage—automatically. Anything short of that, and your AI outputs will be statistical hallucinations disguised as insight.

Microsoft Fabric exists for that reason: it’s Microsoft’s attempt to replace a tangle of disparate analytics tools with a unified data substrate. But here’s the catch: Fabric can’t fix logic it doesn’t understand. If your migration merely copied old warehouses and dumped them into Azure Data Lake Storage Gen2, then Fabric is simply cataloguing chaos. The act of migration did nothing to align your schemas, remove duplicates, or standardize metadata tagging.

You can’t say you’re building AI capability while tolerating inconsistent tagging across resource groups or allowing shadow data stores to exist “temporarily” for three fiscal years. AI readiness begins with a ruthless data inventory—identifying redundant assets, consolidating versions, and applying governance templates that map to your compliance standards.

Look at the pattern from Microsoft’s own AI readiness research: companies that succeed with AI define data classification policies before training models. Those that fail treat classification as paperwork after deployment. It’s like running an experiment without recording which chemicals you used—you might get fireworks, but you’ll never reproduce them safely.

Here’s where it gets darker. Inconsistent data governance is not just inefficient; it’s legally volatile. LLMs remember patterns—if confidential client information accidentally enters a training corpus, you have a compliance breach with a neural memory. There’s no “undo” for that. Azure’s multi-layered security stack, from Defender for Cloud to Key Vault, exists to enforce confidentiality boundaries, but only if you actually use it. Copying your old security groups into the cloud without revalidating access chains means you’re inviting the model to peek into places no human auditor could justify.

And the final insult? Storage is cheap, but ignorance isn’t. Every unmanaged dataset increases the attack surface. Every unclassified file adds uncertainty to your AI compliance reports. You can deploy as many Copilots as you like; if each department’s data policy contradicts the next, your AI is effectively bilingual in nonsense.

The simplest test: if you can’t trace the origin, transformation, and access control of your top ten datasets in under an hour, you are not AI ready, no matter how glossy your Azure dashboard looks.
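That test can be automated in a few lines. The following is a minimal sketch, assuming a hypothetical catalog export where each dataset carries an origin, a list of transformations, and a list of allowed roles. The `DatasetRecord` fields and the sample datasets are invented for illustration and do not correspond to any Purview or Fabric schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DatasetRecord:
    """Hypothetical catalog entry; field names are illustrative only."""
    name: str
    origin: str = ""                                           # source system
    transformations: list[str] = field(default_factory=list)  # ordered steps
    allowed_roles: list[str] = field(default_factory=list)    # who may read it


def missing_traceability(record: DatasetRecord) -> list[str]:
    """Return the traceability questions this record cannot answer."""
    gaps = []
    if not record.origin:
        gaps.append("origin")
    if not record.transformations:
        gaps.append("transformation")
    if not record.allowed_roles:
        gaps.append("access control")
    return gaps


def readiness_report(records: list[DatasetRecord]) -> dict[str, list[str]]:
    """Map each dataset that fails the test to its list of gaps."""
    report = {}
    for record in records:
        gaps = missing_traceability(record)
        if gaps:
            report[record.name] = gaps
    return report


# Invented sample data standing in for your top datasets.
top_datasets = [
    DatasetRecord("crm_contacts", "CRM export", ["dedupe", "mask_email"], ["sales-analyst"]),
    DatasetRecord("hr_spreadsheets", allowed_roles=["everyone"]),
]
print(readiness_report(top_datasets))
```

An empty report is the pass condition. Anything else is a list of homework, sorted by dataset.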

True data readiness means adopting continuous governance—rules that travel with the data, enforced through Fabric and Purview integration. Every time a user moves or modifies data, those policies must follow automatically. That’s not a luxury; it’s the baseline for AI ethics, privacy, and reproducibility.

In the AI era, data isn’t just an asset—it’s the bloodstream of the entire operation. Migration moved the body; now you need to clean the blood. Because if your data has impurities, your AI decisions have consequences—at scale, instantly, and irreversibly.

Pillar 2: Infrastructure and MLOps Maturity

Now, even if your data were pristine, you’d still fail without the muscle to process it intelligently. That’s where infrastructure and MLOps come in—the skeleton and nervous system of AI readiness.

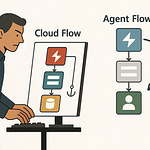

Lifting workloads to virtual machines is the toddler phase of cloud evolution. Mature organizations don’t migrate applications; they migrate control. Specifically, they transition from static environments to orchestrated, policy-driven platforms that understand context, dependencies, and performance in real time. Azure AI Foundry embodies that shift: a unified environment where compute, data, and governance live together instead of carrying on a long-distance relationship over APIs. But Foundry doesn’t forgive poor infrastructure hygiene.

Ask yourself: how many of your AI experiments still depend on manual deployment scripts, custom Dockerfiles, or human-triggered approvals? That’s charming until you want scalability. Modern MLOps maturity means reproducible pipelines that define metrics, datasets, and version control as code. No more “Oops, we lost the model” moments because Jenkins ate the artifact. Foundry and Azure Machine Learning now support full lifecycle tracking, but only if you use them properly.

The key word being “properly.” Most teams treat MLOps as an add-on, not a cultural discipline. They automate training runs but still rely on manual compliance checks. They track accuracy but ignore model lineage. AI readiness lives or dies on traceability. You need to know which dataset trained which model under which conditions, and you need that proof automatically generated, not via an intern’s spreadsheet.
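What “automatically generated proof” might look like, in miniature: a sketch that fingerprints the exact training data and stores it next to the model version and hyperparameters. The function names, the model name, and the record layout are assumptions made for this example; a platform such as Azure Machine Learning keeps its own lineage metadata in its own format.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """SHA-256 digest that identifies an exact dataset snapshot."""
    return hashlib.sha256(data).hexdigest()


def lineage_record(model_name, model_version, dataset_bytes, params):
    """Build an audit record linking a model version to its training inputs."""
    return {
        "model": model_name,
        "version": model_version,
        "dataset_sha256": fingerprint(dataset_bytes),
        "params": dict(sorted(params.items())),  # stable key order for diffs
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


# Invented example: a tiny CSV snapshot and two hyperparameters.
snapshot = b"id,churned\n1,0\n2,1\n"
record = lineage_record("churn-classifier", "1.4.0", snapshot, {"lr": 0.01, "epochs": 5})
print(json.dumps(record, indent=2))
```

Because the digest changes whenever a single byte of the snapshot changes, two models claiming the same training data can be checked against each other without trusting anyone’s spreadsheet.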

Infrastructure maturity also means understanding cost versus capability. Everyone loves GPUs—until the bill arrives. The trick isn’t throwing more compute at AI; it’s coordinating intelligent resource scaling with security and governance baked in. Azure Arc and Defender for Cloud allow exactly that—hybrid observability with centralized control. But immature migrations treat Arc like a side quest, not a control plane.

Let’s differentiate: infrastructure is hardware allocation; MLOps is behavioral governance of that hardware. One without the other is like giving a toddler car keys. You may have the power, but you lack workflow discipline. Mature ecosystems treat every deployment like a compliance artifact—auditable, reversible, explainable.

Remember the Foundry prerequisites: regional alignment, unified identity, and endpoint authentication. If your team can’t confidently state which region each dataset and model resides in, congratulations—you’ve built an AI compliance time bomb. And if you’re still using connection strings older than your interns, you’ve already fallen behind the May 2025 migration cutoff.
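The regional question is easy to check mechanically once you have an inventory. Here is a rough sketch, assuming a hand-built mapping of datasets to regions and of models to the datasets they read. The model and dataset names are invented, and nothing here calls an Azure API.

```python
from __future__ import annotations

UNKNOWN = "unknown"


def region_mismatches(models: dict[str, dict], dataset_regions: dict[str, str]):
    """List (model, dataset, dataset_region) wherever the regions disagree."""
    problems = []
    for model_name, info in models.items():
        for dataset in info["datasets"]:
            dataset_region = dataset_regions.get(dataset, UNKNOWN)
            if dataset_region != info["region"]:
                problems.append((model_name, dataset, dataset_region))
    return problems


# Invented inventory; "call_notes" is deliberately missing a region.
dataset_regions = {"transactions": "westeurope", "client_files": "eastus"}
models = {
    "risk-scorer": {
        "region": "westeurope",
        "datasets": ["transactions", "client_files", "call_notes"],
    },
}

for model, dataset, region in region_mismatches(models, dataset_regions):
    print(f"{model}: dataset {dataset} is in {region}")
```

A dataset with an unknown region is reported as a mismatch on purpose: “we are not sure” is the time bomb the paragraph above describes.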

On-premises nostalgia is the enemy here. The future runs on infrastructure that treats compute as ephemeral: containers spun up, used, and terminated automatically with policy enforcement. Human-configured machines are liabilities; coded deployments are guarantees. That’s the delta between experimental AI and production AI.

And this is where infrastructure meets psychology again: you can’t secure what you don’t orchestrate. Governance frameworks like NIST’s AI RMF and ISO 42001 assume your infrastructure tracks model provenance and risk classification by default. If your system architecture can’t produce that metadata on demand, no audit will save you.

The irony? Cloud was sold as freedom. True AI readiness turns it into accountability. A mature MLOps setup doesn’t just train faster—it testifies, logs, and justifies every result. It becomes your alibi when regulators or executives ask, “Where did this decision come from?”

So yes, infrastructure and MLOps are not glamorous. They’re the scaffolding you build before you hang the AI art on the wall. But unlike art, this needs precision. Without orchestrated infrastructure, your AI strategy remains theoretical. With it, every model, every experiment, and every pipeline becomes traceable, secure, and scalable.

That’s what makes you not just cloud migrated—but genuinely, provably AI ready.

Pillar 3: The Talent and Governance Gap

Now let’s discuss the most dangerous illusion of modernization—the belief that tooling compensates for competence. It doesn’t. You can subscribe to every Azure service known to humankind and still fail because your people and governance processes are calibrated for a pre‑AI century.

Here’s the paradox: everyone wants AI, but no one wants to retrain staff to manage it responsibly. Migration programs often focus on infrastructure diagrams, not organizational diagrams. Yet it’s the humans, not the hardware, who enforce or violate governance boundaries. If your cloud team doesn’t understand data classification, identity inheritance, or model‑level security, you’ve simply automated confusion at scale.

Think of governance as choreography. Before AI, you could improvise—a developer could spin up a database, extract some tables, and no one noticed. In an AI environment, every undocumented decision becomes a policy violation in waiting. Who trains the model? Who validates the dataset lineage? Who approves the prompt templates feeding Copilot? If the answer to all three is “the same guy who wrote the PowerShell script,” then congratulations—you’ve institutionalized risk.

The talent gap isn’t just missing data scientists; it’s missing governance technologists—people who understand how AI interacts with policy frameworks like ISO 42001 or NIST’s AI RMF. Right now, most enterprises treat those as PowerPoint disclaimers, not daily practice. The result? Compliance theater. They write “Responsible AI” guidelines, then hand model tuning to interns because “the Azure portal makes it easy.” Spoiler: the portal doesn’t make ethics easy; it just masks how complex it truly is.

Microsoft’s research into AI readiness lists “AI governance and security” as a principal pillar, not because it’s fashionable, but because it’s the institutional spine. Yet organizations keep confusing security with secrecy. Locking data down isn’t governance. Governance is structured transparency: knowing who touched what, when, and whether they had the right to. If your audit trail can’t prove that without forensic excavation, your governance exists only on paper.

So how do you close the gap? First, map talent to accountability, not titles. The database admin becomes a data custodian. The network engineer becomes an identity steward. The compliance officer evolves into an AI risk auditor who understands model provenance, not just password policy. Microsoft Purview, Fabric, and Foundry can surface this metadata automatically, but someone must interpret it, challenge anomalies, and refine policy templates continuously.

Second, dissolve the imaginary wall between IT and legal. AI governance isn’t a compliance afterthought; it’s an engineering parameter. When data residency laws change, your pipelines must adapt in code, not memos. Organizations that succeed at AI readiness build governance as code—policy enforcement baked into CI/CD pipelines, triggering alerts when a dataset crosses classification boundaries. That demands staff who can read YAML and regulation interchangeably.
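To make “governance as code” concrete, here is one possible shape for a pipeline gate that blocks a dataset from flowing into a destination cleared for a lower classification. The classification ladder, the flow format, and every name below are hypothetical; treat it as a sketch of the idea, not as how any particular CI system or Azure service does it.

```python
from __future__ import annotations

# Hypothetical classification ladder; replace with your own policy labels.
LEVELS = {"Public": 0, "Internal": 1, "Confidential": 2, "Restricted": 3}


def boundary_violations(flows: list[dict]) -> list[str]:
    """Flag every flow whose destination is cleared below the data's label."""
    violations = []
    for flow in flows:
        data_level = LEVELS[flow["classification"]]
        sink_level = LEVELS[flow["sink_clearance"]]
        if data_level > sink_level:
            violations.append(
                f"{flow['dataset']} ({flow['classification']}) -> "
                f"{flow['sink']} (cleared for {flow['sink_clearance']})"
            )
    return violations


# Flows a pipeline definition might declare; all names are invented.
declared_flows = [
    {"dataset": "web_clicks", "classification": "Internal",
     "sink": "analytics_lake", "sink_clearance": "Confidential"},
    {"dataset": "client_accounts", "classification": "Restricted",
     "sink": "copilot_index", "sink_clearance": "Internal"},
]

violations = boundary_violations(declared_flows)
for line in violations:
    print("BLOCKED:", line)

# A CI job would fail the build on a non-zero exit code.
exit_code = 1 if violations else 0
print("exit code:", exit_code)
```

When a regulation changes, the change lands as an edit to `LEVELS` or to a sink’s clearance, reviewed and versioned like any other commit.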

Finally, institute continuous education. Azure evolves monthly; your employees’ understanding evolves yearly, if ever. Treat skilling as part of your security posture. If your architects don’t know the difference between Azure AI Foundry’s endpoint authentication and legacy connection strings, they’re one update away from breaking compliance. Train them, certify them, hold them accountable.

Because in the AI era, ignorance isn’t bliss—it’s negligence. Governance automation without human intelligence is just bureaucracy accelerated. And that, ironically, is the fastest way to fail “AI readiness” while proudly announcing you’ve completed migration.

Case Study: The Cost of Premature Cloud Adoption

Let’s test all of this with a real‑world scenario—fictionalized, but depressingly common.

A mid‑size financial services firm—let’s call it Fintrax—undertook a heroic “Cloud First” initiative. The CIO promised shareholders lower costs and faster innovation. They migrated hundreds of workloads to Azure within twelve months. Virtual machines replicated perfectly, databases spun up, dashboards glowed green. Success, according to the PowerPoint.

Then the board requested an AI pilot using Copilot and Azure OpenAI to analyze client interactions. That’s when success unraveled.

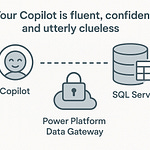

The first problem: data sprawl. Marketing data lived in Blob Storage, client files in SharePoint, transaction logs in SQL Managed Instance—all untagged, unclassified, and mutually oblivious. The AI model couldn’t retrieve consistent records; Fabric integration produced mismatched schemas. Developers manually merged tables, accidentally including personal identifiers. Now they had a compliance breach before the model even trained.

Next came security chaos. To accelerate migration, Fintrax had replicated on‑premises permissions one‑to‑one. Decades‑old Active Directory groups reappeared in the cloud with global reader access. When the Copilot instance ingested datasets, it followed those same permissions—meaning junior interns could technically prompt the model for sensitive financial summaries. Defender for Cloud flagged it precisely one week after a regulator did.

Then the governance vacuum became obvious. No one knew who owned AI risk approvals. Legal demanded documentation for data lineage; IT shrugged, claiming “it’s in the portal.” The portal, in fact, contained 14 disconnected resource groups with overlapping names like AI‑Test2‑Final‑Copy. The phrase “governance plan” referred to an Excel sheet saved in OneDrive with color‑coded rows—half in red, half in regret.

Each of these failures stemmed from the same root cause: migration treated as a destination instead of a capability. The company assumed that being in Azure automatically meant being secure and compliant. But Azure is a toolbox, not a babysitter. When the billing cycle revealed a 70% cost increase due to duplicated compute and unmanaged storage, the CFO labeled AI an “unnecessary experiment.”

Ironically, the technology worked fine—the organization didn’t. With proper data readiness, identity restructuring, and AI governance roles defined in code, Fintrax could have been a showcase for modern transformation. Instead, it became another cautionary slide in someone else’s keynote.

The lesson is painfully simple: migrating fast might win headlines, but migrating smart wins longevity. A cloud without governance is just someone else’s data center full of your liabilities. And until your people, policies, and pipelines operate as one intelligent system, the only thing your “AI‑ready architecture” will generate is excuses.

The 3‑Step AI‑Ready Cloud Strategy

So how do you escape this cycle of fashionable incompetence and actually achieve AI readiness? It’s not mysterious. You don’t need a moonshot team of “AI visionaries.” You need a disciplined, three‑step architecture strategy: unify, fortify, and automate.

Step one: Unify your data estate.

This is the architectural detox your migration skipped. Forget the vendor slogans; your priority is convergence. Every workload, every dataset, every process that feeds intelligence must exist within a governed, observable boundary. In Azure terms, that means integrating Fabric, Purview, and Defender for Cloud into one coherent nervous system—where classification, lineage, and threat monitoring happen simultaneously.

Unification starts with ruthless inventory. Identify shadow resources, forgotten storage accounts, orphaned subscriptions. Map them. If you can’t see them, you can’t protect them, and if you can’t protect them, you have no authority to deploy AI over them. Then consolidate data under a consistent schema and enforce metadata tagging through automation—not human whim. If each resource group uses distinct naming conventions, you’ve already fractured the genome of your digital organism.
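Enforcing tags and names “through automation” can start very small. The sketch below assumes an exported inventory where each resource is a dictionary with a name and a tag map. The required tag set and the naming pattern are made-up conventions for the example, not Azure requirements.

```python
from __future__ import annotations

import re

# Assumed house rules; substitute your own.
REQUIRED_TAGS = {"owner", "classification", "cost-center"}
NAME_PATTERN = re.compile(r"^[a-z]+-[a-z0-9]+-(dev|test|prod)$")


def audit_resource(resource: dict) -> list[str]:
    """Return the convention breaches found on a single resource."""
    findings = []
    if not NAME_PATTERN.fullmatch(resource["name"]):
        findings.append("name breaks convention")
    missing = sorted(REQUIRED_TAGS - set(resource.get("tags", {})))
    if missing:
        findings.append("missing tags: " + ", ".join(missing))
    return findings


# Invented inventory export.
inventory = [
    {"name": "fin-ledger-prod",
     "tags": {"owner": "data-platform", "classification": "Confidential",
              "cost-center": "cc-104"}},
    {"name": "AI-Test2-Final-Copy", "tags": {"owner": "unknown"}},
]

for resource in inventory:
    for finding in audit_resource(resource):
        print(f"{resource['name']}: {finding}")
```

Run on a schedule, a check like this turns naming drift from an archaeology project into a daily diff.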

Once your estate is visible and normalized, link telemetry sources. Connect Microsoft Sentinel, Log Analytics, and Defender signals directly into your Fabric environment. That’s not over‑engineering; it’s coherence. AI thrives only when it can correlate behavior across data, identity, and infrastructure. Unification transforms the cloud from a collection of containers into an interpretable environment.

Step two: Fortify through governance‑as‑code.

Security policies written once in a SharePoint document accomplish nothing. Governance must compile. In Azure, this means expressing compliance obligations as deployable templates (Blueprints, Azure Policy definitions, ARM templates, Bicep files) that enforce classification and residency automatically. For instance, data labeled “Confidential‑EU” should never cross regions. Ever. The system, not an analyst, should prevent that.
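The residency rule is simple enough to express as a guard that runs before any copy job. This is an illustrative sketch only: the allowed-region set for the label is an assumption (here, a handful of EU Azure regions), and in a real estate the enforcement would live in the platform’s policy engine rather than in application code.

```python
from __future__ import annotations

# Assumed mapping from label to permitted regions; adjust to your policy.
ALLOWED_REGIONS = {
    "Confidential-EU": frozenset(
        {"westeurope", "northeurope", "francecentral", "germanywestcentral"}
    ),
}


class ResidencyViolation(Exception):
    """Raised when a copy would move labeled data outside its allowed regions."""


def authorize_copy(label: str, target_region: str) -> None:
    """Raise if data carrying this label may not land in the target region."""
    allowed = ALLOWED_REGIONS.get(label)
    if allowed is not None and target_region not in allowed:
        raise ResidencyViolation(f"{label} data may not be copied to {target_region}")


authorize_copy("Confidential-EU", "westeurope")  # permitted, returns quietly

try:
    authorize_copy("Confidential-EU", "eastus")
except ResidencyViolation as err:
    print("denied:", err)
```

The important property is the default: the copy fails unless the rule allows it, so nobody has to remember to ask.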

You can implement this today using Azure Policy rules keyed on resource tags that mirror your Purview classifications, with Defender for Cloud posture management reporting on compliance. Combine that with identity re‑architecture (Managed Identities, Conditional Access, Privileged Identity Management) to ensure AI systems inherit the principle of least privilege by design, not by accident.

Human audits still matter, but humans become reviewers of events, not gatekeepers of execution. That’s the paradigm shift: codified trust. Your governance documents become executable artifacts tested in pipelines just like software. When regulators arrive, you don’t share PowerPoint slides—you run a script that proves compliance in real time.

Fortification also includes continuous validation. Integrate security assessments into your CI/CD flows so that any configuration drift or untagged resource triggers automated remediation. Think of it as DevSecOps extended to governance: every deployment checks adherence to legal, ethical, and operational constraints before it even reaches production. Only then is your cloud deserving of AI workloads.
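Drift detection is, at its core, a diff between what you declared and what is actually deployed. The following sketch assumes both sides are available as flat dictionaries of settings. The setting names are invented, and the “remediation” is only a plan of values to reapply, not a call to any deployment tool.

```python
from __future__ import annotations


def detect_drift(declared: dict, actual: dict) -> dict:
    """Return every setting whose deployed value differs from the declaration."""
    drift = {}
    for key in sorted(set(declared) | set(actual)):
        want, have = declared.get(key), actual.get(key)
        if want != have:
            drift[key] = {"declared": want, "actual": have}
    return drift


def remediation_plan(drift: dict) -> dict:
    """Settings to reapply so the resource matches its declaration again."""
    return {
        key: change["declared"]
        for key, change in drift.items()
        if change["declared"] is not None
    }


# Invented example: public access was switched on and a tag went missing.
declared = {"public_access": False, "min_tls": "1.2", "tag:classification": "Confidential"}
actual = {"public_access": True, "min_tls": "1.2"}

drift = detect_drift(declared, actual)
print("drift:", drift)
print("reapply:", remediation_plan(drift))
```

Settings that exist in the deployment but not in the declaration show up in the drift report without a remediation value, which is deliberate: an undeclared setting needs a human decision, not an automatic overwrite.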

Step three: Automate intelligence feedback.

Most organizations implement dashboards and call that “observability.” That’s like fitting smoke alarms and never testing them. AI readiness demands active intelligence loops—systems that learn about themselves.

Construct an AI governance model that gathers operational telemetry, classifies anomalies, and adjusts policies dynamically. Azure Monitor and Fabric’s Real‑Time Intelligence (formerly Real‑Time Analytics) can feed this continuous learning loop. If a model suddenly consumes anomalous volumes of sensitive data, the system should alert Defender and automatically throttle access until reviewed.
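A feedback loop like that needs a definition of “anomalous.” One simple option, used here purely as an illustration, is a z-score against recent history: throttle when the latest reading sits more than three standard deviations above the mean. The threshold, the metric, and the sample numbers are assumptions; production systems typically use richer detectors.

```python
from __future__ import annotations

from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """True when the latest value is far above the historical mean."""
    if len(history) < 2:
        return False  # not enough data to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest > mu
    return (latest - mu) / sigma > threshold


def decide_access(history: list[float], latest: float) -> str:
    """Return the action the control loop should take for this reading."""
    return "throttle" if is_anomalous(history, latest) else "allow"


# Invented telemetry: sensitive records read per day by one model.
daily_sensitive_reads = [120, 135, 128, 140, 131, 125, 138]

print(decide_access(daily_sensitive_reads, 133))
print(decide_access(daily_sensitive_reads, 900))
```

The decision is a plain string so that whatever sits downstream (an alert, a revoked token, a ticket for review) can be wired in without touching the detection logic.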

Automation is not about convenience; it’s about survivability. AI operates at machine speed. Human review will always lag unless governance scales equally fast. Automating policy enforcement, cost optimization, and anomaly detection converts your architecture from reactive to adaptive. That, incidentally, is the same operational model underlying Microsoft’s own AI Foundry.

Together, unification, fortification, and automation rebuild your cloud into an environment AI trusts. Everything else—frameworks, roadmaps, skilling programs—should orbit these three principles. Without them, you’re simply modernizing your chaos. With them, you start architecting intelligence intentionally rather than accidentally.

And remember, this isn’t optional evangelism. The AI Controls Matrix released by the Cloud Security Alliance defines 243 control objectives; more than half depend on integrated governance, automated monitoring, and unified identity. You can’t check those boxes after deployment; they are the deployment.

So, if you want a formula worth engraving on your data‑center wall:

Visibility + Verification + Velocity = AI Readiness.

Visibility through unification, verification through governance‑as‑code, velocity through automation. Three steps—performed relentlessly—and you’ll transform cloud migration from a logistical exercise into an evolutionary jump.

Conclusion: Stop Migrating, Start Architecting

Here’s the bottom line—migration is a logistics project; architecture is a strategic act.

If your cloud strategy still reads like a relocation plan, you’ve already lost a decade. AI will not reward the fastest movers; it will reward the most coherent builders. Cloud migration used to be about reducing friction—closing datacenters, saving money, consolidating servers. AI readiness is about increasing precision—tightening control, enriching data lineage, removing ambiguity. Those are opposites.

So stop migrating for its own sake. Stop treating workload counts as progress reports. The success metric has changed from “percentage of servers moved” to “percentage of decisions we can trace and defend.”

Start architecting: build intentional topology, governed unions between data and policy, automation loops that watch themselves. Treat tools like Microsoft Fabric and Azure AI Foundry not as services but as the regulatory nervous system of your entire enterprise. Start writing your compliance in code, your access controls as logic, your governance as continuous validation pipelines.

Your next audit should look less like paperwork and more like compilation output: *0 errors, 0 warnings, all models explainable.*

And if that sounds like overkill, remember what happens when you don’t. You end up with cloud sprawl, budget hemorrhage, and AI programs locked in quarantine because nobody can prove what data trained them. Modernization without discipline is merely digital hoarding.

The irony is that the technology to fix this already sits in your subscription. Azure’s multi‑layered security, Purview governance, Fabric integration—each a puzzle piece waiting for an architect, not a tourist. The question is whether you have the will to assemble them before your competitors do.

So, shut down the migration dashboard. Open your architecture diagram. And start redrafting it like you’re building the foundation for a planetary AI network—because, in effect, you are.

Your systems shouldn’t just run in the cloud; they should reason with it. Courtesy of actual design, not happy accidents. Stop migrating. Start architecting. That’s how you become not just “cloud ready” but AI inevitable.