The evolution of Copilot has been rapid, transforming from a nascent idea into a pivotal tool within Microsoft 365. This AI-powered assistant streamlines workflows by automating tasks, generating content, and offering intelligent suggestions, significantly boosting productivity. In fact, AI tools like Copilot have been shown to increase worker productivity by 66%. This blog delves into the journey of Copilot’s development, addresses current challenges for Microsoft 365 Copilot, and outlines its future trajectory. We’ll explore how Microsoft engineered Copilot for Microsoft 365, discuss its present limitations, and reveal upcoming enhancements for this powerful Microsoft 365 Copilot AI.

Key Takeaways

Copilot is an AI helper. It works with Microsoft 365 apps. It helps people do tasks faster. It makes content and gives smart ideas.

Copilot has grown a lot. It started as an idea. Now it works in many Microsoft apps. It helps users save time and work better.

Copilot faces challenges. These include keeping data safe. It also needs to be accurate. Users need training to use it well.

Microsoft plans to make Copilot better. It will be more personal. It will have new AI features. It will connect with more tools.

Microsoft wants Copilot to be a key AI tool. It will help companies. It will make work easier. It will keep getting smarter and safer.

How Copilot Changed Over Time

The First Idea

Microsoft first thought of Copilot. It was an advanced AI helper. They wanted it to be a full productivity partner. This idea was more than just doing tasks. They wanted a smart helper. It would understand what you needed. It would help you before you asked. This was for all your digital work. The goal was to use AI. It would make people better at their jobs. Hard tasks would become easy. Daily work would be faster. This idea started the big evolution of Copilot. It became a strong tool.

Early Features

The early evolution of Copilot joined key Microsoft 365 applications. These included Word, Excel, PowerPoint, Outlook, and Teams. In the beginning, Copilot helped with common tasks. For example, Copilot in Outlook could sum up long emails. It showed who spoke and what happened. In Teams, it gave meeting notes. This was for people who joined late. Or it gave full summaries of recorded talks. Users also liked Copilot‘s writing help. It made reports, emails, presentations, and spreadsheets. It used info from the Microsoft Graph or the internet. The Semantic Index for Copilot also came out. It mapped user and company data. This gave helpful answers. Also, Copilot in PowerPoint used DALL-E. This made custom pictures. It also had “Rewrite with Copilot.” This made text better. It also made slide titles.

Big Steps

Copilot‘s journey had many big steps. On February 7, 2023, Microsoft showed the new Bing. It had a chatbot. Soon after, on March 16, 2023, Microsoft launched Microsoft 365 Copilot. This was an AI helper just for Microsoft 365 applications. In May 2023, Copilot came to Windows 11. A big change happened on September 21, 2023. Bing Chat and Microsoft 365 Copilot became one. They were called Microsoft Copilot. Businesses could get it on November 1, 2023. This was a big step for Copilot for Microsoft 365. By November 15, 2023, all Windows 11 users had Microsoft Copilot. On December 12, 2023, it came to iOS and Android. Microsoft kept growing. It launched Copilot Pro. This was a paid plan. That was on January 15, 2024. In February 2024, Copilot was for small and medium businesses.

Current State of Microsoft 365 Copilot

Broadening Application Scope

Microsoft keeps making Copilot bigger. It works with more Microsoft 365 applications. This strong AI tool now joins many services. It goes beyond Word and Excel. Copilot now helps in OneNote and Loop. It also works with Microsoft Clipchamp and Whiteboard. SharePoint and OneDrive are included too. This wide reach means users get help. They get it in almost all their digital work. The goal is to make AI easy to use. It should be part of daily work. This makes all Microsoft tools better.

User Adoption and Benefits

Companies everywhere are seeing good things. They get more done with Microsoft 365 Copilot. Many businesses say employees save a lot of time. For example, Copilot users save 9 hours each month. Vodafone users saved four hours weekly. This shows how much AI helps. It makes things work better.

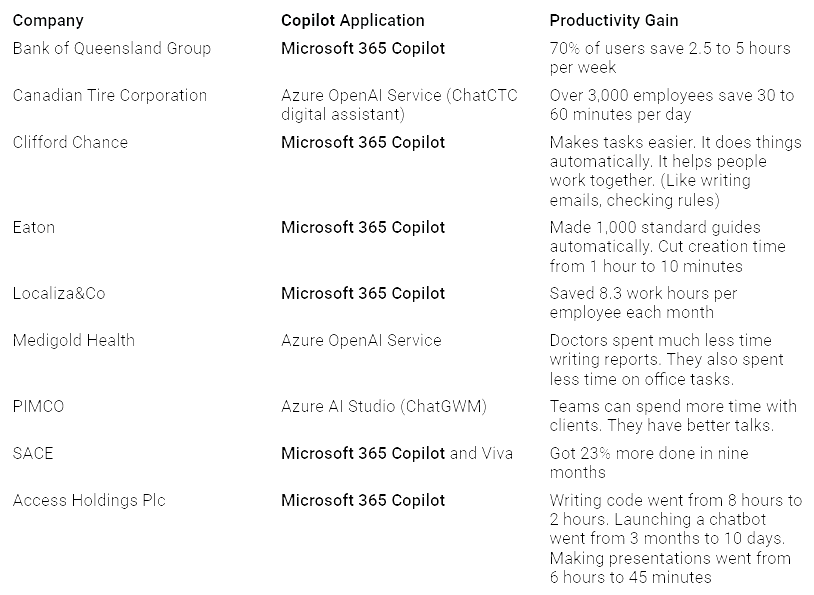

Here are some real examples of Copilot‘s help:

Core Capabilities Today

Copilot uses smart large language models. These understand and write text like humans. This means you can talk to it naturally. It works with Microsoft Graph. This helps it learn about you. It knows what you like. It knows your company’s info. This makes special content for you. It fits what you need. Copilot also uses good rules. These are for how content looks. They are also for how it is made. This makes sure content meets standards. It also matches company rules. It gives helpful examples. This makes creating things easier.

Copilot has strong skills. These are in many Microsoft 365 applications:

Word: Make, shorten, understand, fix, and improve papers. Turn text into tables. Rewrite text automatically. Make pictures. Summarize and find facts.

Excel: Get data ready. Find patterns. Make charts. Make hard tasks simple. This helps you make smart choices.

PowerPoint: Make presentations with pictures. Add notes for the speaker. Use sources from a prompt. Change content to other languages. Shorten long presentations. Add new slides. Make pictures.

Outlook: Summarize email chains. Keep up with your inbox. Find important emails. Write replies that fit. Make scheduling easy. Create meeting invites.

Teams: Review talks. Organize main points. Summarize key actions. Answer questions from chats. Answer questions from meetings or calls. Write messages.

Copilot also does data analysis automatically. It fills in repeated data. It uses patterns or old entries. It helps look at data trends. It makes reports. It gives charts and summaries when asked. Users can say what they need. Copilot will suggest or make formulas. In Excel, Copilot makes data analysis simple. It automates formulas. It finds insights. It looks at data trends. It creates charts. This makes things much faster.

Organizations face problems. They want to use Microsoft 365 Copilot. These problems include keeping data safe. They also need to manage what users expect. And they need to connect it to their tech. Fixing these things is key. It helps them use this strong AI tool well. It helps them get the most from it.

Data Security and Privacy

Keeping data safe is a big worry. This is for groups using Copilot. A main fear is that Copilot‘s results. They do not always keep labels. These labels are from original files. This can put private data at risk. A flaw (CVE-2024-38206) in Copilot Studio. It let people fake server requests. This could show private info. It was about internal cloud services. Also, Copilot-made papers. They might be shared too much. This shows private info.

A big worry comes up. Copilot uses all data. An employee can see it. Many workers can see private data. This is more than they should. This makes a big risk. Private info could be shown. This is through Copilot. If a user can see private info. Copilot gets the same access. This can make private info appear. It shows in its results. Studies show over 3% of business data. It is shared across groups. This is without proper care. This makes big data leaks possible. This is with Copilot. Security teams are very worried. 67% fear AI tools. They will show private info. Over 15% of key business files. They are at risk. This is due to too much sharing. Also, wrong access rights. And wrong labels. This shows big risks. It is with Copilot‘s access. It is to private data.

Other dangers include attacks. These can change Copilot‘s actions. Or they can take info out. Connection flaws also make new ways to attack. This is as Copilot links with Microsoft 365 services. Prompt injection attacks. They can trick Copilot. They make it search or steal data. Or trick users.

Microsoft uses ways to guard data. Microsoft 365 Copilot Chat sends calls. These are to large language models (LLM). They go to the closest data centers. It adds extra safety. This is for EU users. It follows the EU Data Boundary. It keeps EU traffic in the region. World traffic may go to the EU. It may go to other regions. This is for LLM work. Microsoft also hides data. It takes out personal info. It makes data points general. This stops people from being known. Data pseudonymization. It swaps real IDs for fake ones. Private data is locked. This is during storage and moving. Microsoft follows rules. These are like GDPR, CCPA, and HIPAA. Copilot for Microsoft 365 also keeps promises. These are about where data lives. It has extra safety for EU users. Access rules based on roles. They limit data access. Only authorized staff can see it. Sharing data with others. It only happens with user OK. Users can manage their info. They can delete past Copilot talks. Microsoft also has plans. These are for handling problems. They have user lessons. And ways to get feedback. Data minimization. It makes sure only needed data is taken. Purpose limitation. It means data is used only for its goal. User OK needs clear permission. The Recall feature on Copilot+ PCs. It locks and saves screen shots. They are kept on the computer. Users have full control.

Accuracy and Reliability

Copilot can sometimes make mistakes. This is like other AI models. It can give wrong info. This is called ‘hallucinations.’ Trusting these bad answers. It can lead to poor choices. It can hurt a company’s name. Or even cause legal trouble. For example, Copilot often gave wrong formulas. This was for a spreadsheet task. It used outside links. And wrong code. The user needed a formula. It was for the current sheet. Excel showed only a few items. This was in a filter list. Copilot gave basic, unhelpful tips. It did not find the real problem. It also did not get the meaning. This was for questions. It gave general answers. It used unrelated SharePoint files. This was when fixing an Excel problem.

Many things cause these errors. Copilot might use old data. Or incomplete data. Or wrong data. This is from inside and outside. Unclear user questions. They can make Copilot confused. It gives wrong answers. It may also not get complex things. This leads to simple or wrong answers. Info from unproven outside sources. It can also cause errors. Without regular checks. Copilot might keep giving bad answers. Microsoft‘s built-in limits. They are on data work. And task difficulty. They can also affect how right it is.

To check Copilot‘s answers. Microsoft uses auto tests. They check many results. It does safety and tone checks. This finds bad or unfair content. It makes sure the tone is right. Checks for other languages. They make sure it works well. This is for different languages. It uses native speakers. This is for accuracy. Regression testing. It runs old tests. This is on new models. It finds unexpected changes. Copilot also cleans up results. It filters bad content. This is from Azure OpenAI answers. Users can change code. Or descriptions. This is before they are final. Users also get lessons. They learn about Copilot‘s skills. And its limits. How to write good questions. How to check answers. This is using trusted sources. Verified answers. These are human-approved visual answers. They are saved in the semantic model. This ensures good answers.

User Skill Gap and Readiness

A big problem is user skills. Users are not ready. They need to use Copilot well. Many users need training. They need to know what it can do. And how to use it right. To fix this problem. Many types of training are best. This includes a clear training plan. It has steps to learn. It starts with basics. Then moves to job-specific uses. Specific app training. And advanced topics. Like prompt engineering. One example showed a ‘Copilot Competency Pathway.’ This plan led to 78% skill. This was in three months.

Teacher-led training is also key. This means live online classes. They are for basic ideas. Workshops for specific teams. They are for job tasks. And advanced classes. These are for expert users. A company got 95% Copilot use. This was in 48 hours. They linked training to client work. Self-paced online learning. It offers flexible ways to learn. This is through short lessons. Learning paths. And interactive guides. This can link with Viva Learning’s Copilot Academy. Or Microsoft 365 Learning Pathways. A health group used a step-by-step online plan. It was for basic tasks. Job-specific uses. And advanced topics. This let users learn at their own speed.

Performance and Scalability

For big company uses. Copilot has limits. These are on speed and size. For GitHub Copilot. Specific limits include. It lacks special logic. And code quality is not steady. This needs extra checks. This is for generated code. It saves less time. More generally, only 12% of companies. They report big business value. This is from Copilot. 82% see only some value. Or they are not sure. This often happens. Companies lack clear goals. These are for how well it works. Only 10% have formal goals. And basic Microsoft 365 numbers. 38% lack them completely.

Security and rules are also big blocks. 71% of groups say this. Specific worries include. Too much content (67%). Fear of too much sharing. And losing data (63%). And tough rules to follow (43%). Groups with “easy to support” Microsoft 365. They were nine times more likely. They got big Copilot value. 55% see AI helpers as useful. But 86% want stronger tech controls. 79% fear costs getting too high. And 70% worry about too many helpers. Only 14% think current rules. They support safe use. Other AI products also cause problems. This is for 28% of companies. AI mistakes are very risky. This is in key tasks. Like legal, rules, or money uses. This needs strong prompt writing. And human checks. This prevents big problems.

Ethical AI Considerations

Good rules are vital. This is for Copilot‘s AI work. And for using it. These include being clear. Making AI systems easy to get. Being responsible. Making sure someone is in charge. This is for AI actions. Being fair. Not having bias or unfairness. And privacy and data safety. Keeping private info safe. Groups should make an AI use policy. It says what is OK for Copilot. It covers secrets. How data is handled. And good behavior rules. Policies might ban using Copilot. This is for private client details. And need human checks. This is for AI-made messages. Watching how users use Copilot. This is with tools like Purview. And audit logs. It helps make sure rules are followed. Checking logs often. It can find rule breaks. And tries to get around safety.

Microsoft‘s Responsible AI Standard. It covers ideas like fairness. Being trustworthy. Privacy. And including everyone. This standard helps groups. They follow AI laws and rules. Microsoft offers tools and ways. They help companies use responsible AI. Transparency Notes. They are part of the Responsible AI Standard. They help customers get AI systems. And how they are run. Groups should set up an Office of Responsible AI. It watches over ethics and rules. AI rule tools. Like the Microsoft Responsible AI Dashboard. They watch and manage AI systems. Microsoft wants to give trustworthy AI. It learns from research. Customer feedback. And lessons learned. This gives privacy, safety, and security.

Copilot deals with possible biases. This is in its made content. It uses several ways. Its performance is checked. It uses measures like precision and recall. This checks how good and useful suggestions are. User happiness is checked. This is through surveys and feedback. How well it works everywhere. It is checked by testing Copilot. This is across different data and tasks. Red teaming exercises. These are with outside experts. They find weak spots or biases. Copilot has a strong filter system. It blocks bad language. It stops suggestions in sensitive areas. There is constant work. This is to make this system better. It finds and removes bad content. And fixes biased, unfair, or mean results. The system also avoids risky uses. This includes bias. It makes sure it is fair. Systems are trained to find bias and abuse. This is without looking at bad content. Customs officials should make sure. Copilot uses varied and new data. This stops old biases from staying. Customs teams must check reports. These are made by Copilot. This ensures fair and right choices. Copilot‘s risk check. It should use neutral data points. Like odd transactions. Not places or business types. Customs groups should check AI-made money predictions. This confirms fair treatment. This is across different businesses.

System Integration Complexities

Putting Copilot into old company systems. It has tech problems. Copilot needs certain tech things. This is to work best. These include subscriptions. They are for Microsoft 365 for Business/Business Premium. Or Microsoft 365 E3/E5. OneDrive access. An Entra ID. And it must work with the new Outlook. Access to the Microsoft 365 and Teams App Store. It is also key for using it.

Learning skills to add business apps. And outside data connections. This is another hurdle. Connecting Copilot with business apps. Or outside data sources. This is through plug-ins and connectors. It needs new development skills. This means knowing programming languages well. APIs. And ways to connect things. Along with knowing data structures. Security rules. And following rules. Fixing errors to help Copilot users. This is also a problem. Groups must give quick help. This is for workers who find Copilot errors. This includes setting up help channels. Giving IT staff. And making guides for fixing problems. Early advice through training. And easy-to-use guides. They are also needed.

Adding Microsoft 365 Copilot. This is into current work. In a company with many systems. And many tools. It needs careful planning. Making sure it fits smoothly. It needs IT teams to work together. This is to fix tech fit problems. Data privacy and safety worries. They need tech fixes. Even if not purely tech. Copilot uses private company data. This brings up problems. These are about data leaks. Not allowed access. And following rules. Solutions mean knowing Microsoft‘s safety rules. Encryption. Multi-factor login. Safe data access. Risk checks. And access control. This is based on user roles.

Good Copilot integration. This is in a complex IT setup. It needs some things first. Customers are fully in charge. They set use rules. These match legal, contract, and rule frameworks. Identity, device, and access management. This is a shared job. Copilot works with existing Microsoft 365 setups. But it needs extra controls. These are just for Copilot. Data rules are key. This is for managing info. And boosting work. AI plug-in and data links. They mean shared responsibility. This is for managing risks. This is with outside data and systems. Agents. These are special AI helpers. They also need shared responsibility. Groups must deal with Responsible AI (RAI). And rule following. This includes specific LLM models. Or fine-tuning for special tasks. Deciding how to develop. Choosing the right tools. And starting with a small working version. These are also important. Lastly, defining tech and data needs. It means thinking about user experience. Data sources. Ways to link data sources. And automation needs.

Roadmap for Copilot for Microsoft 365

Microsoft keeps making its AI better. It has a clear plan for Copilot. This plan makes Copilot smarter. It will be more connected. It will be a key helper for users.

Better Personalization

Copilot will get very personal. A main new part is its Memory. It will build a user profile. This is based on how you use it. It learns your habits. It guesses what you need. It changes how it talks to you. This fits your life. Copilot gets info from emails. It uses chats. It uses voice commands. This builds your special profile. It uses direct info. It also uses guessed info. This makes future talks better. The helper learns over time. It changes as your life changes. This makes its advice better. This “memory” helps with ideas. It gives alerts. These are based on what you talk about. It uses past tasks. It uses work habits. It remembers things. It also gives ideas before you ask. Like reminders. Or tips to work better. These are based on your daily plans.

Advanced AI Features

Microsoft plans big steps. These are for Copilot‘s AI skills. Special versions are coming. Like Microsoft 365 Copilot for Sales. It will give better sales ideas. It will make SalesChat easy. It will have new ways to automate. It will send notifications. Microsoft 365 Copilot for Service will link to any CRM. It will sum up emails. It will draft them. Finance helpers in Microsoft 365 will automate number checks. They will get outside data. They will add more tools. This is through Copilot Studio. They will also help with calls in Teams. They will use AI to sum up market news.

Looking ahead, the plan includes:

Computer-Using Agents (CUA): These agents will do tasks. They work on Windows apps. They use sight and thought. They work with screens. Even without special links (September 2025).

Client SDK: This will put Copilot agents. They will be in Android, iOS, and Windows apps. This is for rich talks (September 2025).

Code Interpreter: Users can upload files. Like Excel, CSV, and PDF. It will use Python code to check them (September 2025 Preview). It will also make Python code. This is from normal language (August 2025 GA).

File Analysis: This will let users upload files. They can upload images. Agents will check them. They will make answers. They will send them to other systems (August 2025).

Advanced NLU Customization: This will set topics. It will set items. It uses custom data. This makes it more exact. It helps with Dynamics 365 (July 2025).

Microsoft 365 Copilot Tuning: This will train models. It uses company data. This is for special tasks. It will add them to Microsoft 365. Like Teams, Word, and Chat (June 2025 Preview).

More Connections

Copilot will connect more. It will work with all Microsoft tools. You can add to it. This lets it work with other Microsoft 365 parts. Connectors for Copilot can reach all apps. These apps work with Copilot in Microsoft 365. Copilot connectors are in Microsoft 365 Copilot. They are in Power Automate. They are in Power Apps. They are in Azure Logic Apps. This wide reach makes Copilot a helper everywhere. This plan also has special versions. Like GitHub Copilot for code help. Windows Copilot for computer help. Bing Chat (now Copilot) for web search AI. And Security Copilot for online safety.

Fixing Current Problems

Microsoft is working hard. It is fixing Copilot‘s problems. These are with being right. And being dependable. They use good ways to manage info. This makes sure company knowledge is good. It is also up-to-date. Grounding means adding info. This helps Copilot understand. It makes answers fit the company. It uses smart search. This finds company papers. It finds trusted web results.

Other ways to help:

Using many different data sets. This makes sure it is fair. It stops AI bias.

Doing things after training. Like learning with human help. This makes AI answers match human goals. It makes them better.

Adding human checks. Doctors will watch. This is for important uses.

Using clear methods. Like the Medical AI Quality Check. This checks AI answers. It looks for being right. It looks for being useful. It looks for being fair.

Always watching. Always training again. This uses new data. This makes sure results are fair. They are dependable. They are useful in clinics.

Microsoft knows Copilot‘s answers. They can be “hit or miss.” This is true for hard tasks. Like making action lists. So, people need to check. They need to fix things by hand. They also think about safety. They think about how well it works. This is when using things like Restricted SharePoint Search. It might make it less exact.

Microsoft‘s AI Plan

Microsoft sees Copilot as key. It is the main way to use AI. It is the “front door.” It helps you talk to smart tools. These are in the cloud. The idea is for “agent-run, human-led companies.” Here, AI is the base. Microsoft builds its call center service. It uses AI from the start. It does not just add AI to old systems. This uses a “human + agent” design. It brings together automation. It brings context. It brings real-time info. Copilot Studio gives an easy way. You can build AI agents. You can manage them. You can add to them. This lets you change them. This is more than what comes ready. Microsoft plans to add to Microsoft 365 Copilot. It will have agents for roles. It will have agents for tasks. (Like Copilot for Sales). It will also let users build their own.

Microsoft‘s AI plan focuses on adding AI. It uses machine learning. This is across all its products. This makes advanced tools easy to get. Azure AI Services give strong tools. They make AI easy for everyone. AI tools are in Microsoft 365 products. (Like PowerPoint, Excel). They are in GitHub Copilot. This helps with work. It helps with new ideas. The company promises to build AI ethically. It makes sure it is fair. It is clear. It is safe. Projects like Seeing AI. And Project Tokyo. They use AI for access. They use it for everyone. AI money goes to health. (Like Project InnerEye for checks). It goes to the environment. (Like AI for Earth). They keep putting money into AI research. And learning sites. Like AI University. This helps train future AI experts. AI for Good projects show a promise. They want to fix world problems. They want to make society better.

Copilot helps a lot at work. It makes things faster. But Copilot had problems. These were with data safety. And being correct. Microsoft wants to make it better. They keep working on it. The plan shows Copilot will get smarter. It will be safer too. Microsoft 365 Copilot will be a key helper. It will keep changing. This will meet future needs. Users will work better. They will get more done. Microsoft wants Copilot to be stronger. This strong tool will keep growing. Microsoft’s plan for Copilot is plain.

FAQ

What is Microsoft 365 Copilot?

Microsoft 365 Copilot is an AI helper. It works with Microsoft 365 apps. It helps with tasks. It makes content. It gives ideas. This tool helps people work better. It helps companies too.

How does Copilot enhance productivity?

Copilot makes work much faster. It sums up emails. It writes papers. It checks data. People save many hours. They can do more important work.

What are the main challenges for Copilot adoption?

Data safety is a challenge. Privacy is a worry. AI content must be right. Users need to learn skills. Connecting systems is hard. Speed and size are also issues.

How does Microsoft ensure data security with Copilot?

Microsoft uses strong safety. Data is encrypted. Access is controlled. Data is kept small. Names are hidden. Microsoft follows privacy rules. This keeps info safe.

What is the future vision for Copilot?

Microsoft wants Copilot to be personal. It will have better AI. It will connect more. Plans include sales versions. Service versions are coming. It will fix old problems.