Ever wondered why Copilot in Dynamics 365 feels generic, even when your business data is anything but? The truth is, Copilot only knows what it’s been fed—and right now, that’s a general-purpose diet. What if you could connect it to your own private, domain-specific library? In the next few minutes, we’ll walk through the exact steps to make Copilot speak your industry’s language, process your business workflows, and give you recommendations that actually make sense for your world—not some imaginary average customer.

Why Copilot Needs More Than Default Data

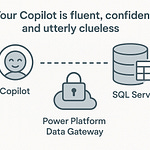

Most businesses expect AI to come in already fluent in their products, processes, and customer quirks. It’s easy to assume it will “just know” how your sales team tracks renewals or the way your supply chain handles seasonal spikes. But Dynamics 365 Copilot doesn’t start with that understanding. It begins with a broad, general-purpose knowledge base. That means it can work impressively well on common tasks, but the guidance it gives is shaped by patterns seen across all kinds of companies, not specifically yours.

This can be a bigger gap than people realize. Copilot has strong capabilities baked in, but it’s like hiring a smart generalist who’s never set foot in your industry. Point it at a customer record, and it can summarize the history neatly. Ask it to draft a follow-up, and it’ll produce a sensible email. The problem is when you push for judgment calls or predictions. The AI will fill in the blanks using what it thinks is normal — and without your business data as its primary reference, “normal” will be average, not customized.

I worked with a CRM manager recently who noticed her pipeline forecasts always felt just a bit off. The deals were real, the opportunities were correctly tagged, yet Copilot kept assigning close probabilities that didn’t make sense. It wasn’t broken — it was guessing based on generalized sales trends, not based on the way her company historically moved prospects through the funnel. What looked like a confident AI prediction was, in practice, a pattern match to someone else’s sales cycle.

ERP users have hit similar walls. One manufacturing company asked Copilot to suggest adjustments for a raw materials order, based on supplier lead times. The suggestion they got back was technically reasonable — spread the orders over a few shipments to reduce inventory holding costs — but it ignored the fact that their primary supplier actually penalized small orders with extended lead times. That critical detail lived in their internal system. Without pulling it into the AI’s view, the recommendation stayed surface-level, and acting on it would have slowed production.

That’s the inherent trade-off in Microsoft’s approach to default Copilot models. They’re built to be broadly applicable so anyone can get started without custom setup. But that design means the context they draw on is general rather than domain-specific. For daily reference tasks, this works fine. When you’re trying to guide high-stakes business decisions, the lack of local context can leave the advice feeling shallow or mismatched.

The point isn’t that Copilot can’t make good recommendations — it’s that the edge comes from feeding it exactly the right data. If you don’t integrate the systems that hold your company’s unique knowledge, you’re asking the AI to compete at your level while playing with a half-empty playbook. The models aren’t flawed; they just don’t know what they haven’t been told.

And this is where the conversation shifts from “Is Copilot smart enough?” to “What are we actually giving it to learn from?” Internal service metrics, long-term customer history, supplier contracts, region-specific market data — these are all invisible to Copilot until you bring them in. If you only rely on the out-of-the-box model, the AI’s answers will stay safe, generic, and uninspired. The moment you feed it the depth of your own business, that’s when it starts to sound like a seasoned insider instead of a well-meaning consultant.

The bottom line: Copilot’s brain is only as sharp as the library you stock. Think of every missing dataset as a missing chapter, and every integration as adding pages to its reference book. The next step is understanding exactly how to link those external chapters into its library without losing control of your data — and it all starts with knowing where the connection points live inside your architecture.

Mapping the Data Flow from Your Systems to Copilot

If you asked most teams to draw the path their external datasets take into Copilot’s “thinking space,” you’d probably get a couple of arrows ending in a big question mark. The data’s in your systems, it shows up somewhere in Dynamics, and Copilot uses it, but the actual journey is rarely mapped out clearly. And that’s a problem, because without seeing the path, it’s impossible to know where context might be lost or where security controls need tightening.

The first thing to understand is this isn’t magic. Copilot doesn’t automatically have a clear window into every database or application you own. You have to design a proper integration pipeline. That means deliberately choosing how data will leave your systems, which route it will take, and how it will arrive in a form that Copilot can weave into its prompt responses. It’s not a one-click import — there are defined entry points and each has its own rules.

Think of it like giving a trusted assistant a secure key to one filing cabinet, not a badge that opens every door in the building. You want them to have what they need to do their job well, but not unfettered access. This is where controlled connectors and APIs come in. They give you a targeted, secure way to pass exactly the information you choose into Dynamics without exposing everything else.

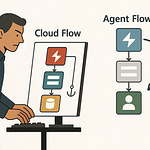

In practical terms, you’ve got a few main “highways” available. APIs allow you to build very specific flows from an existing application into Dynamics. Dataverse connectors sit inside the Microsoft ecosystem and can map external data sources directly into the Dataverse tables that Dynamics uses. Azure Data Lake is another common route — especially for larger or more complex datasets — where you can stage and preprocess data before it gets into Dataverse. No matter which option you pick, the goal is the same: get the right data to the right place while maintaining control.

If you were to sketch the diagram, it would start with your source system — maybe that’s a legacy ERP or a bespoke inventory tracker. From there, a secure connector or API endpoint acts as the gatekeeper. The data travels through it into Dataverse, which is where Dynamics stores and organizes it in a way Copilot can access. Once it’s in Dataverse, relevant pieces of that dataset can be pulled directly into Copilot’s prompt context during a user interaction. The entire chain matters, because if you break it down, every step is a point where data could be transformed, filtered, or misaligned.
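To make that chain concrete, here is a minimal sketch of the four stages in plain Python. Everything in it is a stand-in: the `Table` class only imitates a Dataverse table in memory, and `connector`, `build_prompt_context`, and the supplier field names are hypothetical names chosen for illustration, not part of any Microsoft API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Table:
    """Hypothetical in-memory stand-in for a Dataverse table."""
    name: str
    rows: List[Dict[str, object]] = field(default_factory=list)


def connector(record: Dict[str, object], allowed: Set[str]) -> Dict[str, object]:
    """Gatekeeper: only explicitly allowed fields leave the source system."""
    return {key: value for key, value in record.items() if key in allowed}


def build_prompt_context(table: Table, supplier_id: str) -> str:
    """Collect the stored rows relevant to one interaction as plain text."""
    lines = []
    for row in table.rows:
        if row.get("supplier_id") == supplier_id:
            lines.extend(f"{key}: {row[key]}" for key in sorted(row))
    return "\n".join(lines)


# Source system -> connector -> table -> prompt context
source_record = {
    "supplier_id": "S-100",
    "standard_lead_days": 14,
    "small_order_lead_days": 30,
    "negotiated_price": 4.25,  # stays behind: not in the allowlist
}
suppliers = Table("supplier_terms")
suppliers.rows.append(
    connector(source_record, {"supplier_id", "standard_lead_days", "small_order_lead_days"})
)
context = build_prompt_context(suppliers, "S-100")
```

The point of the sketch is that each arrow in the diagram is a function you can inspect. If a detail like the small-order lead time never shows up in `context`, you can check each stage in turn to see where it was dropped.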

There’s also a checkpoint most people forget: ingestion filters. These are the rules that run on the incoming data before it settles in Dataverse. They can strip out sensitive fields, standardize formats, or reject entries that don’t match your validation logic. They might seem like an afterthought, but they’re your first defense against sending the wrong information into the AI layer.
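Here is one way those three jobs (strip, standardize, reject) might look as a single function. It is a sketch under assumptions: the field names `salary`, `ssn`, `order_id`, and `order_date` are invented for the example, and the function runs on plain dictionaries rather than on any real Dataverse ingestion hook.

```python
from datetime import datetime
from typing import Dict, Optional

# Hypothetical list of fields that must never reach the AI layer.
SENSITIVE_FIELDS = {"salary", "ssn"}

# Date layouts this example accepts; the separators keep them unambiguous.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def standardize_date(value: str) -> str:
    """Return the date in ISO 8601 form, or raise ValueError."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def ingest(record: Dict[str, object]) -> Optional[Dict[str, object]]:
    """Return the cleaned record, or None if it fails validation."""
    # 1. Strip sensitive fields.
    cleaned = {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}
    # 2. Reject entries without a usable identifier.
    if not cleaned.get("order_id"):
        return None
    # 3. Standardize the date, rejecting anything unparseable or missing.
    try:
        cleaned["order_date"] = standardize_date(str(cleaned["order_date"]))
    except (KeyError, ValueError):
        return None
    return cleaned
```

Returning `None` for rejected records keeps the decision explicit, so the calling code can count or log rejections instead of silently loading bad rows.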

One of the earliest decisions you’ll face is timing. Do you need that external data flowing in real time so Copilot is always using the freshest numbers, or is a scheduled sync enough for your business case? Real-time integration sounds ideal, but it’s more resource-intensive and can introduce unnecessary complexity if your use case doesn’t actually require up-to-the-minute updates. Scheduled pushes, on the other hand, are simpler to manage but may leave a small lag between reality and what Copilot knows.
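One rough way to frame that decision is to write down how much lag the business can actually tolerate and compare it with the sync interval you could realistically run. The helper names below are hypothetical, and the rule itself is a simplifying assumption for illustration, not a sizing guideline.

```python
from datetime import datetime, timedelta


def choose_sync_mode(max_tolerated_lag: timedelta, scheduled_interval: timedelta) -> str:
    """Pick the simpler option whenever it still meets the freshness need."""
    return "scheduled" if scheduled_interval <= max_tolerated_lag else "real-time"


def is_stale(last_synced: datetime, now: datetime, max_tolerated_lag: timedelta) -> bool:
    """True when the copy Copilot sees is older than the business can accept."""
    return now - last_synced > max_tolerated_lag
```

For example, a nightly sync against a one-day tolerance comes out as `"scheduled"`, while the same nightly sync against a five-minute tolerance comes out as `"real-time"`.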

Mapping this architecture in detail isn’t just an IT exercise. Once you can see the start and end points, along with every gate and filter in between, it’s much easier to understand why a particular insight from Copilot feels incomplete — or how to feed it more useful context without opening the floodgates. And once the data path is defined, the next challenge is just as critical: making sure that new bridge you’ve built is locked down tight enough to meet every compliance rule you’re working under.

Building a Secure Data Bridge Without Breaking Compliance

You can open the door to your data, but the moment you do, the real question is whether the locks still work. Every organization has some form of regulated or sensitive information—customer details, financial results, contract terms—and the rules around sharing them don’t vanish just because you’re integrating with Copilot. In fact, when you start streaming data into an AI-driven workflow, the risk of moving something into the wrong context goes up, not down.

Misconfiguring a connector is one of the easiest ways this can happen. You might assume that because you’ve set up the connection to Dataverse, only the data you want will travel through. But unless the mapping and filters have been specifically designed, a field containing something sensitive—say, employee salaries—could slip in alongside the allowed fields. And once it’s part of the dataset feeding Copilot, it could be referenced in a summary or report where it shouldn’t exist.

Take a finance team wanting to feed their monthly revenue numbers into Copilot to help generate up-to-date performance overviews. Sounds harmless enough. But those same numbers fall under Sarbanes-Oxley (SOX) controls. That means they must be protected against unauthorized access and tracked for audit purposes. Simply pulling the data in without additional safeguards could make the company fail an audit before anyone even uses the AI’s output.

This is where role-based access control steps in. Instead of just connecting the data source, you define which users in Dynamics can even touch specific fields. Only finance managers might see the revenue figures. Sales might get totals without any breakdown. Copilot respects these permissions, so when a salesperson asks a question, the AI won’t have the authority to answer with data outside their role’s scope.
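The idea is easy to see in a few lines of code. This sketch only illustrates field-level scoping by role. It is not how Dynamics or Dataverse implements security roles, and the role names and fields (`finance_manager`, `sales`, `revenue_breakdown`) are assumptions made up for the example.

```python
from typing import Dict, Set

# Hypothetical mapping of roles to the fields they may see.
ROLE_FIELDS: Dict[str, Set[str]] = {
    "finance_manager": {"region", "total_revenue", "revenue_breakdown"},
    "sales": {"region", "total_revenue"},
}


def visible_fields(record: Dict[str, object], role: str) -> Dict[str, object]:
    """Return only the fields the given role is allowed to see.

    Unknown roles get nothing, so access is denied by default.
    """
    allowed = ROLE_FIELDS.get(role, set())
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "region": "EMEA",
    "total_revenue": 1200000,
    "revenue_breakdown": {"product_a": 700000, "product_b": 500000},
}
sales_view = visible_fields(record, "sales")
```

Here `sales_view` contains the region and the total but no breakdown, which mirrors the salesperson scenario above: whatever is built on top of that view cannot quote a figure it was never given.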

Conditional access policies work alongside that. Think of them as the situational rules: maybe a manager can access sensitive reports, but only from a corporate device on a trusted network. If they’re at home on a personal laptop, the sign-in is blocked or restricted before the session ever starts, so that data never reaches Copilot on their behalf. It’s an extra layer that stops leakage across environments you can’t control.
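As a mental model, a conditional access rule is just a check over the situation a request comes from. The sketch below assumes three signals (role, managed device, trusted network) and a hypothetical `AccessContext` type. Real policies are configured in your identity platform rather than written as application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessContext:
    """Hypothetical snapshot of the conditions around one request."""
    role: str
    device_managed: bool
    network_trusted: bool


def may_access_sensitive(ctx: AccessContext) -> bool:
    """Allow sensitive reports only when every condition holds."""
    return ctx.role == "manager" and ctx.device_managed and ctx.network_trusted


office = AccessContext(role="manager", device_managed=True, network_trusted=True)
home = AccessContext(role="manager", device_managed=False, network_trusted=False)
```

The same manager passes the check from `office` and fails it from `home`, which is the difference between identity-based and situation-based control.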

Encryption is another non-negotiable. Data in transit—from your source system to Dataverse—needs to be encrypted so it’s unreadable to anyone intercepting the stream. The same goes for encryption at rest, which covers storage in Dataverse and any staging layers like Azure Data Lake. Without it, you’ve left a gap for attackers to exploit.

Audit logging ties the whole compliance story together. Every pull or push of data into Dynamics should have a recorded entry—timestamped, user-attributed, and noting which fields were involved. If a regulator or internal auditor asks who saw what and when, you have a trail to follow. It’s not glamorous work, but without it, even the best technical controls are harder to prove in practice.
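A log entry with those three properties can be very small. The following is a minimal sketch using only the Python standard library. The function name `audit_entry` and the JSON layout are assumptions for illustration, not a format that Dynamics produces.

```python
import json
from datetime import datetime, timezone
from typing import Iterable, Optional


def audit_entry(
    user: str,
    action: str,
    table: str,
    fields: Iterable[str],
    now: Optional[datetime] = None,
) -> str:
    """Build one JSON audit line: who did what, to which fields, and when."""
    if now is None:
        now = datetime.now(timezone.utc)
    return json.dumps(
        {
            "timestamp": now.isoformat(),
            "user": user,
            "action": action,  # e.g. "pull" or "push"
            "table": table,
            "fields": sorted(fields),  # sorted so entries compare cleanly
        }
    )
```

Writing one self-describing line per data movement means an auditor's "who saw what and when" becomes a search over the log rather than a reconstruction from memory.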

The easy mistakes to make here are almost always about “too much” rather than “too little.” Pulling entire tables when only three columns are actually needed. Bypassing approval workflows because the integration “just needs to go live today.” Letting testing environments keep the same data permissions as production because it’s faster. Each shortcut widens the surface area where compliance can break.

When you get this setup right, the connector isn’t a simple data pump—it’s an intelligent filter. It decides not just what travels to Copilot, but in turn, what kinds of questions Copilot can even answer. If the sensitive revenue column isn’t in its scope for a given user, the AI literally doesn’t have the information to reference, no matter how direct the query.

With the bridge locked down, the next obstacle is a different kind of challenge. Even fully compliant, well-secured data can fall flat if Copilot can’t interpret it correctly. Once you know the right people are seeing the right data safely, the focus shifts to making sure that data actually speaks the AI’s language.

Structuring External Datasets for AI Understanding

Just because your dataset is secure and flowing into Dataverse doesn’t mean Copilot will actually know what to do with it. AI doesn’t “figure it out” in the same way a person might. It looks for structure, labels, and patterns it can match against. If those are missing or inconsistent, you’ll end up with responses that feel vague, incomplete, or just plain wrong—even if the raw data is technically there.

The reality is, content alone isn’t enough. Think about how you store information for humans. You can put a spreadsheet in front of someone with thirty tabs and a few cryptic column headers, and a human might still piece it together after some back-and-forth. AI doesn’t get that conversation. It needs datasets arranged in a way that eliminates ambiguity. Labels have to be precise, relationships between fields have to be clear, and the formatting needs to stay predictable.

I once saw a CRM export from a sales team that had well over fifty columns. The headers were mostly two- or three-letter abbreviations, half of which nobody could remember the original meaning of. That’s survivable for a long-time account manager—they know “PRJ_STS” is project status without thinking about it. For Copilot, though, that’s just another opaque string. Unless you explicitly define it in a way the system can map to a known concept, the AI has no context and treats it like an arbitrary label.

This is why metadata matters as much as the core numbers or text. Metadata defines what the field represents, how it’s measured, and how it relates to other parts of the system. The same goes for taxonomies and semantic labeling—organizing data according to a shared vocabulary that is both human-readable and machine-usable. When you map those external fields into the Dataverse schema, you’re giving Copilot a clear set of instructions on how to interpret and retrieve that field when building a response.
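A field map is the simplest form this can take. In the sketch below, `PRJ_STS` is the abbreviation mentioned earlier, while `EST_VAL`, the target names, and the `FieldMeta` structure are hypothetical. Treat it as a pattern for documenting a mapping, not as the Dataverse schema itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class FieldMeta:
    """What a source column means once it is mapped."""
    target: str              # descriptive name in the target schema
    description: str         # what the field represents
    unit: Optional[str] = None  # how it is measured, if applicable


# Hypothetical mapping from cryptic export headers to described fields.
FIELD_MAP: Dict[str, FieldMeta] = {
    "PRJ_STS": FieldMeta("project_status", "Current stage of the project"),
    "EST_VAL": FieldMeta("estimated_value", "Expected deal value", "USD"),
}


def map_record(raw: Dict[str, object]) -> Tuple[Dict[str, object], List[str]]:
    """Rename known columns and report the ones nobody has defined yet."""
    mapped: Dict[str, object] = {}
    unmapped: List[str] = []
    for key, value in raw.items():
        meta = FIELD_MAP.get(key)
        if meta is None:
            unmapped.append(key)
        else:
            mapped[meta.target] = value
    return mapped, unmapped
```

Returning the unmapped columns separately is a deliberate choice: it turns "half the headers nobody remembers" into a visible to-do list instead of letting opaque strings flow through unnoticed.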

Without that mapping, you can run into a different sort of failure. You might spend hours on prompt engineering—carefully writing queries to get exactly what you want—only to find Copilot still can’t produce a meaningful answer. In many cases, the issue isn’t the prompt at all. It’s that the underlying data doesn’t line up with the way the AI parses the question. The field exists, but it isn’t stored, named, or connected in a way that the model can pull into its reasoning.

There’s a constant balancing act here between designing storage for people and designing for AI. Human-oriented storage might prioritize readability, grouping, or custom naming conventions. AI-oriented storage cares about consistency, clarity, and relational mapping. Sometimes you can have both; other times, you may need to introduce parallel structures or additional metadata layers so each type of “user”—human or AI—gets the format they operate best in.

When you aim for AI readability, you’re not just making it easier for Copilot to grab data. You’re also reducing the room for misinterpretation. If there’s only one “status” field defined in the schema mapping, Copilot can’t mistakenly pull a same-named field from a different table. That cuts down on those moments where the summary sounds confident but doesn’t match reality.
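You can check for that kind of collision before it causes trouble. This sketch assumes the schema is available as a simple dictionary of table names to column names, and the table and column names are invented. It flags any column name that appears in more than one table, ignoring case.

```python
from collections import defaultdict
from typing import Dict, List


def ambiguous_fields(schema: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Map each column name used by more than one table to those tables."""
    owners: Dict[str, List[str]] = defaultdict(list)
    for table, columns in schema.items():
        for column in columns:
            owners[column.lower()].append(table)
    return {
        column: sorted(tables)
        for column, tables in owners.items()
        if len(tables) > 1
    }


schema = {
    "opportunity": ["opportunity_id", "status"],
    "project": ["project_id", "Status"],
}
collisions = ambiguous_fields(schema)
```

With this input, `collisions` reports that `status` lives in both `opportunity` and `project`, which is a cue to rename one of them or disambiguate it in the mapping.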

Clean, structured, consistently labeled data flips the switch from “dataset Copilot happens to have” to “dataset Copilot genuinely understands.” And when it understands, you get recommendations that reflect the actual context you’ve worked so hard to feed into the system. Otherwise, you’re just handing over a pile of facts and hoping the connections will appear on their own.

Once your datasets are structured this way, you’ve removed one of the AI’s biggest blind spots. But connected, clean, and mapped data isn’t a one-time job—it needs ongoing monitoring to keep that understanding from degrading as your systems evolve and your workflows change.
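One lightweight form of that monitoring is a drift check: compare the columns your mapping expects with the columns the source actually delivers. The function below is a sketch with a hypothetical name and invented column names. How you obtain the two sets depends entirely on your own systems.

```python
from typing import Dict, List, Set


def schema_drift(expected: Set[str], actual: Set[str]) -> Dict[str, List[str]]:
    """Report columns that disappeared and columns that appeared unannounced."""
    return {
        "missing": sorted(expected - actual),
        "unexpected": sorted(actual - expected),
    }


report = schema_drift(
    expected={"project_status", "estimated_value", "close_date"},
    actual={"project_status", "estimated_value", "deal_stage"},
)
```

Here `report` lists `close_date` as missing and `deal_stage` as unexpected. Run on a schedule, a check like this catches a renamed column before it quietly drops out of the answers.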

Conclusion

Copilot’s real value doesn’t come from some hidden algorithmic magic. It comes from the quality, relevance, and structure of the data you put in. If you feed it generic, you get generic. If you give it well-mapped, business-specific context, you get responses that sound like they came from someone who’s worked in your company for years.

So map your data flows. Find the datasets that truly drive decisions. Secure them, clean them, and integrate them. The AI future in CRM and ERP won’t be won by those with the shiniest features, but by those who teach their Copilot their business language.