Summary

Choosing between Copilot Studio and Azure AI Foundry comes down to knowing when speed wins and when control matters. In this episode, I dig into why most bots act like parrots: they sound smart but lack grounding in your actual business data. What you need is Retrieval Augmented Generation (RAG), which combines search with an LLM so your bot answers from your tenant, not the internet at large.

We’ll compare two Microsoft platforms: Copilot Studio, the low-code tool for fast prototypes, and Azure AI Foundry, the developer-focused environment with full control. You’ll learn how they differ in connectors, governance, model tuning, and auditability, and when to escalate from one to the other.

By the end, you’ll have a framework (Explore, Scale, Govern) for deciding which to use when, and for avoiding the gaps in your AI rollout that bite once the stakes get higher.

What You’ll Learn

Why most bots fail: they lack grounding in your real data

How RAG (search + LLM) changes the trust equation

Key strengths & tradeoffs of Copilot Studio (fast, low-code, limited control)

Key strengths & tradeoffs of Azure AI Foundry (full control, model choice, pipelines, audit)

The three-tier lifecycle: Explore → Scale → Govern

Hidden gotchas: locked-down model controls, cost creep, connector limits, governance gaps

When and how to migrate from Studio to Foundry

Ever notice your shiny AI bot knows everything—except the stuff your team actually needs? That’s because most copilots are parrots with internet degrees. Powerful models, sure, but without grounding they chase trivia instead of your business data. What you really want is Retrieval Augmented Generation—RAG, not the shredded T-shirt kind. RAG = search + LLM: the model writes answers only after searching indexed company content like SharePoint, Dataverse, or OneDrive.

That’s the key difference between a demo that looks clever and a system you’d actually trust. And it sets up the fight ahead—Copilot Studio versus Azure AI Foundry. Subscribe at m365.show for the cheat sheet.

Why Most Bots Are Just Fancy Parrots

Most bots look impressive in a demo, but ask them something real—like company policy or project status—and they crumble. Here’s the problem: they’re just large language models with no wiring into your tenant data. They’re experts at making up answers that *sound* official, yet those answers don’t help your business. You wanted the PTO policy from HR; it handed you a generic blurb about “work-life balance” scraped off the internet. Great pep talk, but useless in production. The root cause? The bot isn’t pulling from the same content your team actually works in day to day.

The real fix is Retrieval Augmented Generation, or RAG. Sounds like laundry day, but think of it as a combo move: search plus a large language model. Instead of letting the model freestyle, you put a search pipeline in front of it. The search step pulls relevant content from indexed sources inside your environment (SharePoint, OneDrive, Dataverse, or even that Teams site everyone swore they’d archive in 2019), and the model writes its response from what was retrieved. With that setup, the bot finally stops improvising and starts acting like it belongs in your tenant.
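To make the search-then-generate flow concrete, here is a minimal Python sketch. It assumes a tiny in-memory index and scores documents by shared keywords; real pipelines (including whatever Copilot Studio or Azure AI Foundry run internally) use semantic or vector search, and the `Doc`, `retrieve`, and `build_grounded_prompt` names are hypothetical. It stops at building the grounded prompt rather than calling any specific model API.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    source: str  # label only, e.g. "SharePoint"; no real connector is involved
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercased words with surrounding punctuation stripped.
    words = (w.strip(".,?!:;").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, index: list[Doc], k: int = 2) -> list[Doc]:
    # Rank documents by how many words they share with the query; drop non-matches.
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in index]
    matches = [(score, d) for score, d in scored if score > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in matches[:k]]


def build_grounded_prompt(query: str, docs: list[Doc]) -> str:
    # The model is told to answer only from the retrieved passages and to cite them.
    if not docs:
        return f"No indexed source matched. Say you don't know.\nQuestion: {query}"
    context = "\n".join(f"[{d.doc_id}] ({d.source}) {d.text}" for d in docs)
    return (
        "Answer using ONLY the sources below and cite their IDs. "
        "If they don't cover the question, say so.\n"
        f"Sources:\n{context}\nQuestion: {query}"
    )


index = [
    Doc("hr-001", "SharePoint", "Employees accrue 20 days of PTO per year."),
    Doc("it-014", "OneDrive", "Reset your password from the self-service portal."),
]
question = "How many PTO days do employees get?"
print(build_grounded_prompt(question, retrieve(question, index)))
```

The design choice that matters is that the model only ever sees retrieved passages plus an instruction to cite them, which is what keeps the answer anchored to your tenant instead of its training data.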

Without RAG, the risks pile up fast. A plain LLM is trained on internet mush. Ask it about HR leave policy, and it might give you something that sounds correct but is completely wrong. That’s not just embarrassing; it’s dangerous. You don’t want a shiny chatbot spitting out invalid compliance info to thousands of employees before HR even knows it happened. Microsoft Digital ran into this exact risk when building HR and IT copilots. Their solution? Tag authoritative sources and extend connectors in Studio to cut down the bad answers. That’s the real-world play: RAG isn’t just theory, it’s the difference between a bot you roll out and a bot you quietly turn off.

Here’s the other piece of the puzzle: access control. RAG isn’t just about better search; it’s about safe search. Think of it like a nightclub bouncer. The system does the lookup, but before any fact gets into the answer, the bouncer checks the user’s ID. Finance sees finance data, sales sees sales collateral, and nobody gets a sneak peek at board memos they don’t have rights to. Proper RAG plus access control stops most of the messy oversharing between teams, but only if permissions and indexing are wired in correctly. That caveat is critical: get permissions wrong and the bot leaks data; get indexing wrong and you’re right back to a hallucination engine with corporate branding.

To make it concrete, picture two employees: one in sales, one in finance. They both use the same bot to ask about quarterly numbers. Sales sees the sanitized public report; finance sees the detailed accounts tucked away in their secure folder. Same index, same bot, totally different answers because of the bouncer at the door. That identity‑aware retrieval is what closes the trust gap for CIOs who hear the phrase “bring your own AI” and instantly picture auditors lining up outside their office.
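Here is a rough sketch of that bouncer, assuming each indexed document carries a set of allowed groups copied from its source permissions at index time. The `SecuredDoc` type and both functions are hypothetical illustrations, not a Microsoft API; the point is that trimming happens before matching or prompting, so restricted text never reaches the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SecuredDoc:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # assumption: copied from source permissions when indexed


def trim_by_permission(docs: list[SecuredDoc], user_groups: set[str]) -> list[SecuredDoc]:
    # The "bouncer": keep only documents that share at least one group with the user.
    return [d for d in docs if d.allowed_groups & user_groups]


def permitted_sources(query: str, index: list[SecuredDoc], user_groups: set[str]) -> list[str]:
    # Trim FIRST, then match, so restricted text can never be ranked or quoted.
    query_words = set(query.lower().split())
    return [
        d.doc_id
        for d in trim_by_permission(index, user_groups)
        if query_words & set(d.text.lower().split())
    ]


index = [
    SecuredDoc("q3-public-summary", "quarterly revenue summary", frozenset({"sales", "finance"})),
    SecuredDoc("q3-detailed-ledger", "quarterly revenue detailed ledger", frozenset({"finance"})),
]
print(permitted_sources("quarterly revenue", index, {"sales"}))    # ['q3-public-summary']
print(permitted_sources("quarterly revenue", index, {"finance"}))  # ['q3-public-summary', 'q3-detailed-ledger']
```

Same index, same query, different source lists, which mirrors the sales-versus-finance example.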

Bottom line: RAG is what turns a parrot into a real assistant. Done right, you get answers drawn from your tenant, filtered through your permissions, and grounded in sources that exist. Done sloppy, you’re just babysitting another hallucination machine wearing a slick UI.

And here’s where things get interesting. Both Copilot Studio and Azure AI Foundry use RAG ideas, but the way they hook in permissions and search pipelines is very different. One leans on speed and simplicity, the other on control and depth. So, let’s start with the faster option—the one promising quick wins without touching a line of code.

Copilot Studio: Quick Wins With Training Wheels

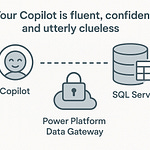

Copilot Studio is where Microsoft makes good on the promise of quick wins. It’s their low‑code playground for building copilots, and honestly, it’s shockingly fast to get something working. You log in, pick a starter template, hook it up to your data with one of more than 1,000 connectors (SharePoint, Dynamics, ServiceNow, Excel in OneDrive, etc.), and suddenly you’ve got a chatbot answering questions like it’s been in the company for years. No code, no build chains, no dev backlog—it just works. The first time you try it, it feels suspiciously like magic.

The real draw here is time‑to‑value. You’re not waiting half a year to see if it sticks. I’ve seen small IT teams roll out live helpdesk bots in under two weeks, mostly by mapping existing SharePoint pages into conversational flows. The connectors did the actual work; the team just pointed the bot at the right content. And end‑users? They either didn’t realize or didn’t care it wasn’t a person on the other end—huge compliment if you’ve dealt with IT inbox turnaround times. Passed the smell test right out of the gate.

But speed comes with trade‑offs. Studio intentionally hides most of the advanced dials. You don’t get access to temperature sliders, top‑p tweaks, prompt versioning, or evaluation gates. In plain English: you can’t control how “creative” it is, you can’t A/B test prompts, and you can’t lock down guardrails to stop drift over time. Microsoft did this on purpose, because keeping it low‑code means keeping the complexity out. Still, for anyone used to calling a model API directly, it feels a little like driving a car where the manufacturer welded the hood shut. You’ll get from point A to point B, but don’t ask about turbo tuning.
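For readers who haven’t used those dials, this sketch shows what temperature and top-p do to a model’s next-token probabilities. It is the textbook math (temperature-scaled softmax plus nucleus filtering) in plain Python for illustration; it is not how any particular Microsoft service implements sampling, and treating temperature 0 as greedy decoding is a common convention rather than a guarantee.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    # Dividing logits by temperature before softmax: below 1 sharpens, above 1 flattens.
    # temperature == 0 is treated as greedy decoding (all probability on the top token).
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float) -> list[float]:
    # Nucleus filtering: keep the most likely tokens until their mass reaches top_p, then renormalize.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept: set[int] = set()
    cumulative = 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


logits = [2.0, 1.0, 0.1]
for t in (0, 0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
print(top_p_filter([0.6, 0.3, 0.1], top_p=0.85))
```

Lower temperature concentrates probability on the top token, which is why setting it to zero is the usual move for consistent answers; top-p trims the long tail of unlikely tokens.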

Now, Microsoft has beefed it up with better brains under the hood. Recent updates dropped GPT‑5 into Copilot Studio along with smart model routing, which means the platform can automatically switch between fast responses and deeper reasoning depending on the question. Out of the box, that’s good news: low‑code bots suddenly sound sharper without any config. But even with GPT‑5 inside, you still can’t touch the sampling parameters. That’s the design choice: keep it accessible, not adjustable.
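Microsoft hasn’t published how Copilot Studio’s router decides, so the following is only a hypothetical heuristic to illustrate the idea: short lookups go to a fast tier, long or analytical questions go to a reasoning tier. The model names `fast-model` and `reasoning-model`, the keyword list, and the 40-word threshold are all assumptions.

```python
import re

# Hypothetical signals that a question needs multi-step reasoning.
REASONING_WORDS = {"why", "compare", "explain", "analyze", "tradeoff", "tradeoffs"}


def route_model(question: str, long_question_words: int = 40) -> str:
    # Long or analytical questions go to the slower reasoning tier; short lookups stay on the fast tier.
    words = re.findall(r"[a-z0-9']+", question.lower())
    if len(words) >= long_question_words or REASONING_WORDS & set(words):
        return "reasoning-model"
    return "fast-model"


print(route_model("What is the VPN address?"))
print(route_model("Why did Q3 margins drop compared to Q2?"))
```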

Here’s a metaphor I like: Copilot Studio is Ikea furniture. You follow a flat‑pack set of instructions and end up with something that looks right and functions fine. It’s brilliant for the price and easy to assemble. But move it once, or try to scale it past that one prototype, and the cracks show up quick. The screws wiggle, the panels bow, and suddenly you’re living with a chatbot everyone uses—but nobody dares update.

To its credit, Microsoft added guardrails at this level too. The “Authoritative Source” badge is a lifesaver, because without it, every response looks equally legitimate to the average employee. That SharePoint FAQ written in 2009 carries the same weight as the official HR handbook updated this morning. With authoritative tagging baked in, at least you can show, “This came from HR, ignore the rest.” Microsoft Digital leaned hard on this in their own HR and IT bots because without it, employees were taking creative model answers as policy gospel—and then flooding real help desks with tickets to double‑check.
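A minimal sketch of the idea behind authoritative tagging, assuming an admin-maintained list of official source prefixes: boost their relevance score so they outrank stale pages, and label every result so users can tell the difference. The URL, the boost factor, and the function name are hypothetical; this is not how Copilot Studio implements its badge.

```python
from dataclasses import dataclass

# Hypothetical admin-maintained list of official source prefixes.
AUTHORITATIVE_PREFIXES = ("https://contoso.sharepoint.com/sites/HR-Policies/",)


@dataclass
class Hit:
    url: str
    score: float  # relevance from the search step


def rank_with_authority(hits: list[Hit], boost: float = 2.0) -> list[tuple[str, str]]:
    # Boost authoritative sources so official pages outrank stale ones, and label every result for the UI.
    ranked = []
    for h in hits:
        authoritative = h.url.startswith(AUTHORITATIVE_PREFIXES)
        ranked.append((h.score * boost if authoritative else h.score, authoritative, h.url))
    ranked.sort(key=lambda t: t[0], reverse=True)
    return [(url, "Authoritative" if auth else "Unverified") for _, auth, url in ranked]


hits = [
    Hit("https://contoso.sharepoint.com/sites/OldWiki/pto-2009", 0.9),
    Hit("https://contoso.sharepoint.com/sites/HR-Policies/pto", 0.6),
]
print(rank_with_authority(hits))
```

Even though the 2009 wiki page scores higher on raw relevance, the boosted HR policy page comes first and carries the label.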

The limits get obvious when you step outside Microsoft land. Yes, there are more than 1,000 connectors, but they aren’t all plug‑and‑play miracles. Want to hit Salesforce or SuccessFactors? Doable, but not smooth. Microsoft themselves had to extend and enhance their ServiceNow and SuccessFactors connectors during internal rollouts—metadata extensions and custom API shaping—because off‑the‑shelf connectors weren’t enterprise‑ready. That’s where the “duct‑taped plumber at 2 a.m.” feeling kicks in. You think you’ve closed one gap, then find three more dripping. With no deep pipeline control, the patches often feel like hacks.

Which brings us to the obvious truth: Studio is perfect for lightweight cases. Internal IT and HR bots, team‑facing FAQs, basic guest Q&A, or anything you want live in Teams and Outlook with minimal fuss—that’s where it shines. It’s a fantastic proving ground to show leadership a working bot quickly and keep them invested. But don’t mistake it for an enterprise platform. Once the conversation turns to compliance checks, multi‑system orchestration, or tenant‑level governance, Studio starts to buckle. And the same speed that impressed everyone on day one becomes a liability when you hear the words “security review” or “audit trail.”

Bottom line? Copilot Studio gets you in the game faster than any other option, but you’re pedaling with training wheels. That’s by design—Microsoft built it to make AI approachable for business users and to shortcut pilots. But if your roadmap reaches into regulated industries, private data lakes, or cross‑platform compliance, you’ll quickly need something sturdier. And that brings us to the other side of the spectrum—the heavyweight option built not for hobbyists, but for enterprises that need full control.

Azure AI Foundry: The Enterprise AI Factory

Azure AI Foundry is where the training wheels come off. Think less “weekend project,” more “enterprise factory floor.” If Studio is Ikea furniture, Foundry is the machine shop where you don’t just build the table—you cast the bolts yourself. It’s a code‑first environment, unapologetically so, and it exposes a massive model catalog: 11K+ models, covering the GPT‑5 family, open‑source weights, plus vision and audio engines. You can pull from big names like Mistral, Cohere, Meta, Hugging Face, and NVIDIA. It’s a buffet, but instead of dragging and dropping, you’re wiring everything into data pipelines and governance layers while muttering at YAML files.

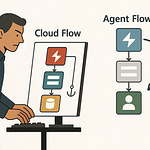

Foundry makes no effort to hide its audience. It assumes you speak Python, live in GitHub, and don’t panic when someone says “CI/CD pipeline.” In return, you get full lifecycle control: pick models, fine‑tune them, prompt‑test them, and promote them through dev, test, and prod with proper MLOps. In plain English, you finally get to stop duct‑taping flows together and can build a real release process—auditing, rollbacks, and all.

With control comes cost in time and skill. You’re not slapping together a chatbot over a long weekend here. Foundry makes you crawl through every layer of the wiring. The payoff? Absolute precision. Every parameter is exposed. You can drop creativity to zero, cap tokens, and measure hallucination rates like you’re running lab trials instead of casual demos. When governance calls—and you know they will—you’re not hand‑waving: you’ve got audit logs, evaluation reports, and explainable outputs.
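As a sketch of what measuring hallucination rates can look like, the code below runs an offline test set through a crude groundedness check and blocks promotion when too many answers are unsupported. The word-overlap heuristic, the 0.6 overlap threshold, and the 5% limit are assumptions for illustration; Foundry ships its own evaluation tooling, and this is not its API.

```python
import re


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def is_grounded(answer: str, sources: list[str], min_overlap: float = 0.6) -> bool:
    # Crude lexical check: each sentence must share at least min_overlap of its words with the sources.
    source_words = set().union(*(_words(s) for s in sources))
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _words(sentence)
        if words and len(words & source_words) / len(words) < min_overlap:
            return False
    return True


def hallucination_rate(cases: list[tuple[str, list[str]]]) -> float:
    # cases: (answer, retrieved sources) pairs from an offline test set.
    if not cases:
        raise ValueError("need at least one test case")
    failures = sum(1 for answer, sources in cases if not is_grounded(answer, sources))
    return failures / len(cases)


def promotion_gate(cases: list[tuple[str, list[str]]], max_rate: float = 0.05) -> bool:
    # Allow promotion from test to prod only when the unsupported-answer rate is low enough.
    return hallucination_rate(cases) <= max_rate


policy = "Employees accrue 20 days of PTO per year."
cases = [
    ("Employees accrue 20 days of PTO per year.", [policy]),
    ("Employees get unlimited vacation and a free car.", [policy]),
]
print(hallucination_rate(cases), promotion_gate(cases))
```

A lexical check like this is easy to fool; in practice you would swap in a model-based groundedness evaluator, but the gating logic around it stays the same.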

Here’s the quick list so it lands. Foundry gives you model choice and fine‑tuning, grounding in your data lakes, MLOps with proper pipelines, CI/CD for deployment, and full telemetry and audit trails. That’s what keeps compliance folks from breathing down your neck while still giving your devs room to innovate. And here’s the trigger line: choose Foundry when your bot touches sensitive data, when you need explainability, or when it has to stitch across multiple enterprise systems. If sovereignty and trust matter, this is the platform.

I’ve seen Foundry nail cases Studio couldn’t touch. One client needed to expose sensitive research docs but track exactly which employee saw what, down to the paragraph. In Foundry, we indexed the private data lake, hooked it to Azure AI Search (formerly Azure Cognitive Search), and logged every query back to user identity and document ID. Compliance didn’t just nod; they smiled, because they finally had visibility that passed audit in black and white. Good luck trying that with Studio’s out‑of‑the‑box connectors.
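The audit piece of that story can be sketched as one structured log line per retrieval, tying user identity to the documents and paragraphs that were returned. Field names like `user_id` and `chunk_ids` are hypothetical, and in a real deployment you would ship these records to an append-only store rather than rely on Python’s `logging` module alone.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Optional

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("rag.audit")


def audit_record(user_id: str, query: str, doc_ids: list[str],
                 chunk_ids: Optional[list[str]] = None) -> str:
    # One JSON line per retrieval: who asked, what they asked, which documents and paragraphs came back.
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "query": query,
        "doc_ids": list(doc_ids),
        "chunk_ids": list(chunk_ids or []),
    }
    line = json.dumps(record, sort_keys=True)
    audit_log.info(line)
    return line


audit_record("alice@contoso.com", "Phase 2 trial results?", ["research-042"], ["research-042#p7"])
```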

Metaphor time: Foundry is like leasing a Formula 1 garage. Every machine, every sensor, every part is included. But here’s the catch: you need the pit crew who knows how to run it. You don’t just “spin up a car.” You orchestrate the engines, manage telemetry, and tweak builds for performance at scale. Get it right, and you’re lapping the competition. Get it wrong, and you’ll stand next to a million‑dollar toolbox while your car’s still on blocks.

The real edge is orchestration. Foundry isn’t just about tossing prompts at a model. You’re stitching together agents, APIs, search, and evaluation layers into a single managed flow. It can hit REST endpoints mid‑conversation, ground itself in structured and unstructured sources, and manage the entire lifecycle with continuous integration. That flexibility is why industries like manufacturing, finance, and healthcare treat Foundry as the serious option. It’s not locked to Microsoft‑only connectors—it’s built for the messy, multi‑system sprawl that CIOs pretend is “just a quick integration.”

The common adoption pattern proves the point. Most businesses don’t start here. They dabble in Studio because it delivers a shiny pilot fast. Then someone in risk or compliance asks about data lineage, and suddenly the team is scrambling for auditability Studio never promised. That’s when the project escalates to Foundry—it’s either migrate or shut it down.

So yes, Foundry is the heavy‑duty play. Slower to spin up, steeper learning curve, bigger cost in time and skills. But you get every dial and every safeguard that Studio hides. And you’re not forced to pick one over the other. Microsoft itself is pushing a hybrid model: Studio as the easy interface up front, Foundry as the engine under the hood, tied together with the coming Microsoft 365 Agents SDK. That way you get speed where it matters, control where it counts.

Which raises the real question: if you’ve got both tools on the shelf, how do you actually decide when to use which—without sinking into endless debates? That’s where we need a simple framework, so you can focus less on analysis paralysis and more on delivering results.

The Three-Tier Framework: Explore, Scale, Govern

The easiest way to bring order to the Studio vs. Foundry debate is to stop treating it like a binary choice. Microsoft insiders already frame it as a three‑tier lifecycle: Explore, Scale, Govern. Think of it less as “pick a tool and pray” and more as a progression—where each tier tells you when to move up, not if.

Explore is always the starting point, and Copilot Studio owns this space. This is the tier where you hack together quick bots to see if an idea has any business value. HR FAQ bot? IT password reset helper? You can light those up in under two weeks with connectors and zero code. The point isn’t polish; it’s proof. You’re validating whether the thing solves real problems without draining a project budget. The starter checklist is simple: identify your top three use cases, prototype them in Studio in one to two weeks, and measure whether people actually use the bot. Bonus tip: track token consumption so you spot runaway costs before you scale.

But here’s where migration trigger number one shows up: if a bot suddenly needs to touch non‑M365 systems, like SAP or other ERP platforms and finance databases, Studio alone won’t cut it. Migration trigger two: if hallucinations aren’t just funny but risky (like answering compliance questions with made‑up policies), it’s time to move to Scale.

Scale is where most teams feel the pinch. You realize the pilot is helpful, but now it needs real infrastructure. Imagine trying to tape your HR bot into ServiceNow with low‑code connectors—you’re basically driving a car held together by Velcro. This is where Azure AI Foundry enters. Foundry gives you what Studio hides: creativity controls, evaluation gates to catch hallucinations, data lake connectors, and robust pipelines. The tradeoff is you need the skills and patience to wire it all in. Most companies run a hybrid approach here—keep the fast turnarounds in Studio for FAQs and lightweight flows, but shift serious workloads to Foundry where security and precision matter.

And then comes the big one: Govern. This tier is where the AI isn’t a toy anymore—it’s plugged into sensitive workflows, or even customer‑facing systems. If audits, SLAs, or regional data controls are in the mix, Foundry stops being optional. This is migration trigger three—when the conversation moves to sovereignty, explainability, or record‑level access logs, you’ve got no choice but to anchor the project inside Foundry. Foundry covers you with audit trails, telemetry, pipeline gating, and data governance Microsoft 365 connectors were never designed for. By this stage, Studio simply isn’t in the room.
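The three triggers can be condensed into a small decision helper. This is only a sketch of the framework as described in this episode; the `BotProfile` fields are hypothetical yes/no questions you would answer per bot, and real decisions will also weigh cost and team skills.

```python
from dataclasses import dataclass


@dataclass
class BotProfile:
    touches_non_m365_systems: bool    # trigger 1: ERP such as SAP, finance databases
    wrong_answers_are_risky: bool     # trigger 2: e.g. compliance or policy questions
    needs_audit_or_sovereignty: bool  # trigger 3: audits, SLAs, regional data controls, access logs


def recommend_tier(p: BotProfile) -> str:
    # Check the strictest trigger first: Govern outranks Scale, which outranks Explore.
    if p.needs_audit_or_sovereignty:
        return "Govern: anchor in Azure AI Foundry"
    if p.touches_non_m365_systems or p.wrong_answers_are_risky:
        return "Scale: add Azure AI Foundry alongside Copilot Studio"
    return "Explore: prototype in Copilot Studio"


print(recommend_tier(BotProfile(False, False, False)))
print(recommend_tier(BotProfile(True, False, False)))
print(recommend_tier(BotProfile(True, True, True)))
```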

Here’s an easy analogy: Explore is like tearing around an empty parking lot to see if the car drives. Scale is merging onto the freeway with other drivers, meaning mistakes actually hurt. Govern is the emissions test—every sensor plugged in to prove it’s legitimately road‑safe. Three stages, each with its own purpose, none of them optional if you want the end result to survive outside a demo.

For proof that this pattern works, just look at Microsoft’s own internal playbooks. Their HR team built entry‑level bots in Studio to serve FAQs and free up ticket queues. But when the same bots started pulling sensitive region‑specific HR files, compliance raised the flag. That forced the migration into Foundry—so they could inject stronger access checks, guarantee audit logs, and make sure sensitive data was partitioned correctly. Nothing failed—scope just grew beyond what Studio was designed to handle.

The other piece worth calling out is the bridge Microsoft is building. The upcoming Microsoft 365 Agents SDK is meant to connect Studio and Foundry more tightly, so you can start lightweight without fear you’ll be painted into a corner. Build the prototype in Studio, extend it with Foundry’s model routing, connectors, and governance, all staged in one lifecycle. It’s a rare moment where Microsoft admits business users and pro‑devs aren’t enemies, and gives you a pipeline where they actually collaborate.

So what’s the takeaway? Don’t obsess about which tool you “should” pick on day one. Focus instead on which tier you’re in. Explore = Studio to validate fast. Scale = add Foundry where complexity or non‑M365 data enters. Govern = fully Foundry for compliance, audits, and enterprise‑grade workflows. The framework keeps you from overbuilding that first FAQ bot, and underbuilding the ones that end up in customer‑facing systems.

Of course, none of this means the platforms are perfect. The framework looks neat in PowerPoint, but in reality each tier brings its own blind spots. That’s where the headaches usually start: the parts Microsoft marketing doesn’t exactly highlight in bold on the Ignite slides.

The Hidden Gotchas Microsoft Glosses Over

Let’s talk about the stuff Microsoft doesn’t highlight until you’re already knee‑deep in a rollout: the hidden gotchas. On the demo stage, everything looks glossy—drag, drop, and voilà, instant copilot. But in production, three recurring gaps catch teams off guard.

Gotcha number one: Copilot Studio hides all the model control knobs. No temperature setting, no top‑p slider, no evaluation gates, no prompt versioning. To most IT folks building a pilot FAQ bot, that sounds like harmless trivia. But those levers decide whether your agent gives consistent results or behaves like it’s pulling fortunes out of a vending machine. Without those dials, you can’t reduce randomness or lock down explainability. Remedy: if reliability matters, offload those critical workloads to Foundry. There, you can set temperature to zero for consistency, freeze prompts, and run every output through an evaluation gate before it reaches users. Studio is fine for lightweight FAQs, but don’t kid yourself that it scales cleanly into HR or compliance workflows.
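Here is a minimal sketch of two of those remedies together, under stated assumptions: the system prompt is frozen and versioned by its hash, and an answer is released only if it cites at least one source and every citation matches a document that was actually retrieved. The `[doc-id]` citation format, the fallback text, and the function name are hypothetical, not a Foundry API.

```python
import hashlib
import re

SYSTEM_PROMPT = "Answer only from the provided sources and cite each one as [doc-id]."
# Version the frozen prompt by its hash so any edit shows up as a new version in logs and reviews.
PROMPT_VERSION = hashlib.sha256(SYSTEM_PROMPT.encode("utf-8")).hexdigest()[:12]

FALLBACK = "I couldn't find this in an approved source. Please contact the help desk."


def gate_response(answer: str, retrieved_ids: set[str]) -> str:
    # Release the answer only if it cites at least one source and every citation was actually retrieved.
    cited = set(re.findall(r"\[([^\[\]]+)\]", answer))
    if not cited or not cited <= retrieved_ids:
        return FALLBACK
    return answer


print(PROMPT_VERSION)
print(gate_response("Employees accrue 20 days of PTO [hr-001].", {"hr-001"}))
print(gate_response("PTO is unlimited.", {"hr-001"}))
```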

Gotcha number two: both Studio and Foundry use consumption‑based pricing, and that’s where many projects quietly bleed budget. “Pay for what you use” sounds painless until you’re processing thousands of queries and realize token usage and model mix dictate your bill. In Studio, cost creep tends to blindside teams when usage spikes and they haven’t budgeted for governance or licensing on top of token spend. Foundry forces you to feel that pain earlier because you’re allocating dev hours, but at least the scale expenses are visible. Remedy: instrument usage metrics from day one. Track token consumption, and route high‑volume or lightweight queries to cheaper models in the catalog. And most importantly, don’t forget the hidden parts of the bill—licensing, governance tooling, custom connectors—all of that affects ROI once you project scale. Budget for it early instead of explaining overruns later.
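A sketch of day-one instrumentation: accumulate tokens per model and raise a flag at 80% of budget instead of after the overrun. The model names and per-1,000-token prices below are placeholders, not real rates, and the sketch ignores that providers usually price prompt and completion tokens differently.

```python
from collections import defaultdict

# Placeholder prices per 1,000 tokens. These are NOT real rates; substitute your own.
PRICE_PER_1K = {"small-model": 0.0005, "large-model": 0.01}


class UsageTracker:
    def __init__(self, monthly_budget: float, warn_ratio: float = 0.8):
        self.monthly_budget = monthly_budget
        self.warn_ratio = warn_ratio
        self.tokens = defaultdict(int)  # model name -> total tokens used

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> None:
        # Simplification: prompt and completion tokens are priced the same here.
        self.tokens[model] += prompt_tokens + completion_tokens

    def spend(self) -> float:
        return sum(PRICE_PER_1K[m] * t / 1000 for m, t in self.tokens.items())

    def needs_attention(self) -> bool:
        # Flag at warn_ratio of the budget, before the overrun rather than after it.
        return self.spend() >= self.warn_ratio * self.monthly_budget


tracker = UsageTracker(monthly_budget=10.0)
tracker.record("large-model", prompt_tokens=700_000, completion_tokens=200_000)
print(round(tracker.spend(), 2), tracker.needs_attention())
```

The same per-model totals also show where routing lightweight queries to a cheaper model would pay off.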

Gotcha number three: connectors. Yes, Studio waves around “more than 1,000 connectors,” but that doesn’t mean they’re enterprise‑ready out of the box. Some are fine for prototypes, like SharePoint, OneDrive, or Dynamics, but others often need metadata tweaks or custom engineering. Microsoft Digital’s own rollout proved it: their team extended ServiceNow and SuccessFactors connectors and added an “Authoritative Source” badge so users could tell official HR policy apart from whatever was rotting in a legacy wiki. It worked, but only because engineers dug in. The remedy: assume you’ll need tuning work. Don’t treat Studio connectors as plug‑and‑play for critical systems. Roadmap time and resources for extending them, or pivot the workload to Foundry, where custom APIs and pipelines are first‑class citizens.

Put simply, the real risks aren’t in flashy demos—they’re in scale. Studio hides the controls you’ll want later, pricing models can surprise you fast, and connectors that look good in a setup wizard often need deeper wiring for the enterprise. None of these gotchas are deal‑breakers if you plan for them. Studio’s simplicity is still great for prototyping and lightweight scenarios, and Foundry’s depth covers compliance and governance. But you have to recognize where the rough edges live and decide if you’ll patch them yourself or escalate before it breaks in front of users.

So here’s the calm takeaway: audit usage early, protect sensitive flows with the right platform, and budget not just for licensing but for governance and connector work. Studio buys you speed. Foundry buys you control. The pain just comes from different angles depending on which you ignore.

And with that perspective, we’re ready to zoom out and land on the essential question: how to make sense of both without overthinking the choice.

Conclusion

Pick your poison wisely, but here’s the short version. Studio is for fast prototypes and Microsoft 365 reach—perfect when you just need proof that a bot saves time. Foundry is for control, models, and governance—it’s where you tune the engine and keep compliance teams from chasing you down the hall. And the real play is stacking them: Explore in Studio, Scale into Foundry, and Govern there when the auditors show up.

Subscribe at m365.show for the cheat sheet, and follow the M365.Show LinkedIn page for livestreams with MVPs who’ve shipped this in production. Realistic plan: start small, measure actual risk and token spend, then graduate to Foundry when compliance or complexity demand it. You’ll save headaches by planning the stack ahead.

And one last thing: please subscribe to the podcast and leave me a review!