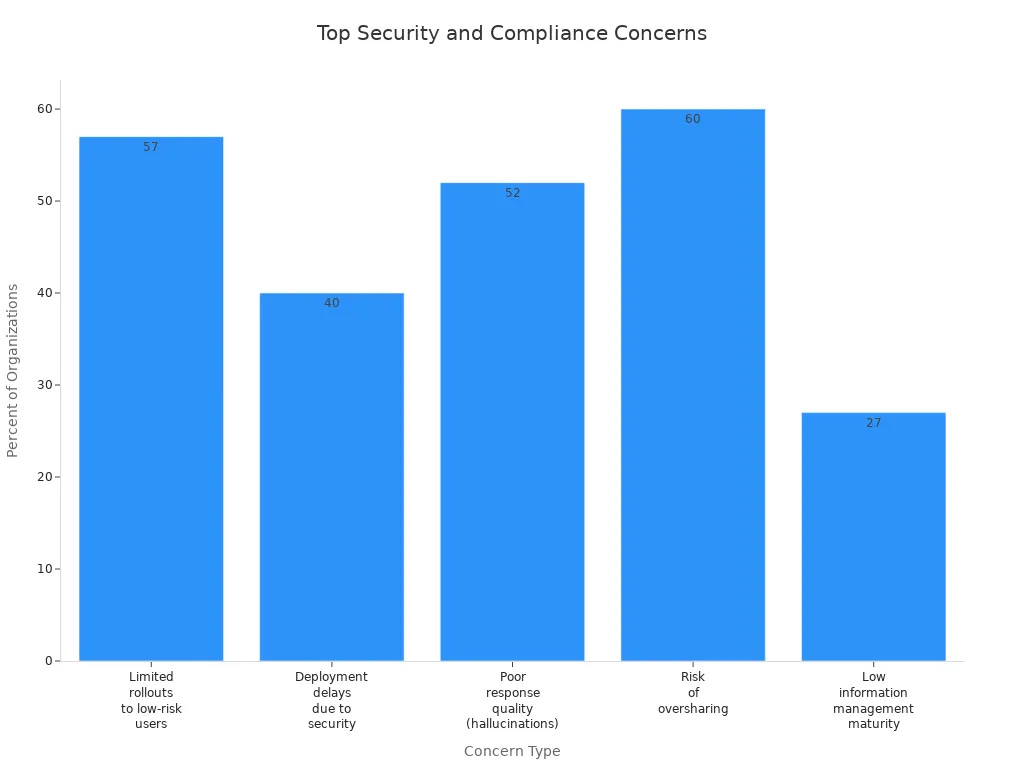

Organizations are adopting AI technologies rapidly to improve how they work. As of 2024, nearly 70% of Fortune 500 companies use Microsoft 365 Copilot, part of a broader shift in which about 75% of surveyed companies have added generative AI to their work. As you plan for these changes, keep in mind the security and compliance problems that come with them. More than 60% of organizations report risks from oversharing and unauthorized access when they start using these tools, which shows the need for strong governance plans.

Key Takeaways

Microsoft 365 Copilot improves productivity by automating routine tasks and surfacing useful insights.

Strong security measures, such as encryption and access controls, keep sensitive data safe and support regulatory compliance.

Regular training and awareness programs teach users to use Copilot safely and responsibly.

Clear governance policies define who can access which data and reduce the risk of oversharing.

Ongoing monitoring and audits strengthen security and compliance and help organizations keep pace with changing regulations.

Microsoft 365 Copilot Overview

Microsoft 365 Copilot changes how you work by adding AI assistance directly to the Microsoft 365 apps you already use. It helps you get more done with less effort, which makes it increasingly important for today’s businesses.

Key Features

Microsoft 365 Copilot has several features that set it apart from other AI tools:

Seamless Integration: Works inside Microsoft 365 apps, keeping data connected.

Advanced Collaboration: Helps with meeting notes, action items, and follow-ups in Teams.

Task Automation: Helps with complex tasks such as analyzing financial data in Excel and drafting content in Word and PowerPoint.

Data-Driven Insights: Draws on your organization’s data through Microsoft Graph to give personalized, context-aware help.

Business-Specific Support: Adapts tone and formatting for professional use.

These features help you work smarter by automating tasks and giving you useful insights.

Integration with Microsoft 365

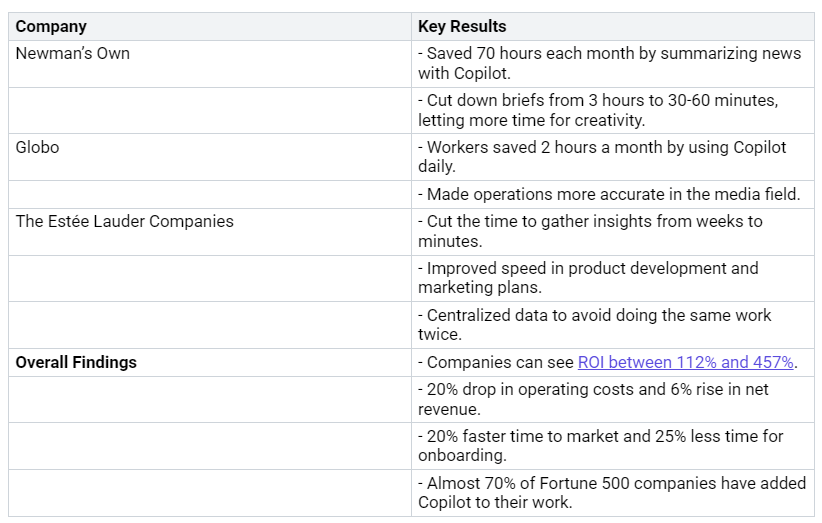

Integrating Copilot into Microsoft 365 can substantially increase productivity, and recent studies of companies using the tool report strong results.

These results suggest that Microsoft 365 Copilot boosts individual productivity and helps organizations work more efficiently.

Security Architecture of Copilot

Microsoft 365 Copilot is built on a layered security architecture designed to keep your organization’s data safe. It combines data protection mechanisms with user access controls to secure your information and support compliance.

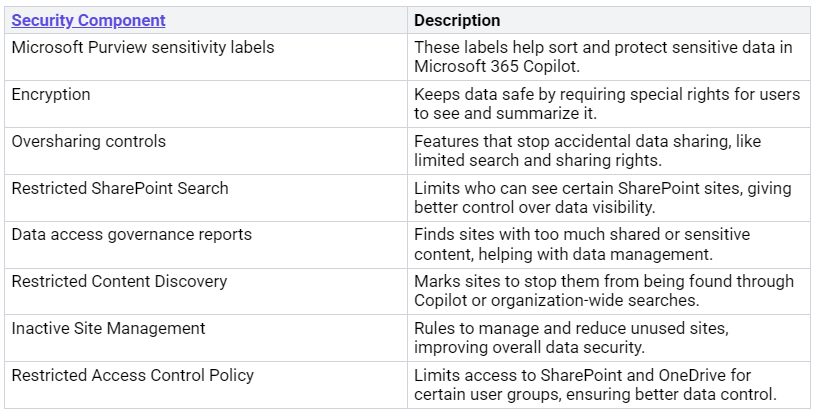

Data Protection Mechanisms

Microsoft 365 Copilot protects your data with several complementary security components.

Encryption is central to this design. Customer content is encrypted at rest with BitLocker, and data in transit is protected with TLS, so it is shielded from unauthorized access both while stored and while being transmitted.
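Microsoft applies these protections on the service side. Where your own scripts or integrations call Microsoft 365 endpoints, you can apply the same in-transit principle on the client side. The following Python sketch uses only the standard `ssl` module to build a client context that verifies certificates and refuses anything older than TLS 1.2; the function name `make_client_context` is illustrative, not part of any Microsoft tooling.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Return a client TLS context for calling HTTPS endpoints (illustrative helper)."""
    # create_default_context() turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse TLS 1.0 and 1.1 handshakes; only TLS 1.2 or newer is negotiated.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


if __name__ == "__main__":
    ctx = make_client_context()
    print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The context can be passed to a standard-library client, for example `urllib.request.urlopen(url, context=ctx)`.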

Tenant isolation is another key part of data security in Microsoft 365 Copilot. Copilot uses only data from your current Microsoft 365 tenant. It does not search or share data from other tenants, even when you have guest access to them. This prevents data from leaking between organizations and keeps your sensitive information safe.
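To make the tenant-isolation rule concrete, here is a minimal Python model of it. It is a hypothetical sketch, not Microsoft’s implementation: the `Document` type, its `tenant_id` field, the `grounding_candidates` function, and the tenant names are all assumptions for illustration. The point it shows is that the candidate set is filtered by the signed-in tenant, so guest access elsewhere never widens it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    tenant_id: str  # hypothetical field: the tenant that owns this content
    title: str


def grounding_candidates(docs: list[Document], current_tenant_id: str) -> list[Document]:
    """Keep only content owned by the tenant the user is signed in to.

    Guest (B2B) access to another tenant does not add that tenant's content.
    """
    return [d for d in docs if d.tenant_id == current_tenant_id]


docs = [
    Document("d1", "contoso", "Q3 plan"),
    Document("d2", "fabrikam", "Partner pricing"),  # user is only a guest in fabrikam
    Document("d3", "contoso", "Roadmap"),
]
print([d.doc_id for d in grounding_candidates(docs, "contoso")])  # ['d1', 'd3']
```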

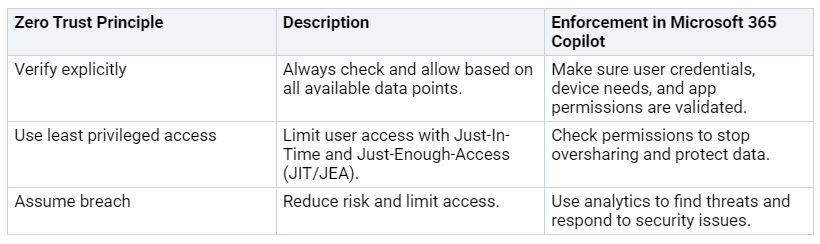

User Authentication Controls

User authentication is another important security layer in Microsoft 365 Copilot. The platform follows Zero Trust principles to ensure that only authorized users can access sensitive data.

These controls help your organization manage access to sensitive data and reduce potential threats. This careful approach to security supports compliance and protects your organization from data breaches.
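Zero Trust means every request is verified explicitly and granted only the least privilege it needs, rather than being trusted because of where it comes from. The Python sketch below models that idea for a single request. It is hypothetical: the `Request` and `Policy` types, the `evaluate` function, and the choice of signals (MFA and device compliance) are illustrative assumptions, not the actual Microsoft Entra or Copilot evaluation logic.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    user: str
    mfa_passed: bool        # hypothetical signal: strong authentication completed
    device_compliant: bool  # hypothetical signal: device meets policy
    resource: str


@dataclass
class Policy:
    # Hypothetical explicit grants: user -> resources they may read.
    grants: dict[str, set[str]] = field(default_factory=dict)


def evaluate(req: Request, policy: Policy) -> tuple[bool, str]:
    """Check every request on its own merits; nothing is trusted by default."""
    if not req.mfa_passed:
        return False, "strong authentication required"
    if not req.device_compliant:
        return False, "device not compliant"
    if req.resource not in policy.grants.get(req.user, set()):
        return False, "no explicit grant (least privilege)"
    return True, "allowed"


policy = Policy(grants={"alice": {"finance/q3.xlsx"}})
print(evaluate(Request("alice", True, True, "finance/q3.xlsx"), policy))   # (True, 'allowed')
print(evaluate(Request("alice", True, False, "finance/q3.xlsx"), policy))  # (False, 'device not compliant')
print(evaluate(Request("bob", True, True, "finance/q3.xlsx"), policy))     # (False, 'no explicit grant (least privilege)')
```

The checks run in a fixed order so the first failing condition explains the denial, which is useful when reviewing access decisions.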

Data Oversharing Risks

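Data oversharing is one of the biggest risks in a Microsoft 365 Copilot deployment. Because Copilot can surface any content a user already has permission to view, overly broad permissions translate directly into overexposed data. Understanding these risks helps you protect important information and stay compliant.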

Sensitivity Labels

Sensitivity labels are one of the most effective ways to reduce oversharing risk. They let you classify sensitive information and apply protection to it.

With sensitivity labels in place, Microsoft 365 Copilot honors your labeling and Data Loss Prevention (DLP) policies and checks prompts and responses against them. For example, a DLP policy can stop Copilot from processing files labeled ‘Highly Confidential.’ This substantially lowers the chance of oversharing.
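The Python sketch below models the two label behaviors described above: a DLP rule that excludes items with a blocked label, and new content inheriting the most restrictive label among its sources. It is a simplified, hypothetical model; the label taxonomy and its ordering, `BLOCKED_LABELS`, and both function names are assumptions for illustration, not Purview configuration.

```python
# Example label taxonomy, ordered from least to most restrictive (assumption).
LABEL_PRIORITY = {"Public": 0, "General": 1, "Confidential": 2, "Highly Confidential": 3}

# Hypothetical DLP rule: items with these labels are excluded from Copilot processing.
BLOCKED_LABELS = {"Highly Confidential"}


def items_copilot_may_use(items):
    """Drop any item whose label is blocked by the DLP rule."""
    return [item for item in items if item["label"] not in BLOCKED_LABELS]


def inherited_label(source_items):
    """New content takes the highest-priority label among its sources (None if unlabeled)."""
    labels = [item["label"] for item in source_items if item.get("label")]
    return max(labels, key=LABEL_PRIORITY.__getitem__, default=None)


items = [
    {"name": "budget.xlsx", "label": "Confidential"},
    {"name": "merger-plan.docx", "label": "Highly Confidential"},
    {"name": "faq.docx", "label": "General"},
]
usable = items_copilot_may_use(items)
print([i["name"] for i in usable])  # ['budget.xlsx', 'faq.docx']
print(inherited_label(usable))      # Confidential
```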

Access Control Issues

Access control problems often cause data oversharing. Here are some common reasons:

Site privacy settings are set to ‘public’ instead of ‘private.’

Default file-sharing options let everyone in the organization access data.

Content has no sensitivity labels applied.

These gaps can expose sensitive information to people who should not see it. Over-provisioning compounds the problem: 95% of permissions in Microsoft 365 go unused, and 90% of users exercise only 5% of the permissions they hold.

The consequences can be serious. Misconfigured privacy settings can expose private data to unauthorized users. Because Copilot makes content easier to find, it can surface documents that users should not see or edit. Unclear access rules can lead to sensitive data being shared with the wrong people. Regular risk assessments and strong data management practices are therefore essential for reducing these risks.
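A regular risk check can start by testing each site against the three common causes listed above and by measuring how many granted permissions go unused. The Python sketch below does both on in-memory data. It is hypothetical: the `Site` fields, the `"organization"` link-scope value, and the function names are assumptions, and in practice the inputs would come from your own SharePoint and permissions reports.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    privacy: str             # "public" or "private"
    default_link_scope: str  # hypothetical values: "organization", "specific_people"
    labeled: bool            # whether a sensitivity label is applied


def oversharing_findings(site: Site) -> list[str]:
    """Return the common oversharing causes that apply to a site."""
    findings = []
    if site.privacy == "public":
        findings.append("site is public; consider making it private")
    if site.default_link_scope == "organization":
        findings.append("default sharing link grants access to the whole organization")
    if not site.labeled:
        findings.append("no sensitivity label applied")
    return findings


def unused_permission_ratio(granted: set[str], used: set[str]) -> float:
    """Share of granted permissions never exercised during the review window."""
    if not granted:
        return 0.0
    return len(granted - used) / len(granted)


sites = [
    Site("HR", "public", "organization", False),
    Site("Legal", "private", "specific_people", True),
]
for s in sites:
    print(s.name, oversharing_findings(s))
print(unused_permission_ratio({"read", "edit", "share", "delete"}, {"read"}))  # 0.75
```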

Governance Strategies for Security

Effective governance strategies are essential for keeping Microsoft 365 Copilot secure and compliant. With strong policies and regular reviews, you can use this powerful tool safely and correctly.

Policy Development

Clear policies are the foundation for managing Microsoft 365 Copilot well.

A unified governance framework across all Microsoft 365 apps gives you a consistent approach to data classification, retention, and access control. Appointing governance champions lets team leaders guide compliance efforts, and regularly reviewing and updating your policies keeps them current as your environment changes.
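One way to picture a unified framework is a single policy table, keyed by sensitivity label, that every app consults for both retention and sharing rules. The Python sketch below illustrates that idea. The `POLICY` table, its retention values, and `check_item` are hypothetical examples, not recommended settings or a Purview data format.

```python
from datetime import date, timedelta

# Hypothetical unified policy: one row per sensitivity label, shared by all apps.
# Retention periods are illustrative only.
POLICY = {
    "General":             {"retention_days": 365,  "external_sharing": True},
    "Confidential":        {"retention_days": 1095, "external_sharing": False},
    "Highly Confidential": {"retention_days": 2555, "external_sharing": False},
}


def check_item(label: str, created: date, shared_externally: bool, today: date) -> list[str]:
    """Evaluate one item against the policy row for its label."""
    rule = POLICY[label]
    issues = []
    if shared_externally and not rule["external_sharing"]:
        issues.append("external sharing not allowed for this label")
    if today - created > timedelta(days=rule["retention_days"]):
        issues.append("past retention period; review for disposal")
    return issues


print(check_item("Confidential", date(2020, 1, 1), True, date(2024, 6, 1)))
print(check_item("General", date(2024, 1, 1), True, date(2024, 6, 1)))  # []
```

Keeping the rules in one table means a change to a label’s policy applies everywhere at once, which is the consistency a unified framework aims for.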

Monitoring and Auditing Practices

Regular monitoring improves security and compliance for Microsoft 365 Copilot. Operational checks and compliance audits confirm that everything runs as intended, and analytics and real-time data provide insights that improve governance and how you use Copilot. Key parts of good monitoring and auditing practice include:

Review performance against compliance requirements and improve continuously.

Use analytics, dashboards, and real-time data for useful insights.

Track and audit Copilot Studio activities, data changes, and user actions.

Microsoft 365 Copilot is designed to help detect unusual activity and breaches quickly. Following Zero Trust principles, it verifies every connection and resource request. This monitoring works alongside tools such as Information Rights Management to keep data use safe, and automated enforcement of security policies lets you respond to threats right away.

To track user activity, you can review audit logs in the Microsoft Purview compliance portal.
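Once audit results are exported to CSV, a short script can summarize Copilot activity per user. The Python sketch below assumes the export has `UserId` and `Operation` columns and that Copilot events use the operation value `CopilotInteraction`; treat both as assumptions and confirm them against the header and values in your own export. The function name and sample rows are illustrative.

```python
import csv
import io
from collections import Counter

# Illustrative export excerpt; column names and values are assumptions.
SAMPLE_EXPORT = """CreationDate,UserId,Operation,AuditData
2024-06-01T09:00:00,alice@contoso.com,CopilotInteraction,{}
2024-06-01T09:05:00,bob@contoso.com,FileAccessed,{}
2024-06-01T09:10:00,alice@contoso.com,CopilotInteraction,{}
2024-06-01T09:20:00,bob@contoso.com,CopilotInteraction,{}
"""


def copilot_interactions_by_user(csv_text: str, operation: str = "CopilotInteraction") -> Counter:
    """Count audit records for the given operation, grouped by user."""
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["Operation"] == operation:
            counts[row["UserId"]] += 1
    return counts


print(copilot_interactions_by_user(SAMPLE_EXPORT))
```

Per-user counts like these give a simple baseline; a sudden spike for one account is a reasonable trigger for a closer look at that user’s activity.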

These governance strategies help keep Microsoft 365 Copilot secure and compliant. A proactive approach to governance reduces risk and protects sensitive data effectively.

Best Practices for Copilot Deployment

Deploying Microsoft 365 Copilot safely requires careful planning. The following best practices help the rollout go smoothly while keeping security and compliance in view.

Training Programs

Training your team is essential to getting the most out of Microsoft 365 Copilot. Include these key elements in your training:

Secure Usage Education: Hold training sessions that stress using Copilot safely. Teach users to avoid prompts that might share sensitive information.

Awareness of Policies: Set clear rules for using AI that cover privacy and ethical guidelines. Make sure everyone knows their duties when using Copilot.

Regular Updates: Give ongoing training to keep users updated on new features and security practices. Regular updates help everyone stay aware of possible risks.

Thorough training helps your team use Copilot effectively while reducing the chance of data exposure.

Continuous Improvement

Continuous improvement is key to maintaining security and compliance in your Copilot deployment. Consider these strategies:

Regular Audits: Check Copilot usage with Microsoft Purview’s audit logs. This helps find any overexposed content and makes sure you follow your data rules.

Feedback Mechanisms: Set up ways for users to report problems or suggest changes. This encourages teamwork and new ideas.

Lifecycle Management: Plan for how to handle sensitive data over time and regularly check access controls. This keeps your data safe as your organization grows.
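Regularly checking access controls can be partly automated with a periodic access review. The Python sketch below flags grants held by users who are no longer active and grants not reviewed within a set interval. It is a hypothetical sketch: the `Grant` type, the `grants_due_for_review` function, and the 90-day default interval are assumptions to adapt to your own policy and data sources.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Grant:
    user: str
    resource: str
    last_reviewed: date


def grants_due_for_review(grants, active_users, today, review_every_days=90):
    """Flag grants held by departed users or not reviewed within the interval.

    The 90-day default is an illustrative assumption, not a recommendation.
    """
    due = []
    for g in grants:
        if g.user not in active_users:
            due.append((g, "user no longer active; revoke"))
        elif today - g.last_reviewed > timedelta(days=review_every_days):
            due.append((g, "review overdue"))
    return due


grants = [
    Grant("alice", "finance-site", date(2024, 5, 1)),
    Grant("bob", "hr-site", date(2024, 5, 15)),       # bob has left the organization
    Grant("carol", "legal-site", date(2023, 12, 1)),
]
for grant, reason in grants_due_for_review(grants, {"alice", "carol"}, date(2024, 6, 1)):
    print(grant.user, grant.resource, reason)
```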

By focusing on continuous improvement, you can adjust to changing security needs and boost the overall effectiveness of Microsoft 365 Copilot.

Future of AI Governance

As AI technology evolves, so do the rules governing its use. Keep up with these changes to stay secure and compliant.

Evolving Regulations

Emerging AI regulations show that strong compliance plans are needed, and regulators are stressing the importance of governance for AI technology. Key points to consider:

FINRA’s Regulatory Notice 24-09 states that its rules, which are intended to be technology-neutral, apply to AI. Firms therefore need AI governance within their supervisory systems under FINRA Rule 3110.

ESMA’s Public Statement on AI says that management bodies are responsible for decisions made with AI tools. This means compliance planning must start as soon as AI is adopted.

Both regulators stress that organizations need strong governance systems to manage AI risks such as data security, bias, and lack of transparency.

To keep up with these changing rules, you should:

Create clear governance rules for using and overseeing AI.

Do regular checks to ensure compliance and accuracy of AI results.

Improve data protection measures to keep sensitive information safe when processed by AI.

Adopting AI tools like Microsoft 365 Copilot requires careful legal review of data privacy and security. A successful rollout needs plans for access management and encryption to prevent data leaks, as well as user education on best practices for complying with global data privacy laws.

Innovations in Security

New security capabilities are central to good AI governance, and Microsoft Purview plays a key role in governing Microsoft 365 Copilot through data classification and policy management. Purview scans and catalogs the data sources Copilot can access and labels data based on its sensitivity and importance. Sensitive data is then protected through access restrictions, data masking, or encryption, which keeps security and compliance intact.
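Classifiers of this kind typically combine a pattern with a validation check so that random digit strings are not mistaken for sensitive data. The Python sketch below shows the idea for payment card numbers: a regular expression finds 13 to 16 digit candidates, the Luhn checksum confirms them, and confirmed numbers are masked to their last four digits. This is a simplified illustration, not Purview’s detection logic; the pattern, the `classify_and_mask` function, and the label names it returns are assumptions.

```python
import re

# Simplified candidate pattern: 13-16 digits, optionally separated by spaces or dashes.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right and sum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify_and_mask(text: str) -> tuple[str, str]:
    """Return a (hypothetical) label and the text with valid card numbers masked."""
    hits = 0

    def repl(m: re.Match) -> str:
        nonlocal hits
        digits = re.sub(r"\D", "", m.group())
        if not luhn_valid(digits):
            return m.group()  # fails the checksum: leave unchanged
        hits += 1
        return "*" * (len(digits) - 4) + digits[-4:]

    masked = CARD_PATTERN.sub(repl, text)
    return ("Confidential" if hits else "General"), masked


label, masked = classify_and_mask("Card 4111 1111 1111 1111 on file, order 1234567890123 pending.")
print(label)   # Confidential
print(masked)  # Card ************1111 on file, order 1234567890123 pending.
```

The order number matches the pattern but fails the checksum, so it is left untouched; this is why validation matters as much as the pattern.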

Microsoft Purview Compliance Manager offers premium AI templates that help you assess, manage, and report on AI compliance risks. The templates help align AI use with organizational policies and standards, supporting ethical AI use and helping prevent inappropriate sharing of sensitive data.

By combining proactive monitoring with thorough compliance management, Microsoft Purview provides a comprehensive approach to AI governance in which AI interactions are tracked and managed.

By staying ahead of regulatory changes and adopting new security capabilities, you can govern AI technologies like Microsoft 365 Copilot effectively.

In conclusion, securing Microsoft 365 Copilot is essential for your organization. You need to address problems like oversharing and unauthorized access to keep sensitive data safe. Strong security measures, such as encryption at rest and in transit and strict access controls, help you comply with regulations like GDPR and HIPAA.

Building a culture of awareness and providing proper training improves user adoption and helps you get the most out of AI tools. Remember, good governance is not just about following rules; it’s about helping your team use Copilot responsibly and efficiently.

FAQ

What is Microsoft 365 Copilot?

Microsoft 365 Copilot is an AI tool that works with Microsoft 365 apps. It helps you be more productive by automating tasks, giving insights, and improving teamwork in apps like Word, Excel, and Teams.

How does Copilot ensure data security?

Copilot uses many security methods, like encryption, sensitivity labels, and strict access controls. These features keep sensitive data safe and help follow rules like GDPR and HIPAA.

Can I customize Copilot’s settings?

Yes. You can configure Copilot to match your organization’s policies by adjusting permissions, sensitivity labels, and access controls so that it is used safely and correctly.

What training is available for using Copilot?

Microsoft has many training resources for Copilot users. These include online tutorials, webinars, and documents to help you learn best practices and security methods.

How can I monitor Copilot’s usage?

You can review Copilot usage through the Microsoft Purview compliance portal, which provides insight into user activity, data changes, and compliance checks to support good governance.