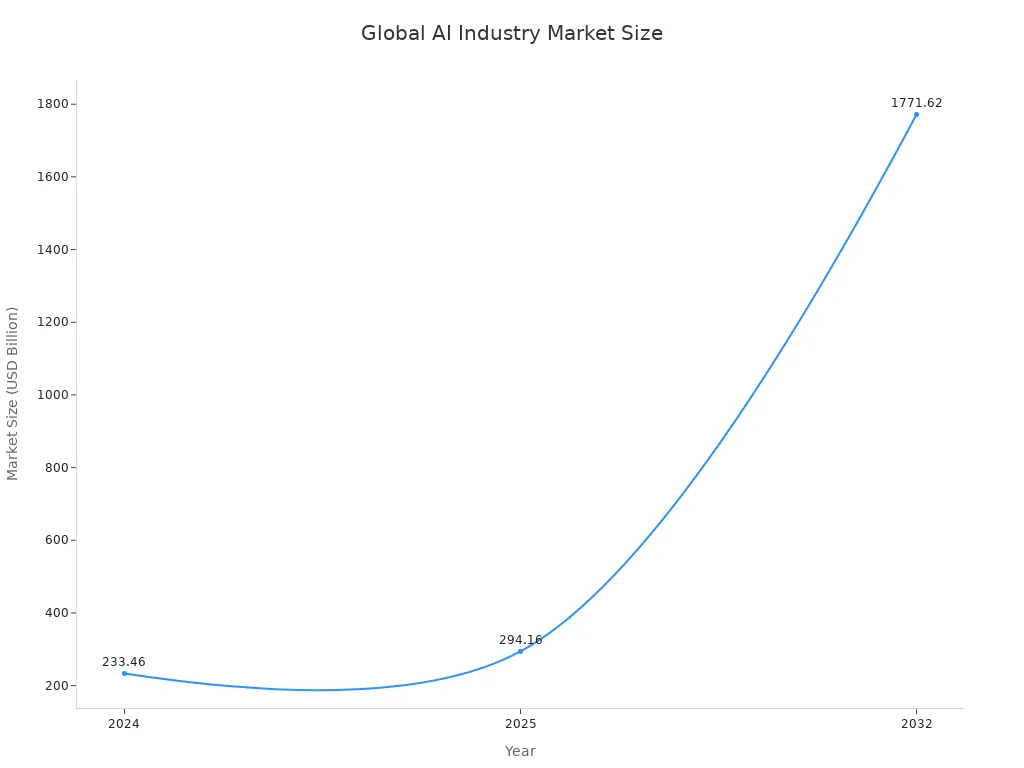

AI is transforming scientific discovery, presenting powerful capabilities that can be leveraged for both beneficial and harmful purposes. While its utility is undeniable, the technology also carries significant dangers, particularly in the realm of biological AI, which introduces novel challenges for living systems. The rapidly expanding AI market underscores the urgency of addressing these concerns.

Microsoft Research is actively engaged in studying these potential biological impacts and mitigating the associated dual-use risks. Proactive measures are essential to ensure the safe development and deployment of AI, necessitating careful management of its immense power.

Key Takeaways

AI can be used for good or bad. This is especially true for biological AI, which can create new dangers.

AI can help design new germs. These germs can be hard to detect. This makes bioweapons easier to make.

Microsoft tests AI systems like an attacker. This is called red-teaming. It helps find weak spots and makes AI safer.

Microsoft uses special rules for AI. These rules control who can see sensitive AI tools and data. This helps keep powerful AI safe.

Sharing AI information carefully is important. This helps prevent bad uses of AI. It also helps science grow safely.

Understanding AI’s Dual-Use Risks

The Nature of Dual-Use AI

Dual-use technology serves both civilian and military purposes. The term once referred mainly to nuclear materials, but it now covers many newer technologies. Artificial intelligence (AI) is a leading example, because it can make almost any machine or system smarter. Earlier technologies show the same pattern. Rockets were weapons before they carried peaceful space missions, and GPS was built for the military before the public adopted it. Nuclear power is another example.

AI’s dual-use problem comes from its own traits. It is general-purpose: it solves many kinds of problems across many fields, improves decisions, and reveals options people had not considered. The same qualities raise dual-use concerns in every area of science. AI makes complex scientific and industrial tasks easier, which lowers barriers to entry and reduces the uncertainty and risk involved. In life science research, it puts advanced tools in the hands of people with few resources. AI programs and biological data are also digital, so they are easy to copy and share, which makes their spread hard to stop.

Biological AI: New Threat Vectors

Biological AI brings new dangers. Widely available AI systems make it easier to create pathogens without expert training. Large language models, for example, can share expert-level knowledge about viruses, which could help bad actors get past technical problems they could not solve alone. This raises bio-risk considerably: bioweapon skills become cheaper and easier to acquire, more people can acquire them, and an intentional release becomes more likely. Cutting-edge biological AI models already exist. One is Evo 2, developed by the Arc Institute with Stanford and other collaborators, which models how genetic sequences behave and designs new ones, reducing the need for human experts.

By widening access to advanced tools and methods, AI unintentionally makes it easier for more people to misuse biological agents. Past bioweapon programs were limited by a lack of technical skill. AI can now fill that gap by helping actors who lacked skills or resources to design, produce, and deploy such agents. AI can design new viruses, proteins, and other biological products. For example, AlphaProteo is an AI system that designs new, high-strength protein binders for target proteins. Its targets include VEGF-A, which is linked to cancer, and the SARS-CoV-2 spike protein. These biological AI capabilities are powerful, and in the wrong hands they are dangerous.

Balancing Innovation and Security

We must balance innovation with safety if AI is to grow responsibly. Companies need sound AI governance: AI use should match business goals, teams’ AI knowledge should be assessed, and there should be clear rules for using AI tools. A good governance framework sets out a clear AI plan, stays flexible, and fits company goals. Older policies on data privacy, cybersecurity, and AI use also need updating. Regular AI risk assessments should cover model safety, training data, bias, system vulnerabilities, data privacy, compliance, and access controls.

Regular policy reviews matter too. Experts from IT, security, legal, and business units should carry out both scheduled and unscheduled reviews. AI governance should become part of company culture, which means clear AI ethics guidelines, open discussion, employee input on the rules, and training whenever policies change. AI development also needs a “secure by design” approach. Sandboxed testing environments help contain major risks. Clear procedures for vetting new AI tools, together with AI-specific risk assessments, keep the organization safe while it uses AI’s strengths.

AI’s Immediate Biological Threats

Designing Undetectable Pathogens

AI’s biological threats are immediate, not just theoretical. Scientists have found that AI protein design tools can alter toxic proteins so that they keep their shape and function but evade detection. Screening software often fails to catch these “paraphrased” versions, which are new sequences that work like known proteins but look harmless. AI can also rearrange DNA sequences to fool detection software. Microsoft scientists tested this in a “red teaming” exercise. Screening programs caught natural dangerous proteins but struggled to flag AI-made variants, and some dangerous toxins still got through even after the software was updated. This is a serious security gap.

Creating “Zero-Day” Biological Threats

AI also creates “zero-day” threats: new threats that current systems cannot detect. AI-designed proteins can act like toxins while differing enough from known sequences to fool current screening software. Scientists used AI to redesign toxins such as ricin, and the redesigned versions passed DNA screening checks. Many AI-made versions might not work, but the few that remain active would go undetected. Microsoft scientists called these weak spots “zero-day” flaws. They used protein design models to rewrite toxins so that the results evaded detection while potentially keeping their harmful function.

This shows AI’s two sides: the same tools that help find new medicines can be turned into weapons. AI can design gene sequences for toxins that bypass human defenses and remain dangerous, much like a biological cyberattack.

AI’s biology capabilities are growing fast. AI systems can help troubleshoot experiments, which speeds up testing and could supply the knowledge needed to make biological agents. AI could make bioweapons more effective or help target them at specific groups, which would change how countries might use them. It could also help create highly transmissible, highly lethal “superviruses.” OpenAI’s models are reportedly close to being able to help beginners recreate known biological threats. AI protein engineering strengthens these capabilities further, and AI-designed viruses could also appear. These threats are a real concern.

Microsoft Research’s Mitigation Strategies

Proactive Threat Identification

Microsoft Research acts early on AI’s dual-use risks, finding dangers before they cause harm. Microsoft works with OpenAI to share research on emerging AI threats, including activity by known threat actors such as Forest Blizzard, Emerald Sleet, and Crimson Sandstorm. Microsoft security experts also find new vulnerabilities in AI models and systems. Microsoft Threat Intelligence has stopped phishing attacks that used AI-generated code to hide what they were doing. Identifying AI attack methods early in this way helps keep AI’s dual-use capabilities in check.

Red-Teaming AI Systems

Microsoft describes AI red teaming as thinking like an attacker. It surfaces hidden problems, challenges assumptions, and looks for system failures. Microsoft formed its AI Red Team in 2018. The team brings together experts in security, adversarial machine learning, and responsible AI, and it draws on resources across Microsoft, including the Fairness Center in Microsoft Research, AETHER, and the Office of Responsible AI. Red teaming happens at two levels: base models, such as GPT-4, and applications, such as Security Copilot. Each level helps reveal how AI can be misused and exposes model limitations early.

AI red teaming borrows from traditional penetration testing and software attack methods, but it also deals with risks that are unique to AI. These include prompt injection and model poisoning, as well as Responsible AI (RAI) issues such as unfairness, plagiarism, and harmful content. Traditional red teaming usually gets the same result every time a given attack path is run. AI systems are different: they can give different answers to the same question. This makes AI red teaming probabilistic, so a single clean run proves little.
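Because the same prompt can succeed on one attempt and fail on the next, a red team has to repeat each probe and report a rate rather than a single pass or fail. The sketch below illustrates the idea only. The names `estimate_failure_rate`, `toy_model`, and `is_unsafe` are hypothetical, and the toy model is a random stand-in, not a real AI system or any Microsoft tool.

```python
import random

def estimate_failure_rate(model, prompt, is_unsafe, trials=200):
    """Send the same prompt `trials` times and return the fraction of unsafe replies."""
    failures = sum(1 for _ in range(trials) if is_unsafe(model(prompt)))
    return failures / trials

# Hypothetical stand-in for a non-deterministic AI system:
# it returns an unsafe reply on roughly 5% of calls.
_rng = random.Random(0)  # fixed seed so the sketch is repeatable

def toy_model(prompt):
    return "UNSAFE" if _rng.random() < 0.05 else "refused"

rate = estimate_failure_rate(
    toy_model,
    "same adversarial prompt",
    is_unsafe=lambda reply: reply == "UNSAFE",
)
print(f"Estimated failure rate over 200 trials: {rate:.1%}")
```

A single trial of this toy model would usually look safe, which is why repeated sampling matters.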

Microsoft also advises companies on adding red teaming to their security plans. Companies can use free tools that Microsoft has released, including Counterfit and the Python Risk Identification Tool (PyRIT), to find potential risks in AI systems. A guide to building red teams for large language models is also available. Its steps are to learn about red teaming, set clear goals, build a diverse team, run practice exercises, and analyze the results.

Microsoft’s approach to red-teaming AI systems has several parts. The AI Red Teaming Agent runs automated scans that simulate an attacker. This quickly finds and evaluates known risks and helps teams catch problems before deployment. Microsoft follows NIST’s AI Risk Management Framework: identify risks, define the context of use, measure risks broadly, manage them in production, and monitor with an incident response plan. Automated scans run at three stages. During design, teams pick the safest base model. During development, they scan again when updating or fine-tuning models. Before deployment, they scan the finished generative AI application.

To run these scans, the AI Red Teaming Agent uses curated sets of seed prompts or attack objectives, along with attack strategies from PyRIT that try to bypass an AI system’s safeguards. A specialized large language model plays the attacker, and Risk and Safety Evaluators check each response for harmful content. The Attack Success Rate (ASR), the share of attacks that produce a harmful response, shows how vulnerable the AI system is. The agent covers text scenarios in risk categories that include Hateful and Unfair Content, Sexual Content, and Violent Content.
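The Attack Success Rate is simple arithmetic: successful attacks divided by total attacks, usually broken down by risk category. The sketch below shows that calculation on made-up results. The function name, the category labels, and the sample data are illustrative assumptions, not output from or the API of the AI Red Teaming Agent.

```python
from collections import defaultdict

def attack_success_rate(results):
    """Compute ASR from (risk_category, attack_succeeded) pairs.

    Returns (overall_asr, asr_per_category), each as a fraction in [0, 1].
    """
    totals = defaultdict(int)
    successes = defaultdict(int)
    for category, succeeded in results:
        totals[category] += 1
        if succeeded:
            successes[category] += 1
    per_category = {c: successes[c] / totals[c] for c in totals}
    overall = sum(successes.values()) / len(results) if results else 0.0
    return overall, per_category

# Made-up scan results: True means the evaluator judged the reply harmful.
sample = [
    ("hateful_unfair", False), ("hateful_unfair", True),
    ("sexual", False), ("sexual", False),
    ("violent", True), ("violent", False), ("violent", False), ("violent", False),
]

overall, per_category = attack_success_rate(sample)
print(f"Overall ASR: {overall:.0%}")
for category, asr in per_category.items():
    print(f"  {category}: {asr:.0%}")
```

Reporting the rate per category keeps one weak category from being hidden by a low overall number.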

The Microsoft AI Red Team uses several methods, each with its own goal. Adversarial testing manipulates AI systems with adversarial examples to probe for weaknesses, such as biased decisions or safety filters that can be bypassed, and so confirms how robust the system is. Bias audits check how AI systems behave against Microsoft’s Responsible AI Principles, which include fairness, accountability, transparency, and inclusiveness. Security testing simulates threats such as data poisoning attacks, API vulnerabilities, and attempts to reverse-engineer models.

This work serves four aims. It promotes fairness by checking for bias inherited from large data sets, which protects people’s trust. It prevents harm by finding risks such as biased decisions and misinformation before users encounter them. It strengthens security by finding and stopping attackers before they exploit weaknesses in AI systems. It also supports compliance with regulations such as the EU’s AI Act.

Red teaming covers traditional security risks, such as outdated software components and improper error handling, as well as model-specific weaknesses such as prompt injection. Human expertise remains essential. Automated tools help generate prompts, plan attacks, and score responses, but human experts are needed to judge content in specialized fields such as medicine and cybersecurity, and to assess psychological and social harms that language models handle poorly. Reducing risk in generative AI takes a layered approach: continuous testing, strong defenses, and strategies that adapt over time. Ongoing red teaming keeps finding and fixing weaknesses, which makes AI systems stronger, makes attacks harder, and deters attackers.

Red-teaming work has uncovered specific weaknesses. Generative AI can help criminals sound authentic, spread narratives in many languages, and deceive people without detection. It can produce realistic images that mislead people and fuel conflict between groups. AI models can cause harm both when people deliberately manipulate them and through their own internal logic, which developers may overlook. The findings fall into several groups: security vulnerabilities specific to AI systems, such as prompt injection and poisoning; fairness problems, such as reinforcing stereotypes; harmful content, such as glorifying violence; failures triggered by ordinary users, who can end up producing harmful content; and new kinds of harm, such as risky persuasive abilities in state-of-the-art large language models. Psychological and social harms add to the traditional security and responsible AI issues. Together, these findings show how complex AI’s dual-use nature is.

Developing AI Safeguards

Microsoft Research also builds specific safeguards against AI’s dual-use risks. Over 10 months, Microsoft scientists worked with DNA synthesis companies to develop and deploy a security fix for a biological vulnerability they had found. The work followed new biosecurity “red-teaming” procedures adapted from cybersecurity incident response. The resulting patch was distributed to DNA synthesis companies worldwide, which strengthens screening systems against AI-designed sequences.

Microsoft also set up a tiered access system for the sensitive data and methods, working with the International Biosecurity and Biosafety Initiative for Science (IBBIS). Access is controlled: a panel of biosecurity experts reviews each request so that only legitimate researchers get access. Data and code are sorted into tiers according to how hazardous they are. Approved users sign agreements that include non-disclosure terms. The system is built to last, with provisions for declassification and for succession. Microsoft also gave IBBIS funding meant to cover, indefinitely, the cost of storing the sensitive biological data and software and of running the sharing program. These steps help keep biological AI models safe and their capabilities under control.

Addressing Dual-Use Capabilities in Practice

The Paraphrase Project Model

Microsoft Research runs dedicated projects to manage dual-use capabilities. The Paraphrase Project addresses dual-use AI risks where biosecurity meets AI protein design. Researchers showed that AI can generate harmful proteins that slip past existing defenses, and the project made screening systems stronger as a result. The name comes from how the models “paraphrase” proteins: they change the amino acid sequence while keeping the biological function. Using models such as EvoDiff, researchers generated many synthetic toxin variants and tested existing biosecurity screening systems against them. They found they could preserve a protein’s key regions while changing the rest of its sequence, so the protein could keep working while its sequence no longer matched known threats. They then developed new ways to detect these changes, which showed that screening systems can adapt. The project also produced a “red-teaming” process for testing biological screening tools for weaknesses.

Paraphrase detection in ordinary text shows a similar weakness. On the PARAPHRASUS benchmark, which tests paraphrase detection, modern large language models (LLMs) such as Llama3 struggled, and simple word changes were enough to confuse them. Other foundation models had similar problems. Detection systems need to improve.

Tiered Access for Sensitive AI

Microsoft Research uses tiered access levels for sensitive AI tools, biological data, and methods. Role-Based Access Control (RBAC) sets permissions for specific jobs, so that access matches a person’s duties and the project stage. Attribute-Based Access Control (ABAC) grants access dynamically according to rules such as time limits and location restrictions. Continuous verification evaluates every access request, checking the user’s identity, device security, and network location. Behavioral analytics watch for patterns in AI development work, where deviations can signal a security problem. Adaptive security controls adjust quickly in response to risk assessments and threat intelligence. Together, these measures help manage dual-use AI capabilities and protect sensitive biological information and biological AI models.
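The way role-based and attribute-based checks combine can be shown with a small sketch. Everything in it is an assumption made for illustration: the role names, the tier numbers, the allowed networks, and the working-hours rule are hypothetical and do not describe Microsoft’s or IBBIS’s actual system.

```python
from dataclasses import dataclass
from datetime import datetime, time

# Hypothetical mapping from role to the most sensitive tier it may read.
ROLE_MAX_TIER = {"public": 0, "vetted_researcher": 1, "biosecurity_reviewer": 2}
ALLOWED_NETWORKS = {"corp", "vpn"}          # hypothetical location rule
ACCESS_WINDOW = (time(8, 0), time(18, 0))   # hypothetical time rule

@dataclass
class AccessRequest:
    role: str
    resource_tier: int
    device_compliant: bool
    network: str
    timestamp: datetime

def is_allowed(req: AccessRequest) -> bool:
    """Evaluate one request; every request is checked, with no cached approvals."""
    # Role-based check: the role must be cleared for the resource's tier.
    if req.resource_tier > ROLE_MAX_TIER.get(req.role, -1):
        return False
    # Tier 0 is public material, so no attribute checks apply.
    if req.resource_tier == 0:
        return True
    # Attribute-based checks: device health, network location, time of day.
    in_window = ACCESS_WINDOW[0] <= req.timestamp.time() <= ACCESS_WINDOW[1]
    return req.device_compliant and req.network in ALLOWED_NETWORKS and in_window

request = AccessRequest("vetted_researcher", 1, True, "vpn", datetime(2025, 1, 6, 10, 0))
print(is_allowed(request))
```

Unknown roles map to tier -1 in this sketch, so they are denied by default even for public material.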

Responsible Information Sharing

Sharing information wisely is central to managing dual-use risks. Microsoft Research favors careful sharing of biological AI capabilities and models, so that powerful AI tools benefit society without enabling misuse. It works with groups such as IBBIS to set sound rules and build access controls for sensitive biological research. These partnerships balance scientific progress against the need to contain hazards, which keeps biological AI development safe and its capabilities ethically used.

Microsoft Research’s work also offers lessons about building AI responsibly from the start. Organizations should support new users and AI enthusiasts with knowledge and training, so they can become leaders who show others how to use AI well. They need a culture of trust, responsibility, and continuous learning that builds responsible AI into everyday work. Companies should plan before they build: first decide which practices make AI responsible, then build tools to support those practices. Bad data must be fixed through close inspection and testing, because good data makes AI accurate.

Responsible AI is a journey, not a one-time change, and it takes collaboration. Different groups in a company can set goals and standards together, drawing on many skills and perspectives. The order is principles first, then practices, then tools. Existing experts in privacy, security, and compliance are especially valuable when the technology is new. These steps help manage AI risks and make development safer, which matters most for biological AI with its many dual-use risks. Good biosecurity comes from working together.

The AI community must act now to manage dual-use risks. Microsoft Research recommends testing AI early through red teaming, which makes AI safer and more trustworthy. Because AI generates new things quickly, screening and safety measures must adapt just as quickly. We cannot wait for threats to appear. We must look for them continuously and ahead of time, so that risks are found before bad actors find them.

This is a capability-based risk assessment. It looks past an AI system’s intended use to everything the system can do, and checks that for potential harm. The approach matters most for biological AI, where the dual-use problem is clearest: the same powerful capabilities that advance science could be used to design new pathogens. Evaluation methods must change to handle these problems, which includes looking at the biological data and information a model can work with. Foundation models are an example. They can do many things and can use complex biological data, so assessing their risks is essential.

How scientists share sensitive research must also change. Researchers need to think about how and when they publish, who sees the work, what they share, and what terms govern its use. Sharing can be delayed, staged, or scheduled. Audiences can be limited to trusted partners or specific experts. What is shared can be ideas, models, or code. Terms of use can be guidelines, licenses, or contracts that limit how the work is used. The scientific community can learn from other high-risk fields.

Computer security uses “coordinated disclosure”: people who find vulnerabilities tell the software maker first and the public later. Synthetic biology uses biosafety levels and temporary pauses on some gene-editing work. Nuclear engineering has “born secret” rules, and national security has rules for sharing information. Both try to balance secrecy against openness and public knowledge.

Researchers should weigh harmful effects alongside beneficial ones, and research review should ask about harms explicitly. The harms to consider are broad: psychological, physical, cognitive, social, political, and economic harms, as well as harms to groups. Sharing plans that lower these risks help ensure biological research is shared responsibly, which supports both dual-use management and biosecurity. Because large language models can spread information widely, responsible sharing matters even more for AI.

Microsoft Research’s work shows how to handle AI risks, especially in biological AI: find dangers early and test AI systems the way an attacker would. Managing AI risk never ends. It takes constant vigilance, adaptation, and teamwork among scientists, leaders, and companies. AI can do great things, but only careful management of its risks will ensure that it helps people safely and protects all living things.

FAQ

What are AI’s dual-use risks?

AI can be used for good. It can also be used for bad. This is a dual-use risk. We must be careful with AI.

How does AI create new biological threats?

AI can help design new viruses and proteins. These can be hard to detect. This makes it easier to create harmful germs. This is a big danger.

What is “red-teaming” in AI security?

Red-teaming tests AI. It acts like an attacker. It finds weak spots. This makes AI safer. Microsoft does this for its AI.

How does Microsoft Research manage sensitive AI information?

Microsoft uses different access levels. This controls who sees important data. Only approved scientists can get in. This keeps strong AI tools safe.

Why is responsible information sharing important for AI?

Sharing AI research carefully helps stop bad use. It helps science grow. It also keeps things safe. This is key for strong AI tools.