Businesses are increasingly seeking greater control over their AI, a significant shift driven by concerns over data privacy and cost efficiency. Specialized AI tools often come with a hefty price tag from vendors, who frequently impose egress fees and charge exorbitant rates for customizations. These factors can create vendor lock-in, which makes reducing dependency on external AI solutions a critical step for businesses that want to adopt AI successfully. This blog explores strategies for establishing self-managed AI, with a focus on enhancing privacy and improving overall AI governance.

Key Takeaways

Relying too heavily on outside AI models can cause problems with data privacy, high costs, and reduced control.

Companies can build their own AI teams and systems. This gives them more control, saves money later, and helps them make AI that fits their needs.

Open-source AI models let companies customize AI, lower costs, keep data safer, and run AI tasks themselves.

Mixing outside and in-house AI can work. Use outside models for less sensitive tasks, keep important AI work in-house, and always protect data.

Managing data well is key for building your own AI. It makes AI work better and faster, and it helps avoid legal problems.

Understanding Third-Party AI Risks

Data Privacy and Security

Sending private data to outside AI models is risky. It can lead to privacy violations, data leaks, and regulatory breaches. The Clearview AI case, for example, set legal precedents and led to lawsuits against both the company and the organizations that used its technology. Recent incidents show the same dangers. A flaw in Ollama, an AI tool, leaked user data. Attackers injected malicious code into releases of the Ultralytics AI library, which infected many computers. These events show the need for strong cybersecurity and ongoing monitoring for security problems.

Poor data handling often violates privacy regulations. Rules like GDPR, CCPA, and HIPAA are strict: they require that data be kept safe and encrypted, they limit who can see it, and they demand clear data-handling policies. Italy temporarily banned OpenAI’s ChatGPT in 2023 over GDPR concerns. A hospital was fined because its vendor’s AI leaked patient data. These cases show how serious privacy failures can become, so companies must ensure their AI partners follow these rules.

Vendor Lock-in and Control

Relying on a single outside vendor limits a business’s control over its AI projects. It can mean less customization and a risk of service interruptions. AI vendors also change prices often. OpenAI changed its prices in 2024: some API costs went down, but new AI models can cost 5 to 20 times more. This makes budgets hard to plan.

Hidden costs also appear with long-term use of outside AI. When different teams use different vendors, work gets duplicated and redundant solutions pile up. This creates data silos and consistency problems, and it increases the risk of compliance violations. A 2024 IDC report found that two out of three companies running many AI systems had broken data rules. Managing many AI systems is also harder and creates more cybersecurity problems. Vendor lock-in weakens a company’s bargaining position and makes reducing dependency tough.

Cost and Unpredictability

Usage-based AI pricing can be costly and hard to predict, and costs can grow fast. For example, GPT-4.1 charges up to $3.00 per 1M input tokens. Prompt inflation, where prompts get longer over time, means more tokens and much higher costs. Because every extra token is billed, hidden costs can add up unnoticed. For an app with 10,000 daily users, a small cost adds up: at one $0.02 interaction per user per day, the bill reaches $6,000 over a 30-day month. This financial risk needs careful handling.
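
The arithmetic behind that estimate is easy to reproduce. The sketch below is only an illustration: it assumes one interaction per user per day and a 30-day month, and the prompt lengths used to show prompt inflation (500 and 2,000 tokens) are made-up values, not measurements.

```python
def monthly_cost(daily_users: int, interactions_per_user: float,
                 cost_per_interaction: float, days: int = 30) -> float:
    """Estimated monthly spend for a usage-priced AI feature."""
    return daily_users * interactions_per_user * cost_per_interaction * days


def input_token_cost(tokens: int, price_per_million: float) -> float:
    """Cost of sending `tokens` input tokens at a per-1M-token price."""
    return tokens / 1_000_000 * price_per_million


# The article's example: 10,000 daily users at $0.02 per interaction,
# assuming one interaction per user per day.
baseline = monthly_cost(10_000, 1, 0.02)

# Prompt inflation: the same request after the prompt grows from 500 to
# 2,000 tokens (hypothetical lengths), priced at $3.00 per 1M input tokens.
short_prompt = input_token_cost(500, 3.00)
long_prompt = input_token_cost(2_000, 3.00)

print(f"baseline monthly cost: ${baseline:,.2f}")
print(f"input cost per request: ${short_prompt:.4f} -> ${long_prompt:.4f}")
```

Plugging in your own traffic and prompt sizes gives a rough budget range before any contract is signed.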

Performance and Customization

Outside AI models often don’t fit business needs, so they don’t work as well. Ready-to-use AI offers limited customization, and only within the vendor’s set rules. It might not handle the complex logic that some business uses depend on. Generic AI models are often not flexible enough for specific business cases.

But smaller, fine-tuned models can beat bigger models on specialized tasks. Studies show fine-tuned 27B models beating GPT-4 on specialized tasks by 60%. Custom AI models focus on specific goals, such as accuracy or efficiency, which helps them outperform general models in specialized cases.

AI Governance and Third-Party Risk Management

It is important to identify AI risks and address them at every stage of the software lifecycle. An AI governance group should set the rules: which AI tools may be used and which data is allowed. People must oversee key decisions. Keeping records for every AI system is also vital.

It is hard to verify the provenance of outside data, including who created it, its copyright status, and its basic accuracy. Companies must review the documentation from outside model providers to learn where the data came from and how it was collected. Watermarks and digital signatures can help, and blockchain can also be used to verify sources.

Using outside AI without clear data rules creates legal problems. There are complex IP issues about who owns the data and the AI-generated content. Companies must review and negotiate contracts with outside AI providers so that they spell out rights, duties, and responsibility for data use and IP ownership. Vetting AI vendors is key: it assesses their reputation and checks their policies, including security and data handling. Clear service-level agreements (SLAs) ensure good service. This full risk plan is vital for AI security. It helps find problems and reduce threats, including compromised third-party components. Regular audits and monitoring help find such threats.

Strategies for Reducing AI Dependency

Businesses want more control over their AI projects. They build internal teams, hire skilled people, and invest in AI tools so that they rely less on outside companies. This section also looks at how open-source tools can help, how companies use third-party models for simple, non-sensitive tasks, and why good data is key for in-house AI work. That means building secure data pipelines and tracking all data, for both internal and external AI.

Developing In-House AI Capabilities

Building your own AI has big benefits. You own it completely, and you get solutions made just for you, without the limits outside vendors often impose. It also gives you more control over data and system security.

Hiring and Training AI Talent

Building a strong internal AI team is very important. This means hiring experts and training them continuously. JPMorgan Chase used its own AI tools well: they improved fraud detection, trading decisions, and customer service. The company saved almost $1.5 billion.

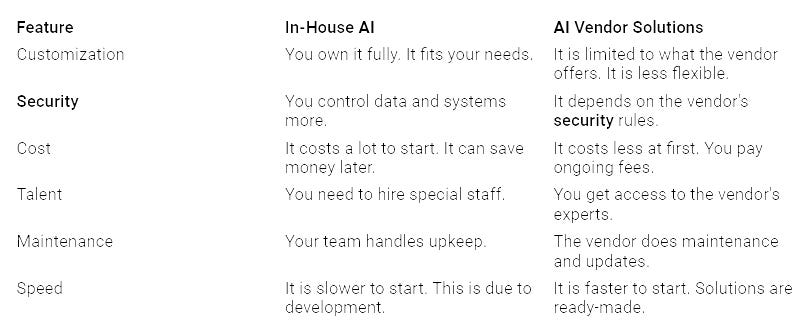

This table compares in-house AI with vendor solutions:

Establishing Internal AI Infrastructure

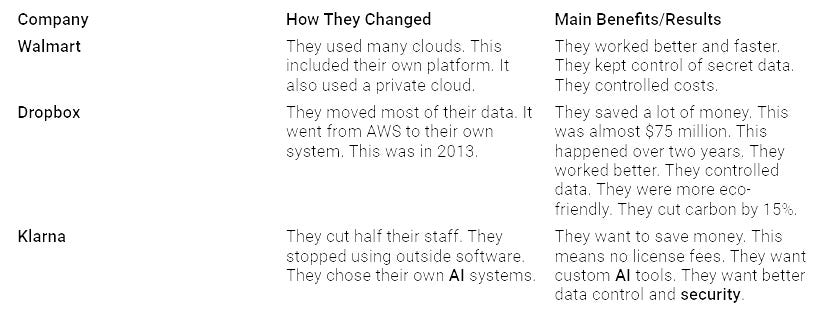

Investing in your own AI tools pays off later. It preserves data privacy and data control. Some companies have moved from outside AI to building their own.

The Washington Post (Heliograf):

Goal: Make readers more engaged and use AI to spread content to small and local audiences.

Answer: They built Heliograf, their own AI tool that writes and shares content. It produced AI newsletters, alerts, and social media posts for different readers. It also tested headlines and improved content delivery.

Big Result: It wrote over 850 articles in its first year. Personalized alerts got 17% more clicks, sponsored content views doubled, and reading time increased by 1.5 times. Newsroom staff had more time.

Other companies also made this change:

These examples show why building your own tools is smart: you get better control, save money, and get solutions made for you.

Leveraging Open-Source AI Models

Open-source AI models are a good choice. They help businesses rely less on proprietary software, give them freedom, and often cost less too.

Identifying Suitable Open-Source Models

Businesses must find open-source models that fit their exact needs. Many groups offer strong AI systems and ready-made models, which can be a good starting point for your own work.

Fine-Tuning Open-Source Models

You can fine-tune open-source models to specialize them: you adapt a general model to fit your business. This often makes it work better on specialized jobs. Fine-tuning also saves a lot of money.

A big law firm used a secure, internal AI model for searching and sorting.

They stopped paying per use.

They cut their yearly AI spending by $500,000.

They also improved compliance and were ready for audits.

Open-source models mean no per-query fees. This makes costs predictable and avoids surprise charges. It also gives smarter data access. Running models only on your own systems keeps data safe, saves money on cloud services, and improves security.

Using open-source AI models in business systems needs care. Companies must have strong policies to lower risk.

Zero Trust AI: Access to models or data is blocked until identity is proven. This means least-privilege access, strong authentication, and continuous verification, including secrets management, Identity and Access Management (IAM), and multi-factor authentication (MFA).

Artificial Intelligence Bill of Materials (AIBOM): An AIBOM provides transparency. It shows where training data comes from, how models are built, and how they work. This helps manage supply chain risk.

Data Supply Chain: Focus on good, complete, and rich data. Business AI pipeline and MLOps tools help manage everything, including setup, building, and checking.

Regulations and Compliance: Follow AI data rules. Examples are the proposed Algorithmic Accountability Act (H.R. 5628) and the EU’s Artificial Intelligence Act.

Continuous Improvement and Enablement: Train all teams on cybersecurity continuously, because AI and its models keep changing.

Companies must also guard against attacks:

Data Pipeline Attack: Attackers exploit the data pipeline to gain access and alter data, which causes privacy problems.

Data Poisoning Attack: Bad data is inserted into training sets so that the model behaves incorrectly.

Model Control Attack: Malware takes over the model and makes it produce wrong decisions.

Model Evasion Attack: Input data is altered in real time to change the AI’s answers.

Model Inversion Attack: Attackers work backward from the model to steal training data and personal information.

Supply Chain Attack: Attackers compromise third-party software components during model training or use, insert malicious code, and take control of the model.

Denial of Service (DoS) Attack: AI systems are flooded with requests until they slow down.

Prompt Attack: Deceptive techniques are used to trick users into giving up secret information.

Unfairness and Biased Risks: AI systems give unfair results that show prejudice, which causes ethical and legal problems.

Rules for AI governance are key. They set security standards for data privacy, asset management, and ethics, and they address AI’s special risks, including open-source components. An AI Bill of Materials (AIBOM) lists all AI components and their dependencies, which helps uncover hidden AI risk. Companies must vet outside AI models and vendors carefully, and they should use automated security testing to lower risk.
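
To make the AI Bill of Materials idea concrete, here is a minimal sketch of an inventory that records each component, where it came from, and what it depends on. The field names and the example components are hypothetical and do not follow any particular standard; a real inventory would likely carry more detail, such as licenses and checksums.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    kind: str        # "model", "dataset", or "library"
    version: str
    source: str = ""  # where it came from; empty means unknown
    depends_on: list[str] = field(default_factory=list)


def transitive_dependencies(bom: dict[str, Component], name: str) -> set[str]:
    """Everything `name` depends on, directly or indirectly."""
    seen: set[str] = set()
    stack = list(bom[name].depends_on)
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            if dep in bom:  # dependencies not in the inventory are still reported
                stack.extend(bom[dep].depends_on)
    return seen


def missing_provenance(bom: dict[str, Component]) -> list[str]:
    """Names of components whose origin was never recorded."""
    return sorted(c.name for c in bom.values() if not c.source)


# Hypothetical inventory for one in-house model.
components = [
    Component("support-classifier", "model", "1.2", "internal training run",
              ["base-llm", "ticket-dataset", "tokenizer-lib"]),
    Component("base-llm", "model", "7b", "open-source model hub",
              ["web-crawl-dataset"]),
    Component("ticket-dataset", "dataset", "2024-06", "internal CRM export"),
    Component("web-crawl-dataset", "dataset", "unknown"),
    Component("tokenizer-lib", "library", "0.15", "internal package mirror"),
]
bom = {c.name: c for c in components}

print(sorted(transitive_dependencies(bom, "support-classifier")))
print(missing_provenance(bom))
```

Even a simple inventory like this surfaces the hidden risk the paragraph describes: the in-house classifier indirectly depends on a dataset whose origin nobody recorded.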

Hybrid Third-Party Approaches

A hybrid approach means using some third-party AI services for tasks that are less sensitive or not central to the business. This reduces reliance while still using outside help when needed.

Segmenting AI Workloads

Businesses can segment AI tasks. They send less sensitive data to outside AI models and keep sensitive or business-critical AI tasks in-house. This lowers risk.
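
One way to picture this split is a small routing rule that decides where each task may run. The sketch below is an assumption-laden illustration: the sensitivity levels, the `Task` fields, and the conservative policy (only public, non-critical work leaves the company) are all hypothetical choices, not a prescribed scheme.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    CONFIDENTIAL = "confidential"


@dataclass(frozen=True)
class Task:
    name: str
    sensitivity: Sensitivity
    business_critical: bool = False


def route(task: Task) -> str:
    """Return "in_house" or "third_party" for a task.

    Policy (an assumption): anything business-critical or not public
    stays in-house; everything else may go to an outside model.
    """
    if task.business_critical or task.sensitivity is not Sensitivity.PUBLIC:
        return "in_house"
    return "third_party"


tasks = [
    Task("summarize public press releases", Sensitivity.PUBLIC),
    Task("draft replies to customer tickets", Sensitivity.CONFIDENTIAL),
    Task("price-optimization model", Sensitivity.INTERNAL, business_critical=True),
]
for task in tasks:
    print(f"{task.name}: {route(task)}")
```

A stricter or looser policy only changes the body of `route`; the point is that the decision is written down and applied the same way to every workload.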

Data Anonymization Techniques

When using outside AI services, anonymizing data is vital because it keeps sensitive information safe. Good ways to anonymize data include:

Masking: You replace original data with special characters, for example ‘XXX-XXX-XXXX’ for phone numbers.

Hashing: You use a mathematical function that turns data into a fixed-length string. The same input always yields the same output, so it suits consistent anonymization.

Encryption: You scramble data with a key so that it cannot be read without that key. It is reversible, and it keeps data safe in transit and at rest.

Generalization: You make data less specific to lower the risk of identifying someone. For example, use birth year instead of the full date.

Suppression: You remove sensitive information from a dataset completely.

Perturbation: You add noise or small changes to data, which makes individual records uncertain.

Synthetic Data Generation: You create artificial datasets that look like real data but contain no real personal information.

Pseudonymization: You replace real identifiers with pseudonyms. You can reverse it if you hold the key, which suits controlled environments.

Contextual Replacement: You generate new data that fits the context and use it to replace sensitive data. Tools like Faker make realistic fake data.

Recognition and Replacement Pipeline: This is a two-step process. First it finds named entities, then it replaces them using a chosen anonymization method.

These methods preserve privacy even when outside services are needed.
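
A few of the techniques above can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not a production anonymizer: the regular expressions only catch simple US-style phone numbers and basic email addresses, the recognition step uses regex matching where a real pipeline would typically use a named-entity recognizer, the hashing step uses a keyed hash (HMAC-SHA256) so values cannot be guessed without the key, and the `Pseudonymizer` class and its alias format are hypothetical.

```python
import hashlib
import hmac
import re
from datetime import date

PHONE_RE = re.compile(r"\b\d{3}-\d{3}-\d{4}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def mask_phones(text: str) -> str:
    """Masking: replace phone numbers with a fixed pattern."""
    return PHONE_RE.sub("XXX-XXX-XXXX", text)


def hash_value(value: str, key: bytes) -> str:
    """Hashing: keyed SHA-256, so equal inputs map to equal tokens."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def generalize_birth_date(born: date) -> int:
    """Generalization: keep only the birth year."""
    return born.year


class Pseudonymizer:
    """Pseudonymization: reversible aliases, kept in a private mapping."""

    def __init__(self, prefix: str = "PERSON") -> None:
        self.prefix = prefix
        self._forward: dict[str, str] = {}
        self._reverse: dict[str, str] = {}

    def replace(self, real: str) -> str:
        if real not in self._forward:
            alias = f"{self.prefix}_{len(self._forward) + 1}"
            self._forward[real] = alias
            self._reverse[alias] = real
        return self._forward[real]

    def restore(self, alias: str) -> str:
        return self._reverse[alias]


def recognize_and_replace(text: str, key: bytes) -> str:
    """Two steps: find sensitive spans, then replace each with a chosen method."""
    text = mask_phones(text)
    return EMAIL_RE.sub(lambda m: hash_value(m.group(0), key)[:12], text)


demo_key = b"demo-key-not-for-production"
message = "Call 555-123-4567 or mail jane@example.com today"
print(recognize_and_replace(message, demo_key))
```

Only the cleaned text would be sent to an outside service. The key and the pseudonym mapping stay inside the company, which is what makes the reversible methods safe to use.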

Investing in Data Governance

Good, well-managed data is the foundation for building your own AI. Strong data governance pays off a lot.

Better data quality means better decisions and less manual work.

You save money through fewer data leaks and fewer fines.

Easier data management makes you more productive and helps with new ideas.

AI projects start faster and operations run more efficiently.

How to measure better data quality:

Number of data errors found and fixed.

Fewer duplicate records.

Percent of data that meets quality rules.

Time spent cleaning data, before and after governance.

Better data accuracy scores over time.

How to measure following rules:

Fewer rule breaks.

Percent of data that meets rules.
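
Two of these measures, duplicate records and the percentage of data that meets quality rules, are simple to compute. The sketch below is a minimal illustration: the sample records, the choice of `id` as the duplicate key, and the two validation rules are all hypothetical.

```python
from typing import Callable

Record = dict[str, object]
Rule = Callable[[Record], bool]


def duplicate_rate(records: list[Record], key_fields: list[str]) -> float:
    """Share of records that repeat an earlier record's key."""
    seen: set[tuple] = set()
    duplicates = 0
    for record in records:
        key = tuple(record.get(f) for f in key_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return duplicates / len(records) if records else 0.0


def percent_passing(records: list[Record], rules: list[Rule]) -> float:
    """Percent of records that satisfy every quality rule."""
    if not records:
        return 100.0
    passing = sum(all(rule(r) for rule in rules) for r in records)
    return 100.0 * passing / len(records)


# Hypothetical rules: an email must contain "@", an age must be plausible.
rules: list[Rule] = [
    lambda r: "@" in str(r.get("email", "")),
    lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
]

records: list[Record] = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 41},
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 3, "email": "c@example.com", "age": 29},
]

print(f"duplicate rate: {duplicate_rate(records, ['id']):.0%}")
print(f"records meeting quality rules: {percent_passing(records, rules):.0f}%")
```

Running the same checks before and after a governance effort gives the before-and-after comparison the list calls for.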

Investing in data governance helps AI projects start faster, improves operations, and supports better decisions. More reliable AI results make teams more productive and help new ideas grow. It also cuts down on repeated tasks, so teams can focus on insights instead of scrubbing data. This end-to-end approach to managing data is key for AI security and privacy, and it makes the whole supply chain stronger.

Overcoming Transition Challenges

Businesses that want to switch to their own AI face problems. These include high costs and a lack of skilled people. They also need to make sure their AI works well and scales easily.

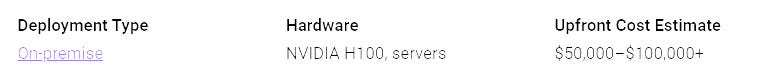

Investment and Resources

Building your own AI team costs a lot. A small team can cost from $400,000 to over $1 million, depending on skills and location. A single NVIDIA H100 GPU, which is key for large AI models, costs about $30,000. Cloud services are flexible and charge by the hour. Setting up your own system costs a lot at first, but it can save money later for in-house AI teams.

Big AI projects can cost from $500,000 to over $5,000,000. This financial risk needs good planning.
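
A rough break-even calculation helps with that planning. The sketch below compares buying one GPU at the $30,000 figure above with renting by the hour. The cloud rate of $3.00 per hour is a placeholder assumption, not a quoted price, and the model ignores power, hosting, staff, and depreciation.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def break_even_hours(purchase_price: float, cloud_rate_per_hour: float) -> float:
    """GPU-hours of use after which buying costs less than renting."""
    return purchase_price / cloud_rate_per_hour


def break_even_months(purchase_price: float, cloud_rate_per_hour: float,
                      utilization: float) -> float:
    """Months to break even when the GPU is busy `utilization` of the time."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    busy_hours_per_month = HOURS_PER_MONTH * utilization
    return break_even_hours(purchase_price, cloud_rate_per_hour) / busy_hours_per_month


GPU_PRICE = 30_000.0      # purchase price cited in the text
ASSUMED_CLOUD_RATE = 3.0  # hypothetical hourly rental rate

hours = break_even_hours(GPU_PRICE, ASSUMED_CLOUD_RATE)
print(f"break-even after {hours:,.0f} GPU-hours")
for utilization in (0.25, 0.5, 1.0):
    months = break_even_months(GPU_PRICE, ASSUMED_CLOUD_RATE, utilization)
    print(f"  at {utilization:.0%} utilization: {months:.1f} months")
```

The lower the utilization, the longer renting stays cheaper, which is why steady, heavy workloads are the usual case for owning hardware.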

Skill Gaps and Talent

It is hard to find AI experts. Companies need an AI strategy with clear goals, and they must get data ready and upgrade their technology. Training HR teams helps, as does continuous learning. Running small pilot projects is important.

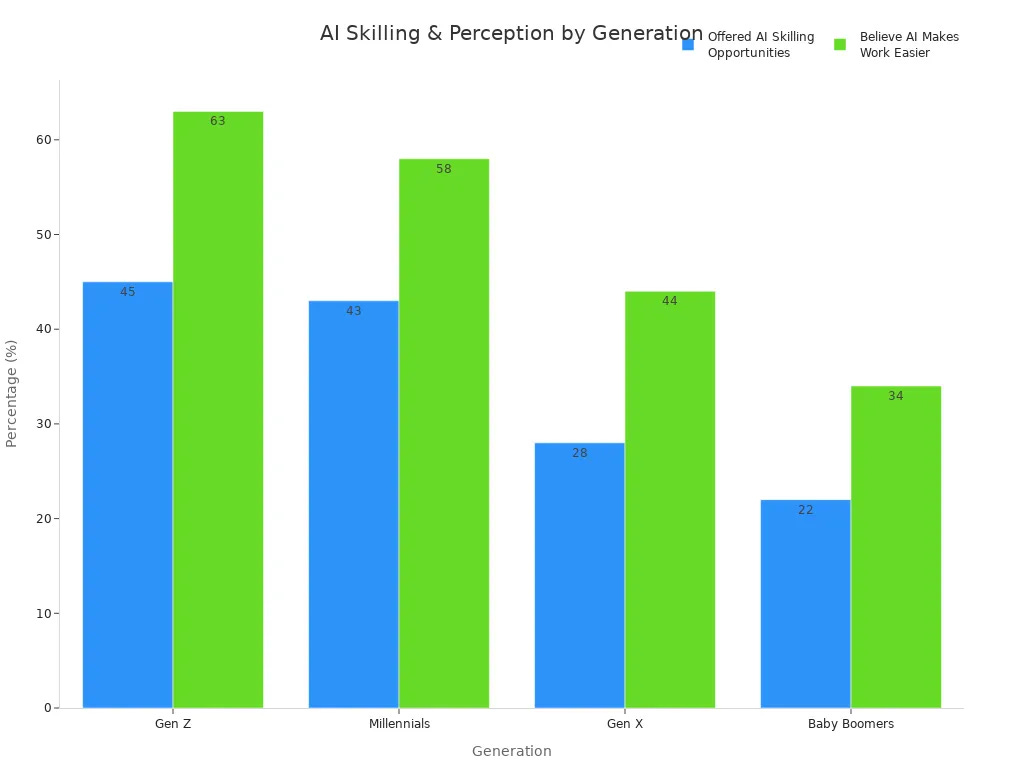

The AI job market has a skills gap. Men report knowing more about AI and get more training. Younger workers, Gen Z and Millennials, get more AI training, while older workers get less.

Randstad CEO Sander van ’t Noordende said: “Not enough skilled people is a big problem. Everyone needs fair chances to learn. They need tools and jobs. This is key to fix this. But, AI demand keeps growing fast. The AI fairness gap is also growing. We must see this. We must act. Otherwise, too few workers will be ready. This will cause more shortages everywhere.”

Companies train their workers and make learning a habit. They use AI tools to help, and they run full training programs with mentors.

Model Performance and Scalability

Making sure AI models work well and meet business needs is hard. Tests often use academic benchmarks rather than real business use cases, so a model that scores well can still use too much memory or run too slowly in production. Generative AI models are tricky: small changes in wording can change how they behave, so a single test score is not enough. Keeping them safe from attacks is key. Integrating AI into legacy systems is another problem.
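
One way to see why a single score is not enough is to run the same test cases under several phrasings and report the spread. The sketch below is a toy illustration: `toy_model` is a deliberately brittle keyword matcher standing in for a real model call, and the prompts and labels are invented.

```python
from statistics import mean
from typing import Callable


def toy_model(prompt: str) -> str:
    """Stand-in for a real model call: a brittle keyword matcher."""
    return "refund" if "refund" in prompt.lower() else "other"


def accuracy(model: Callable[[str], str], prompts: list[str],
             expected: list[str]) -> float:
    """Fraction of prompts for which the model returns the expected label."""
    return mean(1.0 if model(p) == e else 0.0 for p, e in zip(prompts, expected))


def score_across_variants(model: Callable[[str], str],
                          variants: list[list[str]],
                          expected: list[str]) -> dict[str, float]:
    """Score each phrasing of the same test cases and report the spread."""
    scores = [accuracy(model, prompts, expected) for prompts in variants]
    return {"min": min(scores), "mean": mean(scores), "max": max(scores)}


expected = ["refund", "other"]
variants = [
    # Same two cases, phrased two different ways.
    ["I want a refund for my order", "Where is your store?"],
    ["Please give me my money back", "Where is your store?"],
]

print(score_across_variants(toy_model, variants, expected))
```

A wide gap between the minimum and maximum is a sign that the model's behavior depends on wording, which a single benchmark number would hide.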

Companies can scale AI models well by using cloud AI tools, MLOps, transfer learning, and federated learning, and by focusing on good data systems. Optimizing models helps, as does making them easier to interpret. Building AI teams with diverse skills is vital. Investing in AI model evaluation is also key.

Reducing reliance on outside AI models helps businesses a lot. It opens the way to new ideas, gives them an edge over competitors, and makes them more resilient. Companies that control their own AI build proprietary data and smart algorithms, which sets some companies far apart from others. Adopting AI early pays off for a long time. Taking charge of AI is a long journey, not a quick stop, and it needs a careful plan. Businesses should assess how much they depend on outside AI and plan to use their own AI more. This helps them grow and control their AI work.

FAQ

What are the good things about using less outside AI?

Businesses get more control over their data and security. Costs become easier to predict, and they avoid being locked in to one vendor. Models work better for their needs, which helps them innovate and gives them an advantage.

How can businesses start making their own AI?

Businesses should hire skilled people and train them in AI. They need to set up their own AI infrastructure, which means secure data pipelines and the hardware to run AI. This gives them full control and tailored solutions.

How do open-source AI models help use less outside AI?

Open-source models give freedom and save money. Businesses can adapt them to fit specialized jobs, where they can work better than general models. They also keep data safer because the work stays in-house.

Can businesses use a mix of outside and inside AI?

Yes, they can split up AI jobs: use outside models for simple tasks and keep important AI work in-house. Anonymizing data helps keep it private when using outside services.